Details

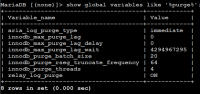

- Type: Bug
- Status: Closed
- Priority: Critical
- Resolution: Fixed
- Affects Version/s: 11.1.1, 11.2.0, 10.6, 10.7 (EOL), 10.8 (EOL), 10.9 (EOL), 10.10 (EOL), 10.11, 11.0 (EOL), 11.1 (EOL), 11.2 (EOL)

Description

The issue of a growing innodb_history_length (that is, a growing disk footprint of the UNDO logs), originally reported in MDEV-29401, is still not solved for certain write-intensive workloads.

Attachments

Issue Links

- blocks
  - MDEV-16260 Scale the purge effort according to the workload (Open)
- causes
  - MDEV-32788 CMAKE_BUILD_TYPE=Debug build failure with SUX_LOCK_GENERIC (Closed)
  - MDEV-33819 The purge of committed history is mis-parsing some log (Closed)
  - MDEV-34515 Contention between secondary index UPDATE and purge due to large innodb_purge_batch_size (Closed)
- relates to
  - MDEV-17598 InnoDB index option for per-record transaction ID (Open)
  - MDEV-26356 Performance regression after dict_sys.mutex removal (Closed)
  - MDEV-29401 InnoDB history list length increased in 10.6 compared to 10.5 for the same load (Closed)
  - MDEV-30628 10.6 performance regression with sustained high-connection write-only OLTP workload (55-80% degradation) (Closed)
  - MDEV-32573 Document UNDO logs still growing for write-intensive workloads (Closed)
  - MDEV-32588 InnoDB may hang when running out of buffer pool (Closed)
  - MDEV-32820 Race condition between trx_purge_free_segment() and trx_undo_create() (Closed)
  - MDEV-32873 Test innodb.innodb-index-online occasionally fails (Closed)
  - MDEV-33213 History list is not shrunk unless there is a pause in the workload (Closed)
  - MDEV-34259 Optimization in row_purge_poss_sec Function for Undo Purge Process (Closed)
  - MDEV-35895 purge_sys_t::close_and_reopen() can busy loop for a long time (Open)
  - MDEV-14602 Reduce malloc()/free() usage in InnoDB (Confirmed)
  - MDEV-18746 Reduce the amount of mem_heap_create() or malloc() (Open)
  - MDEV-32622 [mariadb-galera] The undo log is very large, how can there be fewer undo log logs (Closed)
  - MDEV-33137 Assertion `end_lsn == page_lsn' failed in recv_recover_page (Closed)

Activity

| Field | Original Value | New Value |
|---|---|---|
| Link | This issue relates to |
| Link | This issue relates to |
| Summary | UNDO logs still growing for write-intesive workloads | UNDO logs still growing for write-intensive workloads |
| Link | This issue relates to PERF-411 [ PERF-411 ] |
| Affects Version/s | 11.2.0 [ 29033 ] | |
| Affects Version/s | 11.1.1 [ 28704 ] |
| Fix Version/s | 11.1 [ 28549 ] | |
| Fix Version/s | 11.2 [ 28603 ] |
| Assignee | Axel Schwenke [ axel ] | Marko Mäkelä [ marko ] |
| Description | The issue with growing innodb_history_length (aka growing disk footprint of UNDO logs) that was originally reported in |
| Description | The issue with growing innodb_history_length (aka growing disk footprint of UNDO logs) that was originally reported in | The issue with growing innodb_history_length (aka growing disk footprint of UNDO logs) that was originally reported in |
| Link | This issue relates to |
| Fix Version/s | 10.6 [ 24028 ] | |
| Fix Version/s | 10.10 [ 27530 ] | |
| Fix Version/s | 10.11 [ 27614 ] | |
| Fix Version/s | 11.0 [ 28320 ] | |
| Affects Version/s | 10.6 [ 24028 ] | |
| Affects Version/s | 10.7 [ 24805 ] | |
| Affects Version/s | 10.8 [ 26121 ] | |
| Affects Version/s | 10.9 [ 26905 ] | |
| Affects Version/s | 10.10 [ 27530 ] | |
| Affects Version/s | 10.11 [ 27614 ] | |
| Affects Version/s | 11.0 [ 28320 ] | |
| Affects Version/s | 11.1 [ 28549 ] | |
| Affects Version/s | 11.2 [ 28603 ] | |
| Labels | performance | |
| Priority | Major [ 3 ] | Critical [ 2 ] |
| Status | Open [ 1 ] | In Progress [ 3 ] |
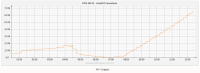

| Attachment | purge-bottleneck-5-10000-128-40s-50s.svg [ 72068 ] |
| Link | This issue relates to |
| Link | This issue blocks MDEV-16260 [ MDEV-16260 ] |
| Status | In Progress [ 3 ] | In Testing [ 10301 ] |
| Assignee | Marko Mäkelä [ marko ] | Matthias Leich [ mleich ] |
| Assignee | Matthias Leich [ mleich ] | Marko Mäkelä [ marko ] |
| Status | In Testing [ 10301 ] | Stalled [ 10000 ] |
| Status | Stalled [ 10000 ] | In Testing [ 10301 ] |
| Assignee | Marko Mäkelä [ marko ] | Axel Schwenke [ axel ] |
| issue.field.resolutiondate | 2023-10-25 08:22:08.0 | 2023-10-25 08:22:08.354 |
| Fix Version/s | 10.6.16 [ 29014 ] | |
| Fix Version/s | 10.10.7 [ 29018 ] | |
| Fix Version/s | 10.11.6 [ 29020 ] | |
| Fix Version/s | 11.0.4 [ 29021 ] | |
| Fix Version/s | 11.1.3 [ 29023 ] | |
| Fix Version/s | 11.2.2 [ 29035 ] | |
| Fix Version/s | 10.6 [ 24028 ] | |
| Fix Version/s | 10.10 [ 27530 ] | |
| Fix Version/s | 10.11 [ 27614 ] | |
| Fix Version/s | 11.0 [ 28320 ] | |
| Fix Version/s | 11.1 [ 28549 ] | |
| Fix Version/s | 11.2 [ 28603 ] | |
| Assignee | Axel Schwenke [ axel ] | Marko Mäkelä [ marko ] |
| Resolution | Fixed [ 1 ] | |
| Status | In Testing [ 10301 ] | Closed [ 6 ] |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue relates to |
| Link | This issue relates to MDEV-17598 [ MDEV-17598 ] |
| Link | This issue relates to |
| Remote Link | This issue links to "Page (MariaDB Confluence)" [ 36306 ] |
| Link | This issue relates to MDEV-14602 [ MDEV-14602 ] |
| Link | This issue relates to |
| Link | This issue relates to |
| Attachment | screenshot-1.png [ 72803 ] |
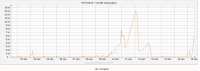

| Attachment | screenshot-2.png [ 72804 ] |
| Attachment | screenshot-3.png [ 72805 ] |
| Link | This issue causes MENT-2044 [ MENT-2044 ] |
| Link | This issue causes MENT-2044 [ MENT-2044 ] |
| Assignee | Marko Mäkelä [ marko ] | Valerii Kravchuk [ valerii ] |
| Assignee | Valerii Kravchuk [ valerii ] |
| Assignee | Marko Mäkelä [ marko ] |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue causes |
| Zendesk Related Tickets | 201658 108492 181007 130146 | |
| Zendesk active tickets | 201658 |
| Link | This issue relates to MDEV-18746 [ MDEV-18746 ] |
| Zendesk active tickets | 201658 | CS0000 201658 |
| Link | This issue relates to MDEV-35895 [ MDEV-35895 ] |

@axel said in slack to marko: