Details

- Bug
- Status: Closed
- Major
- Resolution: Fixed
- 10.5

Description

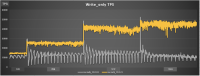

Workload: sysbench OLTP write-only, i.e. as it is used by the regression benchmark suite in t_writes_innodb_multi.

Setup: 16G buffer pool. 4G redo log. 4G or 8G data set. innodb_flush_neighbors = 0, innodb_io_capacity = 1000 (or 5000, 10000)

Observation: throughput starts high but drops after about 1 minute. Over a longer run, throughput oscillates. This seems to be related to Innodb_checkpoint_age reaching Innodb_checkpoint_max_age. There seems to be no LRU flushing at all, only flush_list flushing.
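To check the suspected correlation while the benchmark runs, the two status counters can be sampled and compared. The following is only a sketch: the helper name `checkpoint_pressure`, the 0.9 threshold, and the sample values are hypothetical, and it assumes the counters arrive as the string values that `SHOW GLOBAL STATUS` returns.

```python
def checkpoint_pressure(status, threshold=0.9):
    """Return (ratio, near_limit) for one SHOW GLOBAL STATUS-style sample.

    `status` maps status variable names to their (string or int) values.
    `threshold` is an arbitrary illustrative cut-off, not an InnoDB constant.
    """
    age = int(status["Innodb_checkpoint_age"])
    max_age = int(status["Innodb_checkpoint_max_age"])
    if max_age <= 0:
        raise ValueError("Innodb_checkpoint_max_age must be positive")
    ratio = age / max_age
    return ratio, ratio >= threshold


# Hypothetical samples, one per polling interval; these are not measured data.
samples = [
    {"Innodb_checkpoint_age": "1000", "Innodb_checkpoint_max_age": "4000"},
    {"Innodb_checkpoint_age": "3900", "Innodb_checkpoint_max_age": "4000"},
]

for sample in samples:
    ratio, near_limit = checkpoint_pressure(sample)
    print(f"checkpoint age at {ratio:.0%} of max, near limit: {near_limit}")
```

Logging this ratio next to the per-second throughput reported by sysbench would show whether the drops line up with the ratio approaching 1.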

Issue Links

- blocks
  - MDEV-11659 Move the InnoDB doublewrite buffer to flat files (Open)
  - MDEV-23756 Implement event-driven innodb_adaptive_flushing=OFF that ignores innodb_io_capacity (Open)
  - MDEV-24000 Reduce fil_system.mutex contention (Closed)

- causes
  - MDEV-24049 InnoDB: Failing assertion: node->is_open() in fil_space_t::flush_low (Closed)
  - MDEV-24053 MSAN use-of-uninitialized-value in tpool::simulated_aio::simulated_aio_callback() (Closed)
  - MDEV-24101 innodb_random_read_ahead=ON causes hang on DDL or shutdown (Closed)
  - MDEV-24109 InnoDB hangs with innodb_flush_sync=OFF (Closed)
  - MDEV-24190 Accessing INFORMATION_SCHEMA.INNODB_TABLESPACES_ENCRYPTION may break innodb_open_files logic (Closed)
  - MDEV-24313 Hang with innodb_use_native_aio=0 and innodb_write_io_threads=1 (Closed)
  - MDEV-24348 InnoDB shutdown hang with innodb_flush_sync=0 (Closed)
  - MDEV-24391 heap-use-after-free in fil_space_t::flush_low() (Closed)
  - MDEV-24442 Assertion `space->referenced()' failed in fil_crypt_space_needs_rotation (Closed)
  - MDEV-24537 innodb_max_dirty_pages_pct_lwm=0 lost its special meaning (Closed)
  - MDEV-25093 Adaptive flushing fails to kick in even if innodb_adaptive_flushing_lwm is hit. (possible regression) (Closed)
  - MDEV-25110 [ERROR] [FATAL] InnoDB: Trying to write 16384 bytes at 1032192 outside the bounds (Closed)
  - MDEV-25214 Server crash in fil_space_t::try_to_close or Assertion `!node->is_open()' failed in fil_node_open_file_low (Closed)
  - MDEV-25215 Excessive logging "Cannot close file ./test/FTS_xxx.ibd because of n pending operations and pending fsync (Closed)
  - MDEV-25613 mariabackup.absolute_ibdata_paths caused signal 6 (assertion failure) (Closed)
  - MDEV-25948 Remove log_flush_task (Closed)
  - MDEV-26293 InnoDB: Failing assertion: space->is_ready_to_close() || space->purpose == FIL_TYPE_TEMPORARY || srv_fast_shutdown == 2 || !srv_was_started (Closed)
  - MDEV-30227 [ERROR] [FATAL] InnoDB: fdatasync() returned 9 (Closed)
  - MDEV-30860 Race condition between buffer pool flush and log file deletion in mariadb-backup --prepare (Closed)
  - MDEV-31124 Innodb_data_written miscounts doublewrites (Closed)
  - MDEV-31347 fil_ibd_create() may hijack the file handle of an old file (Closed)
  - MDEV-32150 InnoDB reports corruption on 32-bit platforms with ibd files sizes > 4GB (Closed)

- is blocked by
  - MDEV-23986 [ERROR] [FATAL] InnoDB: Page ... name ... page_type ... key_version 1 lsn ... compressed_len ... (Closed)
  - MDEV-24012 Assertion `cursor->is_system() || srv_operation == SRV_OPERATION_RESTORE_DELTA || xb_close_files' failed. (Closed)

- relates to
  - MDEV-11384 AliSQL: [Feature] Issue#19 BUFFER POOL LIST SCAN OPTIMIZATION (Closed)
  - MDEV-15949 InnoDB: Failing assertion: space->n_pending_ops == 0 in fil_delete_tablespace upon DROP TABLE (Closed)
  - MDEV-20648 InnoDB: Failing assertion: !(*node)->being_extended, innodb.log_data_file_size failed in buildbot, assertion `!space->is_stopping()' (Closed)
  - MDEV-24350 buf_dblwr unnecessarily uses memory-intensive srv_stats counters (Closed)
  - MDEV-24661 The test innodb.innodb_wl6326_big often times out (Closed)
  - MDEV-24854 Change innodb_flush_method=O_DIRECT by default (Closed)
  - MDEV-24949 Enabling idle flushing (possible regression from MDEV-23855) (Closed)
  - MDEV-25425 Useless message "If the mysqld execution user is authorized page cleaner thread priority can be changed." (Closed)
  - MDEV-26855 Enable spinning for log_sys_mutex and log_flush_order_mutex (Closed)
  - MDEV-33770 Alter operation hangs when encryption thread works on the same tablespace (Closed)
  - MDEV-14462 Confusing error message: ib_logfiles are too small for innodb_thread_concurrency=0 (Closed)
  - MDEV-15752 Possible race between DDL and accessing I_S.INNODB_TABLESPACES_ENCRYPTION (Confirmed)
  - MDEV-23399 10.5 performance regression with IO-bound tpcc (Closed)
  - MDEV-23929 innodb_flush_neighbors is not being ignored for system tablespace on SSD (Closed)
  - MDEV-25003 mtflush_service_io - workitem status is always SUCCESS even if pool flush fails (Closed)
  - MDEV-27295 MariaDB 10.5 does not do idle checkpoint (regression). Marked as fixed on 10.5.13 but it is not (Closed)

Activity

| Field | Original Value | New Value |
|---|---|---|
| Link | This issue is caused by |
| Attachment | oltp_ts_64.png [ 54065 ] | |
| Attachment | oltp_ts_128.png [ 54066 ] | |
| Attachment | oltp_ts_256.png [ 54067 ] |
| Link | This issue blocks MDEV-23756 [ MDEV-23756 ] |
| Link | This issue relates to |
| Link | This issue is caused by |
| Status | Open [ 1 ] | In Progress [ 3 ] |
| Link | This issue relates to |
| Assignee | Marko Mäkelä [ marko ] | Vladislav Vaintroub [ wlad ] |
| Status | In Progress [ 3 ] | In Review [ 10002 ] |
| Link | This issue is blocked by |
| Attachment | Screen Shot 2020-10-20 at 5.02.56 PM.png [ 54439 ] |
| Attachment | Screen Shot 2020-10-20 at 5.02.56 PM.png [ 54439 ] |
| Attachment | Screen Shot 2020-10-20 at 5.59.20 PM.png [ 54440 ] |
| Link | This issue blocks |
| Link | This issue relates to |
| Link | This issue is blocked by |
| Assignee | Vladislav Vaintroub [ wlad ] | Marko Mäkelä [ marko ] |
| issue.field.resolutiondate | 2020-10-26 16:06:16.0 | 2020-10-26 16:06:16.493 |
| Fix Version/s | 10.5.7 [ 25019 ] | |
| Fix Version/s | 10.5 [ 23123 ] | |
| Fix Version/s | 10.6 [ 24028 ] | |
| Resolution | Fixed [ 1 ] | |
| Status | In Review [ 10002 ] | Closed [ 6 ] |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Attachment | Innodb-max-dirty-pages-pct-lwm.png [ 54636 ] |
| Attachment | Innodb_io_capacity.png [ 54637 ] |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue blocks MDEV-11659 [ MDEV-11659 ] |
| Link | This issue relates to |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue blocks TODO-400 [ TODO-400 ] |
| Link | This issue blocks TODO-398 [ TODO-398 ] |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue relates to MDEV-15752 [ MDEV-15752 ] |
| Epic Link | MDEV-26620 [ 102791 ] |
| Link | This issue causes |
| Link | This issue relates to |
| Workflow | MariaDB v3 [ 114047 ] | MariaDB v4 [ 158419 ] |
| Link | This issue relates to |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue relates to MENT-1726 [ MENT-1726 ] |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue causes |
| Link | This issue relates to |

The problem can be seen with MariaDB 10.5.3 too. It happens only at higher thread counts. Up to 64 threads, everything is fine. With 128 threads, the first small oscillations appear. With 256 threads, everything goes down the drain.