Details

- New Feature
- Status: In Progress
- Critical
- Resolution: Unresolved
- None
- Q1/2026 Server Maintenance

Description

The purpose of this work is to improve the current backup situation by implementing a SQL BACKUP command.

Interface between client and server

The BACKUP command sends file segments to the client as a result set over the MySQL protocol, i.e. the client issues a BACKUP statement and the server sends a result set back.

The result set consists of the following fields:

- VARCHAR(N) filename

- INT(SET?) flags (OPEN, CLOSE, SPARSE, RENAME, DELETE, etc.)

- BIGINT offset

- LONGBLOB data
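The four fields above can be modelled on the client side as a small record type. The following is only a sketch: the flag bit values, the names `BackupFlag`, `BackupRow` and `rows_for_file`, and the convention that the first chunk of a file carries OPEN and the last carries CLOSE are assumptions for illustration, since the ticket names the flags but does not define their encoding.

```python
from dataclasses import dataclass
from enum import IntFlag


class BackupFlag(IntFlag):
    """Hypothetical bit values; the ticket only lists the flag names."""
    OPEN = 1
    CLOSE = 2
    SPARSE = 4
    RENAME = 8
    DELETE = 16


@dataclass(frozen=True)
class BackupRow:
    filename: str       # VARCHAR(N) filename
    flags: BackupFlag   # INT (or SET) flags
    offset: int         # BIGINT offset
    data: bytes         # LONGBLOB data


def rows_for_file(filename, content, chunk_size):
    """Split one file into rows (assumed: first row OPEN, last row CLOSE)."""
    # An empty file still produces one row so the client creates it.
    offsets = list(range(0, len(content), chunk_size)) or [0]
    for i, off in enumerate(offsets):
        flags = BackupFlag(0)
        if i == 0:
            flags |= BackupFlag.OPEN
        if i == len(offsets) - 1:
            flags |= BackupFlag.CLOSE
        yield BackupRow(filename, flags, off, content[off:off + chunk_size])
```

Carrying the offset in every row means the rows of one file do not have to arrive contiguously, which would allow an engine to interleave chunks from several files.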

The client

There will be a simple client program that issues the BACKUP statement, reads the result set from the server, and applies it to create a directory with a copy of the database. Because the client API is available (in C, .NET, Java, etc.), it should be relatively easy to write specialized backup applications for special needs (e.g. streaming to the cloud, distributing to multiple machines).
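To illustrate how little such a client needs to do, here is a minimal sketch of the "apply" step. It takes rows as plain `(filename, flags, offset, data)` tuples, which is the shape a database cursor would typically yield. The function name `apply_rows`, the flag bit values, and the meaning given to OPEN (start a fresh copy of the file) are assumptions; RENAME and SPARSE are left out because the ticket does not describe their semantics.

```python
import os

# Hypothetical flag bits; the ticket names the flags but not their values.
OPEN, CLOSE, DELETE = 1, 2, 16


def apply_rows(rows, target_dir):
    """Apply (filename, flags, offset, data) rows to target_dir."""
    handles = {}  # filename -> currently open file object
    try:
        for filename, flags, offset, data in rows:
            path = os.path.join(target_dir, filename)
            if flags & DELETE:
                fh = handles.pop(filename, None)
                if fh is not None:
                    fh.close()
                if os.path.exists(path):
                    os.remove(path)
                continue
            if flags & OPEN:
                # Assumption: OPEN starts a fresh (truncated) copy.
                os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
                stale = handles.pop(filename, None)
                if stale is not None:
                    stale.close()
                handles[filename] = open(path, "wb")
            elif filename not in handles:
                # Chunk for a file that was closed earlier: reopen in place.
                handles[filename] = open(path, "r+b")
            fh = handles[filename]
            fh.seek(offset)
            fh.write(data)
            if flags & CLOSE:
                handles.pop(filename).close()
    finally:
        # Close anything the stream left open, also on errors.
        for fh in handles.values():
            fh.close()
```

A real client would additionally have to reject file names that escape the target directory (for example ones containing `..`), since the names come from the server.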

Interface between server and storage engines

Most of the actual work is done by the storage engines. The server initiates the backup by calling the storage engine's backup_begin() function. For every file chunk, the storage engine calls the server's backup_file(filename, flag, offset, length, data) function, which streams the file chunk to the client as a result set row. When the backup is finished, the engine calls `backup_end()` so that the server knows the backup is done.

The default implementation of backup_start() enumerates the engine's own files, reads them, and calls backup_file() for every chunk read. FLUSH TABLES FOR BACKUP must be issued for that.
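The control flow of such a default implementation can be modelled in a few lines. This is a Python model of the callback protocol, not server code: the real interface would live in the server's C/C++ handler layer, and the function name `default_backup`, the flag values, the chunk size and the flat directory layout are all assumptions made for the sketch. It presumes the files do not change while being read, which is what the table flush is meant to guarantee.

```python
import os

OPEN, CLOSE = 1, 2  # hypothetical flag bits


def default_backup(engine_dir, backup_file, backup_end, chunk_size=1 << 20):
    """Enumerate the engine's files and report every chunk via backup_file()."""
    for name in sorted(os.listdir(engine_dir)):
        path = os.path.join(engine_dir, name)
        if not os.path.isfile(path):
            continue
        size = os.path.getsize(path)
        with open(path, "rb") as fh:
            offset = 0
            flags = OPEN
            while True:
                data = fh.read(chunk_size)
                # Last chunk: end of file reached (or nothing left to read).
                if not data or offset + len(data) >= size:
                    flags |= CLOSE
                backup_file(name, flags, offset, len(data), data)
                offset += len(data)
                if flags & CLOSE:
                    break
                flags = 0
    # Tell the server that this engine has nothing more to send.
    backup_end()
```

Passing `backup_file` and `backup_end` in as callables mirrors the division of labour described above: the engine decides what to read, the server decides how a chunk reaches the client.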

Engines that support online backup, like InnoDB, would have a much more complicated implementation.

Incremental and partial backups

Incremental/differential backups (changes since a specific backup) and partial backups (only some tables) will be supported as well, and the client-server interface does not change much for that. For incremental backup, the client application might need to invent some "patch" format, or use some standard for binary diffs (rdiff? bsdiff? xdelta?), and save diffs to be applied to the "base backup" directory later.
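One possible shape for a home-grown "patch" format is to store the incremental rows themselves, each prefixed with a fixed-size header. The sketch below is purely illustrative and not a proposed format: the header layout, the function names `write_patch`, `read_patch` and `apply_patch`, and the in-memory dictionary standing in for the base backup directory are all assumptions.

```python
import struct

# Assumed record header: name length, flags, offset, data length
# (little-endian, no padding, 18 bytes).
HEADER = struct.Struct("<HIQI")


def write_patch(rows, out):
    """Serialize (filename, flags, offset, data) rows to a binary stream."""
    for filename, flags, offset, data in rows:
        name = filename.encode("utf-8")
        out.write(HEADER.pack(len(name), flags, offset, len(data)))
        out.write(name)
        out.write(data)


def read_patch(src):
    """Yield the rows back from a stream produced by write_patch()."""
    while True:
        head = src.read(HEADER.size)
        if not head:
            return
        if len(head) != HEADER.size:
            raise ValueError("truncated patch header")
        name_len, flags, offset, data_len = HEADER.unpack(head)
        name = src.read(name_len)
        data = src.read(data_len)
        if len(name) != name_len or len(data) != data_len:
            raise ValueError("truncated patch record")
        yield name.decode("utf-8"), flags, offset, data


def apply_patch(src, files):
    """Apply a patch to `files`, a dict of filename -> bytearray (base backup)."""
    for name, _flags, offset, data in read_patch(src):
        buf = files.setdefault(name, bytearray())
        if len(buf) < offset:
            buf.extend(bytes(offset - len(buf)))  # zero-fill any gap
        buf[offset:offset + len(data)] = data
```

A format like this only records which byte ranges changed; the standard binary-diff tools mentioned above would instead compute the differences on the client side.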

Backup metadata

There is a need to store different kinds of metadata in the backup, usually the binlog position and some kind of timestamp (LSN) for incremental backup. This information can be stored in session variables available after the BACKUP command, or sent as an extra result set after the backup one (the protocol does support multiple result sets).

Restore

Unlike the current solution with mariabackup or xtrabackup, there won't be any kind of "prepare" or "restore" application.

The directory that was created when the full backup was stored should be usable for a new database.

Files from partial backups could just be copied to the destination (and maybe "IMPORT TABLESPACE", if we still support that).

There should still be some kind of "patch" application for applying incremental backups to the base directory. Depending on how our client stores incremental backups, we may be able to do without it, and users would only need third-party tools such as bsdiff, rdiff, or mspatcha.

Attachments

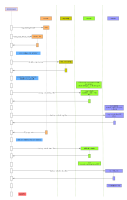

Issue Links

- includes
  - MDEV-37949 Implement innodb_log_archive (In Progress)
  - MDEV-38362 Develop an efficient alternative to mbstream (In Progress)
- is blocked by
  - MDEV-35248 in server backup: Research, scope & prototyping (Closed)
- relates to
  - MDEV-22096 Mariabackup copied too old page or too new checkpoint (Closed)
  - MDEV-23947 reflink support for mariabackup (Open)
  - MDEV-27424 mariabackup ignores physically corrupt first pages (Closed)
  - MDEV-27621 Backup fails with FATAL ERROR: Was only able to copy log from .. to .., not .. (Closed)
  - MDEV-27812 Allow innodb_log_file_size to change without server restart (Closed)
  - MDEV-28994 Backup produces garbage when using memory-mapped log (PMEM) (Closed)
  - MDEV-31166 Cleanup process for recovering from partial backup (Open)
  - MDEV-33980 mariadb-backup --backup is missing retry logic for undo tablespaces (Closed)
  - MDEV-34062 mariadb-backup --backup is extremely slow at copying ib_logfile0 (Closed)
  - MDEV-35791 mariadb-backup 10.11.10 failed to create backup (Closed)
  - MDEV-36159 mariabackup failed after upgrade 10.11.10 (Closed)
  - MDEV-5336 Implement BACKUP STAGE for safe external backups (Closed)
  - MDEV-7502 Automatic provisioning of slave (Open)
  - MDEV-13833 implement --innodb-track-changed-pages (Open)
  - MDEV-14425 Change the InnoDB redo log format to reduce write amplification (Closed)
  - MDEV-18336 Remove backup_fix_ddl() during backup (Open)
  - MDEV-18985 Remove support for XtraDB's changed page bitmap from Mariabackup in 10.2+ (Closed)
  - MDEV-19492 Mariabackup hangs if table populated with INSERT... SELECT while it runs (Stalled)
  - MDEV-19749 MDL scalability regression after backup locks (Closed)
  - MDEV-21105 Port clone plugin API (MYSQL_CLONE_PLUGIN) from MySQL (Closed)
  - MDEV-27551 mariabackup --backup aborts if a file is deleted during enumerate_ibd_files() (Open)
  - MDEV-29115 mariabackup.mdev-14447 started failing in a new way in CIs (Closed)
  - MDEV-30026 incremental backup creates broken files if there is a high load during backup (Closed)
  - MDEV-31410 mariadb-backup prepare crash with InnoDB: Missing FILE_CREATE, FILE_DELETE or FILE_MODIFY before FILE_CHECKPOINT (Closed)
  - MDEV-31446 mariabackup loop on Read redo log up to LSN (Open)
  - MDEV-33367 FATAL ERROR: <time> Was only able to copy log from <seq_no> to <seq_no>, not <seq_no>; try increasing innodb_log_file_size (Closed)
- split to
  - MDEV-35248 in server backup: Research, scope & prototyping (Closed)
