Details

- Bug
- Status: Closed
- Critical
- Resolution: Fixed
- 10.6.12, 10.8.7, 10.9.5, 10.10.3, 10.6, 10.9(EOL), 10.10(EOL), 10.11
- None

Description

In a WooCommerce install, the following table is somehow allowing duplicate entries into the uniquely indexed column on the slave, which breaks replication because the duplicate is detected on the slave side.

Table schema:

CREATE TABLE `wp_067d483e_woocommerce_sessions` (
  `session_id` bigint(20) unsigned NOT NULL AUTO_INCREMENT,
  `session_key` char(32) NOT NULL,
  `session_value` longtext NOT NULL,
  `session_expiry` bigint(20) unsigned NOT NULL,
  PRIMARY KEY (`session_id`),
  UNIQUE KEY `session_key` (`session_key`)
) ENGINE=InnoDB AUTO_INCREMENT=2642 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci;

Slave error:

Last_SQL_Error: Could not execute Write_rows_v1 event on table grao.wp_067d483e_woocommerce_sessions; Entrada duplicada '63966' para la clave 'session_key', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event's master log db1-bin.000001, end_log_pos 109407704


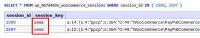

The attached screenshot shows the duplicate entry; a hex comparison confirms that both rows contain the same data.
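For anyone trying to reproduce the check, a query along the following lines could list the duplicated keys together with their hex form. This is only a sketch, not the query used for the screenshot: the `IGNORE INDEX` hint is an assumption, added so that the optimizer reads the rows through the primary key instead of relying on the possibly inconsistent `session_key` index, and the column aliases are illustrative.

```sql
-- Sketch: find session_key values stored more than once.
-- Grouping on HEX(session_key) compares the raw bytes, so the
-- case-insensitive collation cannot merge distinct keys.
-- IGNORE INDEX (assumption) keeps the suspect unique index out of the plan.
SELECT HEX(session_key) AS session_key_hex,
       COUNT(*)         AS copies,
       GROUP_CONCAT(session_id ORDER BY session_id) AS session_ids
FROM wp_067d483e_woocommerce_sessions IGNORE INDEX (session_key)
GROUP BY session_key_hex
HAVING copies > 1;
```

Any row returned means the same byte sequence is stored under more than one `session_id`, which the unique key should have prevented.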

We are not yet sure how this is happening. We have been offered access to the system; I will notify the affected person of this ticket once it is created.

I have confirmed that `unique_checks` is on.
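The ticket does not say how this was confirmed. One way to check it, shown here as an assumption rather than the reporter's method, is to read the variable at both global and session scope on each server:

```sql
-- Sketch: show the unique_checks setting at both scopes.
-- A value of 1 means uniqueness checks on secondary indexes are enabled.
SELECT @@global.unique_checks  AS global_unique_checks,
       @@session.unique_checks AS session_unique_checks;
```

The session value only reflects the connection running the query, so it says nothing about other client sessions that may have changed the setting for themselves.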


Issue Links

- is caused by
  - MDEV-29603 btr_cur_open_at_index_side() is missing some consistency checks (Closed)
- is duplicated by
  - MDEV-31713 Duplicate rows for unique index after upgrading from 10.6.11 to 10.6.14 (Closed)
- relates to
  - MDEV-27849 rpl.rpl_start_alter_7 (and 8, mysqbinlog_2) fail in buildbot, [ERROR] Slave SQL: Error during XID COMMIT: failed to update GTID state in mysql.gtid_slave_pos (Closed)
  - MDEV-31326 insert statement executed multiple times on slave (Closed)