Details

- Type: Bug
- Status: Closed
- Priority: Major
- Resolution: Fixed
- Affects Version/s: 10.5.11
- Environment: Ubuntu 20.04 LTS

Description

Hi Team,

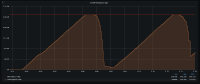

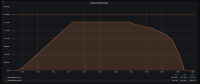

This issue is related to MDEV-25093. Adaptive flushing is not invoked after the checkpoint age crosses the configured value of innodb_adaptive_flushing_lwm. I have attached the checkpoint graph.

My setup is a 3-node Galera cluster running MariaDB 10.5.11 with the 26.4.8-focal Galera package, installed on Ubuntu 20.04 (Focal).

MDEV-25093 is supposed to be fixed in 10.5.10, but I see similar behaviour in all MariaDB versions from 10.5.7 to 10.5.11. The graph I have shared is for 10.5.11.

Below are the configs I used.

    MariaDB [(none)]> select version();
    +-------------------------------------------+
    | version()                                 |
    +-------------------------------------------+
    | 10.5.11-MariaDB-1:10.5.11+maria~focal-log |
    +-------------------------------------------+

    MariaDB [(none)]> show global variables like '%innodb_max_dirty_pages_pct%';
    +--------------------------------+-----------+
    | Variable_name                  | Value     |
    +--------------------------------+-----------+
    | innodb_max_dirty_pages_pct     | 90.000000 |
    | innodb_max_dirty_pages_pct_lwm | 10.000000 |
    +--------------------------------+-----------+

    MariaDB [(none)]> show global variables like '%innodb_adaptive_flushing%';
    +------------------------------+-----------+
    | Variable_name                | Value     |
    +------------------------------+-----------+
    | innodb_adaptive_flushing     | ON        |
    | innodb_adaptive_flushing_lwm | 10.000000 |
    +------------------------------+-----------+

    MariaDB [(none)]> show variables like '%innodb_flush%';
    +--------------------------------+----------+
    | Variable_name                  | Value    |
    +--------------------------------+----------+
    | innodb_flush_log_at_timeout    | 1        |
    | innodb_flush_log_at_trx_commit | 0        |
    | innodb_flush_method            | O_DIRECT |
    | innodb_flush_neighbors         | 1        |
    | innodb_flush_sync              | ON       |
    | innodb_flushing_avg_loops      | 30       |
    +--------------------------------+----------+

    MariaDB [(none)]> show global variables like '%io_capa%';
    +------------------------+-------+
    | Variable_name          | Value |
    +------------------------+-------+
    | innodb_io_capacity     | 3000  |
    | innodb_io_capacity_max | 5000  |
    +------------------------+-------+

I tried increasing and decreasing innodb_adaptive_flushing_lwm and innodb_max_dirty_pages_pct_lwm, but I am still facing the same issue.

I also tweaked innodb_io_capacity and innodb_io_capacity_max, with no luck. We are using NVMe disks for this setup.

Below is the status when Innodb_checkpoint_age reaches Innodb_checkpoint_max_age:

    MariaDB [(none)]> show status like 'Innodb_buffer_pool_pages%'; show status like 'Innodb_checkpoint_%';
    +-----------------------------------------+-----------+
    | Variable_name                           | Value     |
    +-----------------------------------------+-----------+
    | Innodb_buffer_pool_pages_data           | 10654068  |
    | Innodb_buffer_pool_pages_dirty          | 9425873   |
    | Innodb_buffer_pool_pages_flushed        | 100225151 |
    | Innodb_buffer_pool_pages_free           | 16726607  |
    | Innodb_buffer_pool_pages_made_not_young | 12247924  |
    | Innodb_buffer_pool_pages_made_young     | 565734067 |
    | Innodb_buffer_pool_pages_misc           | 0         |
    | Innodb_buffer_pool_pages_old            | 3932829   |
    | Innodb_buffer_pool_pages_total          | 27380675  |
    | Innodb_buffer_pool_pages_lru_flushed    | 0         |
    +-----------------------------------------+-----------+
    10 rows in set (0.000 sec)

    +---------------------------+-------------+
    | Variable_name             | Value       |
    +---------------------------+-------------+
    | Innodb_checkpoint_age     | 26090991889 |
    | Innodb_checkpoint_max_age | 26091216876 |
    +---------------------------+-------------+
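
To put the counters above in relation to the configured low water marks, here is a minimal Python sketch of the arithmetic. All numbers are copied from the output in this report. One assumption is labeled in the code: innodb_adaptive_flushing_lwm is documented as a percentage of redo log capacity, which is not shown in this report, so Innodb_checkpoint_max_age is used as a stand-in and the real percentage would be somewhat lower.

```python
# Values copied from the SHOW STATUS / SHOW VARIABLES output in this report.
pages_dirty = 9_425_873
pages_total = 27_380_675
checkpoint_age = 26_090_991_889
checkpoint_max_age = 26_091_216_876
max_dirty_pages_pct_lwm = 10.0
adaptive_flushing_lwm = 10.0

# Share of the buffer pool that is dirty.
dirty_pct = 100.0 * pages_dirty / pages_total

# ASSUMPTION: max_age stands in for the redo log capacity, which the
# report does not show. The true percentage of capacity is lower.
age_pct = 100.0 * checkpoint_age / checkpoint_max_age

# Bytes of redo left before the checkpoint age hits its maximum.
headroom_bytes = checkpoint_max_age - checkpoint_age

print(f"dirty pages:    {dirty_pct:.2f}% (lwm {max_dirty_pages_pct_lwm:.0f}%)")
print(f"checkpoint age: {age_pct:.4f}% of max age (lwm {adaptive_flushing_lwm:.0f}%)")
print(f"headroom:       {headroom_bytes} bytes")
```

Both ratios are well above the 10% low water marks, and the remaining headroom is only a few hundred kilobytes, which matches the reported symptom that flushing starts only once the checkpoint age is at its limit.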

Iostat

    Device   r/s  rkB/s rrqm/s %rrqm r_await rareq-sz      w/s      wkB/s wrqm/s %wrqm w_await wareq-sz  d/s dkB/s drqm/s %drqm d_await dareq-sz aqu-sz %util
    dm-0   28.80 460.80   0.00  0.00    0.22    16.00 30756.60  992005.60   0.00  0.00    0.04    32.25 0.00  0.00   0.00  0.00    0.00     0.00   1.27 99.68
    dm-1    0.00   0.00   0.00  0.00    0.00     0.00     0.00       0.00   0.00  0.00    0.00     0.00 0.00  0.00   0.00  0.00    0.00     0.00   0.00  0.00
    dm-2    0.00   0.00   0.00  0.00    0.00     0.00     1.80      21.60   0.00  0.00    0.00    12.00 0.00  0.00   0.00  0.00    0.00     0.00   0.00  0.48
    dm-3    0.00   0.00   0.00  0.00    0.00     0.00    13.60   31140.80   0.00  0.00    1.29  2289.76 0.00  0.00   0.00  0.00    0.00     0.00   0.02  6.32
    sda     0.00   0.00   0.00  0.00    0.00     0.00     0.80      27.20   6.00 88.24    0.25    34.00 0.00  0.00   0.00  0.00    0.00     0.00   0.00  0.24
    sdb     0.00   0.00   0.00  0.00    0.00     0.00     0.00       0.00   0.00  0.00    0.00     0.00 0.00  0.00   0.00  0.00    0.00     0.00   0.00  0.00
    sdc     0.00   0.00   0.00  0.00    0.00     0.00     0.00       0.00   0.00  0.00    0.00     0.00 0.00  0.00   0.00  0.00    0.00     0.00   0.00  0.00
    sfd0n1 28.80 460.80   0.00  0.00    0.29    16.00 32618.60 1023168.00   0.00  0.00    0.05    31.37 0.00  0.00   0.00  0.00    0.00     0.00   0.16 99.68
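
As a rough cross-check of the sfd0n1 row against the configured innodb_io_capacity_max, the write rate can be converted into page-sized units. This is only a sketch under two assumptions that are not stated in the report: the default 16 KiB innodb_page_size, and that every write on the device is a data page write. Doublewrite and redo log traffic share the device, so the result is an upper bound.

```python
# Values copied from the sfd0n1 row of the iostat output and from the
# innodb_io_capacity_max setting shown in this report.
writes_per_s = 32618.60
write_kb_per_s = 1023168.00
io_capacity_max = 5000

# ASSUMPTION: default innodb_page_size of 16 KiB (not shown in the report).
PAGE_KIB = 16

# Average write request size; should agree with the wareq-sz column (31.37).
avg_write_kib = write_kb_per_s / writes_per_s

# Upper bound on pages written per second, treating all writes as page writes.
pages_per_s = write_kb_per_s / PAGE_KIB

# How many times the configured maximum this upper bound represents.
ratio = pages_per_s / io_capacity_max

print(f"average write size: {avg_write_kib:.2f} KiB")
print(f"page-sized writes:  {pages_per_s:.0f}/s")
print(f"relative to innodb_io_capacity_max: {ratio:.1f}x")
```

Even if half of the traffic were doublewrite copies, the rate would still be several times innodb_io_capacity_max, which fits the burst of flushing described in this report.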

QPS around the issue

    [ 1252s ] thds: 256 tps: 69863.98 qps: 69863.98 (r/w/o: 0.00/49843.99/20019.99) lat (ms,99%): 5.88 err/s: 0.00 reconn/s: 0.00
    [ 1253s ] thds: 256 tps: 20750.41 qps: 20750.41 (r/w/o: 0.00/14817.86/5932.55) lat (ms,99%): 6.09 err/s: 0.00 reconn/s: 0.00
    [ 1254s ] thds: 256 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,99%): 0.00 err/s: 0.00 reconn/s: 0.00
    [ 1255s ] thds: 256 tps: 58797.66 qps: 58797.66 (r/w/o: 0.00/41615.64/17182.02) lat (ms,99%): 7.98 err/s: 0.00 reconn/s: 0.00
    [ 1256s ] thds: 256 tps: 67527.27 qps: 67527.27 (r/w/o: 0.00/48173.05/19354.22) lat (ms,99%): 7.43 err/s: 0.00 reconn/s: 0.00
    [ 1257s ] thds: 256 tps: 58384.64 qps: 58384.64 (r/w/o: 0.00/41503.59/16881.05) lat (ms,99%): 7.43 err/s: 0.00 reconn/s: 0.00

    [ 1273s ] thds: 256 tps: 69908.70 qps: 69908.70 (r/w/o: 0.00/49996.07/19912.63) lat (ms,99%): 6.09 err/s: 0.00 reconn/s: 0.00
    [ 1274s ] thds: 256 tps: 43327.15 qps: 43327.15 (r/w/o: 0.00/30641.10/12686.04) lat (ms,99%): 167.44 err/s: 0.00 reconn/s: 0.00
    [ 1275s ] thds: 256 tps: 32849.45 qps: 32849.45 (r/w/o: 0.00/23436.04/9413.42) lat (ms,99%): 118.92 err/s: 0.00 reconn/s: 0.00
    [ 1276s ] thds: 256 tps: 38600.51 qps: 38600.51 (r/w/o: 0.00/27316.24/11284.27) lat (ms,99%): 223.34 err/s: 0.00 reconn/s: 0.00
    [ 1277s ] thds: 256 tps: 41558.22 qps: 41558.22 (r/w/o: 0.00/29635.58/11922.64) lat (ms,99%): 15.83 err/s: 0.00 reconn/s: 0.00
    [ 1278s ] thds: 256 tps: 49127.92 qps: 49127.92 (r/w/o: 0.00/35045.52/14082.40) lat (ms,99%): 183.21 err/s: 0.00 reconn/s: 0.00
    [ 1279s ] thds: 256 tps: 47763.57 qps: 47763.57 (r/w/o: 0.00/33943.82/13819.74) lat (ms,99%): 15.27 err/s: 0.00 reconn/s: 0.00
    [ 1280s ] thds: 256 tps: 41276.96 qps: 41276.96 (r/w/o: 0.00/29521.97/11754.99) lat (ms,99%): 164.45 err/s: 0.00 reconn/s: 0.00
    [ 1281s ] thds: 256 tps: 40896.95 qps: 40896.95 (r/w/o: 0.00/29010.55/11886.41) lat (ms,99%): 164.45 err/s: 0.00 reconn/s: 0.00
    [ 1282s ] thds: 256 tps: 51294.76 qps: 51294.76 (r/w/o: 0.00/36633.83/14660.93) lat (ms,99%): 16.12 err/s: 0.00 reconn/s: 0.00
    [ 1283s ] thds: 256 tps: 36653.38 qps: 36653.38 (r/w/o: 0.00/26246.55/10406.82) lat (ms,99%): 227.40 err/s: 0.00 reconn/s: 0.00
    [ 1284s ] thds: 256 tps: 47463.39 qps: 47463.39 (r/w/o: 0.00/33757.41/13705.98) lat (ms,99%): 8.43 err/s: 0.00 reconn/s: 0.00
    [ 1285s ] thds: 256 tps: 44322.09 qps: 44322.09 (r/w/o: 0.00/31595.92/12726.16) lat (ms,99%): 176.73 err/s: 0.00 reconn/s: 0.00
    [ 1286s ] thds: 256 tps: 45233.40 qps: 45233.40 (r/w/o: 0.00/32176.58/13056.83) lat (ms,99%): 139.85 err/s: 0.00 reconn/s: 0.00
    [ 1287s ] thds: 256 tps: 43981.65 qps: 43981.65 (r/w/o: 0.00/31424.61/12557.04) lat (ms,99%): 137.35 err/s: 0.00 reconn/s: 0.00
    [ 1288s ] thds: 256 tps: 45362.25 qps: 45362.25 (r/w/o: 0.00/32337.89/13024.36) lat (ms,99%): 164.45 err/s: 0.00 reconn/s: 0.00
    [ 1289s ] thds: 256 tps: 47941.21 qps: 47941.21 (r/w/o: 0.00/34073.42/13867.80) lat (ms,99%): 15.55 err/s: 0.00 reconn/s: 0.00
    [ 1290s ] thds: 256 tps: 48869.94 qps: 48869.94 (r/w/o: 0.00/34709.96/14159.98) lat (ms,99%): 10.09 err/s: 0.00 reconn/s: 0.00
    [ 1291s ] thds: 256 tps: 46091.53 qps: 46091.53 (r/w/o: 0.00/32806.82/13284.71) lat (ms,99%): 10.09 err/s: 0.00 reconn/s: 0.00
    [ 1292s ] thds: 256 tps: 46741.92 qps: 46741.92 (r/w/o: 0.00/33258.23/13483.69) lat (ms,99%): 73.13 err/s: 0.00 reconn/s: 0.00
    [ 1293s ] thds: 256 tps: 49509.12 qps: 49509.12 (r/w/o: 0.00/35481.93/14027.18) lat (ms,99%): 66.84 err/s: 0.00 reconn/s: 0.00
    [ 1294s ] thds: 256 tps: 43450.71 qps: 43450.71 (r/w/o: 0.00/30950.49/12500.22) lat (ms,99%): 15.83 err/s: 0.00 reconn/s: 0.00
    [ 1295s ] thds: 256 tps: 48791.38 qps: 48791.38 (r/w/o: 0.00/34645.01/14146.37) lat (ms,99%): 183.21 err/s: 0.00 reconn/s: 0.00
    [ 1296s ] thds: 256 tps: 42562.93 qps: 42562.93 (r/w/o: 0.00/30257.95/12304.98) lat (ms,99%): 16.41 err/s: 0.00 reconn/s: 0.00
    [ 1297s ] thds: 256 tps: 65641.78 qps: 65641.78 (r/w/o: 0.00/46758.54/18883.24) lat (ms,99%): 7.56 err/s: 0.00 reconn/s: 0.00
    [ 1298s ] thds: 256 tps: 38736.94 qps: 38736.94 (r/w/o: 0.00/27624.96/11111.98) lat (ms,99%): 15.55 err/s: 0.00 reconn/s: 0.00
    [ 1299s ] thds: 256 tps: 51147.80 qps: 51147.80 (r/w/o: 0.00/36503.14/14644.66) lat (ms,99%): 16.12 err/s: 0.00 reconn/s: 0.00
    [ 1300s ] thds: 256 tps: 34908.85 qps: 34908.85 (r/w/o: 0.00/24826.60/10082.24) lat (ms,99%): 16.12 err/s: 0.00 reconn/s: 0.00
    [ 1301s ] thds: 256 tps: 60257.94 qps: 60257.94 (r/w/o: 0.00/42931.96/17325.98) lat (ms,99%): 13.46 err/s: 0.00 reconn/s: 0.00
    [ 1302s ] thds: 256 tps: 44607.33 qps: 44607.33 (r/w/o: 0.00/31644.10/12963.22) lat (ms,99%): 179.94 err/s: 0.00 reconn/s: 0.00
    [ 1303s ] thds: 256 tps: 52189.03 qps: 52189.03 (r/w/o: 0.00/37171.75/15017.28) lat (ms,99%): 7.43 err/s: 0.00 reconn/s: 0.00

    [ 1319s ] thds: 256 tps: 67309.85 qps: 67309.85 (r/w/o: 0.00/47982.61/19327.24) lat (ms,99%): 9.39 err/s: 0.00 reconn/s: 0.00
    [ 1320s ] thds: 256 tps: 6857.52 qps: 6857.52 (r/w/o: 0.00/4928.37/1929.15) lat (ms,99%): 189.93 err/s: 0.00 reconn/s: 0.00
    [ 1321s ] thds: 256 tps: 6017.51 qps: 6017.51 (r/w/o: 0.00/4291.65/1725.86) lat (ms,99%): 1618.78 err/s: 0.00 reconn/s: 0.00
    [ 1322s ] thds: 256 tps: 64763.63 qps: 64763.63 (r/w/o: 0.00/46201.32/18562.32) lat (ms,99%): 7.98 err/s: 0.00 reconn/s: 0.00

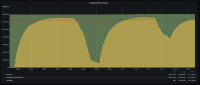

QPS dips and disk utilization increases when MariaDB starts furious flushing.
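
One way to pick the stall seconds out of a longer benchmark log is to parse the per-second report lines and flag those below a throughput floor. The sketch below uses a handful of lines copied from the output in this report. The 10000 tps floor and the helper names `parse_report` and `find_stalls` are hypothetical choices for illustration, not part of any benchmark tool.

```python
import re

# A few per-second lines copied from the benchmark output in this report.
SAMPLE = """\
[ 1252s ] thds: 256 tps: 69863.98 qps: 69863.98 (r/w/o: 0.00/49843.99/20019.99) lat (ms,99%): 5.88 err/s: 0.00 reconn/s: 0.00
[ 1253s ] thds: 256 tps: 20750.41 qps: 20750.41 (r/w/o: 0.00/14817.86/5932.55) lat (ms,99%): 6.09 err/s: 0.00 reconn/s: 0.00
[ 1254s ] thds: 256 tps: 0.00 qps: 0.00 (r/w/o: 0.00/0.00/0.00) lat (ms,99%): 0.00 err/s: 0.00 reconn/s: 0.00
[ 1255s ] thds: 256 tps: 58797.66 qps: 58797.66 (r/w/o: 0.00/41615.64/17182.02) lat (ms,99%): 7.98 err/s: 0.00 reconn/s: 0.00
[ 1320s ] thds: 256 tps: 6857.52 qps: 6857.52 (r/w/o: 0.00/4928.37/1929.15) lat (ms,99%): 189.93 err/s: 0.00 reconn/s: 0.00
[ 1321s ] thds: 256 tps: 6017.51 qps: 6017.51 (r/w/o: 0.00/4291.65/1725.86) lat (ms,99%): 1618.78 err/s: 0.00 reconn/s: 0.00
[ 1322s ] thds: 256 tps: 64763.63 qps: 64763.63 (r/w/o: 0.00/46201.32/18562.32) lat (ms,99%): 7.98 err/s: 0.00 reconn/s: 0.00
"""

# Captures the elapsed second, the tps value, and the 99th percentile latency.
LINE_RE = re.compile(
    r"\[\s*(\d+)s\s*\]\s+thds:\s+\d+\s+tps:\s+([\d.]+)"
    r".*?lat \(ms,99%\):\s+([\d.]+)"
)


def parse_report(text):
    """Return (second, tps, p99_ms) tuples for every line that matches."""
    rows = []
    for line in text.splitlines():
        match = LINE_RE.search(line)
        if match:
            rows.append(
                (int(match.group(1)), float(match.group(2)), float(match.group(3)))
            )
    return rows


def find_stalls(rows, tps_floor=10000.0):
    """Return rows whose tps is below tps_floor (a hypothetical threshold)."""
    return [row for row in rows if row[1] < tps_floor]


rows = parse_report(SAMPLE)
stalls = find_stalls(rows)
for second, tps, p99_ms in stalls:
    print(f"{second}s: tps={tps:.2f} p99={p99_ms:.2f} ms")
```

Lining up the flagged seconds with the checkpoint graph and the iostat samples makes it easier to confirm that each dip coincides with a flushing burst.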


Issue Links

- blocks
  - MDEV-26827 Make page flushing even faster (Closed)
  - MDEV-33053 InnoDB LRU flushing does not run before running out of buffer pool (Closed)
- causes
  - MDEV-31939 Adaptive flush recommendation ignores dirty ratio and checkpoint age (Closed)
  - MDEV-32861 InnoDB hangs when running out of I/O slots (Closed)
  - MDEV-37946 Deadlock when reading an encrypted page during SET GLOBAL innodb_read_reads or innodb_write_threads (Open)
- is caused by
  - MDEV-24369 Page cleaner sleeps indefinitely despite innodb_max_dirty_pages_pct_lwm being exceeded (Closed)
- relates to
  - MDEV-6932 Enable Lazy Flushing (Closed)
  - MDEV-31956 SSD based InnoDB buffer pool extension (In Testing)
  - MDEV-25093 Adaptive flushing fails to kick in even if innodb_adaptive_flushing_lwm is hit. (possible regression) (Closed)
  - MDEV-26004 Excessive wait times in buf_LRU_get_free_block() (Closed)
  - MDEV-29967 innodb_read_ahead_threshold (linear read-ahead) does not work (Closed)
  - MDEV-32681 Test case innodb.undo_truncate_recover frequent failure (Closed)
  - MDEV-33656 MariaDB unresponsive and in error log file --Thread 139894688810752 has waited at srv0srv.cc line 1554 (Closed)