Details

- Bug
- Status: Open
- Major
- Resolution: Unresolved
- 10.4.31, 10.5, 10.6, 10.7(EOL), 10.8(EOL), 10.9(EOL), 10.10(EOL), 10.11, 11.0(EOL), 11.1(EOL), 11.2(EOL)
- None
- Ubuntu 20.04

Description

I am trying to troubleshoot an issue on a Magento 2 website. At random times MariaDB appears to enter what looks like a deadlock and gradually stops serving requests, until every query is stuck in a waiting state. Eventually some queries time out, I receive "lock wait timeout exceeded" notifications, and the remaining queries finish executing.
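To make the "every query is stuck in a waiting state" symptom easier to quantify, the process list can be summarised by thread state. The following is only a minimal sketch: it assumes the text was produced by `mysql --batch -e "SHOW FULL PROCESSLIST"` (tab-separated, with a header row), and the function name `summarise_processlist` is a hypothetical helper, not part of any MariaDB tool.

```python
import csv
import io
from collections import Counter
from typing import Dict, List, Tuple


def summarise_processlist(batch_output: str) -> Tuple[Counter, List[Dict[str, str]]]:
    """Count non-sleeping threads per State and return the five longest-running ones.

    Assumes tab-separated `mysql --batch` output of SHOW FULL PROCESSLIST.
    """
    reader = csv.DictReader(
        io.StringIO(batch_output), delimiter="\t", quoting=csv.QUOTE_NONE
    )
    # Idle connections are not interesting when looking for stuck queries.
    rows = [row for row in reader if row.get("Command") != "Sleep"]

    def seconds(row: Dict[str, str]) -> int:
        # Time may be missing or non-numeric for some threads; treat that as 0.
        value = row.get("Time") or "0"
        return int(value) if value.isdigit() else 0

    states = Counter(row.get("State") or "(none)" for row in rows)
    longest = sorted(rows, key=seconds, reverse=True)[:5]
    return states, longest
```

A state counter dominated by a single value during an incident, together with the `Info` column of the longest-running rows, would narrow down which statement the other sessions are queuing behind.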

I'm trying to work out what is happening and how to solve it, but I'm stuck and need help understanding the cause. I asked on dba.stackexchange.com, where it was suggested that I open a bug report. You can see the full thread here

There is also some extra information about the issue I'm encountering here:

https://github.com/magento/magento2/issues/36667

Here's the current config

~$ my_print_defaults --mysqld

--user=mysql

--pid-file=/run/mysqld/mysqld.pid

--socket=/run/mysqld/mysqld.sock

--basedir=/usr

--datadir=/var/lib/mysql

--tmpdir=/tmp

--lc-messages-dir=/usr/share/mysql

--bind-address=0.0.0.0

--key_buffer_size=64M

--max_allowed_packet=1G

--max_connections=300

--query_cache_size=0

--log_error=/var/log/mysql/error.log

--expire_logs_days=10

--character-set-server=utf8mb4

--collation-server=utf8mb4_general_ci

--query_cache_size=0

--query_cache_type=0

--query_cache_limit=0

--join_buffer_size=512K

--tmp_table_size=128M

--max_heap_table_size=128M

--innodb_buffer_pool_size=32G

--innodb_buffer_pool_instances=16

--innodb_log_file_size=4G

--optimizer_use_condition_selectivity=1

--optimizer_switch=optimize_join_buffer_size=on

--in_predicate_conversion_threshold=4294967295

--innodb_data_home_dir=/var/lib/mysql/

--innodb_data_file_path=ibdata1:10M:autoextend

--innodb_log_group_home_dir=/var/lib/mysql/

--innodb_lock_wait_timeout=50

--innodb_file_per_table=1

--innodb_log_buffer_size=4M

--performance_schema

--optimizer_switch=rowid_filter=off

--optimizer_use_condition_selectivity=1

--optimizer_search_depth=0

--slow_query_log

--slow_query_log_file=/var/log/mysql/mariadb-slow.log

--long_query_time=20.0

--innodb_print_all_deadlocks=1

--lock_wait_timeout=240

--bind-address=0.0.0.0

--sql_mode=

--local-infile=0

--innodb_open_files=4000

--table_open_cache=3000

--table_definition_cache=3000

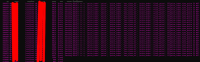

I'm attaching a screenshot of what I see in mytop during an occurrence, along with the results of

1. the full process list,

2. the mariadb full backtrace of all running threads and

3. the result of `show engine innodb status`

However, I wasn't able to capture them all at the same moment, because I have to be connected while the issue is happening and its duration varies: sometimes things resolve within a minute, sometimes within 10 minutes.

So the attachments may contain somewhat different queries, but I'm hoping we can still find the reason for this issue.
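Since the three diagnostics were taken at different times, one option is to script their capture so they come from the same incident. The sketch below is an assumption-laden example rather than a recommended procedure: it assumes the `mysql` client can log in without prompting (for example via `~/.my.cnf`), that `gdb` is installed and allowed to attach to the server, and that the pid file is the one shown in the configuration above. The names `build_commands` and `collect_snapshot` are hypothetical.

```python
import subprocess
import time
from pathlib import Path
from typing import Dict, List

# Assumption: taken from the --pid-file option in the configuration above.
PID_FILE = Path("/run/mysqld/mysqld.pid")


def build_commands(pid: int) -> Dict[str, List[str]]:
    """Return the diagnostic commands, keyed by output file stem.

    The SQL captures come first because gdb pauses the server while attached.
    """
    return {
        "processlist": ["mysql", "--batch", "-e", "SHOW FULL PROCESSLIST"],
        "innodb_status": ["mysql", "-e", "SHOW ENGINE INNODB STATUS\\G"],
        "backtrace": ["gdb", "-p", str(pid), "-batch",
                      "-ex", "thread apply all bt full"],
    }


def collect_snapshot(out_dir: Path, pid: int, timeout: float = 120.0) -> Path:
    """Run each command in turn and store its output in a timestamped directory."""
    target = out_dir / time.strftime("%Y%m%d-%H%M%S")
    target.mkdir(parents=True, exist_ok=True)
    for name, cmd in build_commands(pid).items():
        # Append mode keeps the failure note after anything the command wrote.
        with open(target / f"{name}.txt", "a") as fh:
            try:
                subprocess.run(cmd, stdout=fh, stderr=subprocess.STDOUT,
                               timeout=timeout, check=False)
            except (OSError, subprocess.TimeoutExpired) as exc:
                fh.write(f"\n[capture failed: {exc}]\n")
    return target
```

Calling `collect_snapshot(Path("/tmp/snapshots"), int(PID_FILE.read_text()))` as soon as an incident is noticed (the output directory here is an arbitrary choice) would leave three files taken within seconds of each other. Note that attaching gdb stops the server for the duration of the backtrace, so it adds a short stall of its own.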

Attachments

Issue Links

- relates to: MDEV-14486 InnoDB hang on shutdown (Closed)

It seems the optimizer is at fault for this particular query. For some reason it sometimes plans the query perfectly well, limiting the rows properly, and sometimes chooses a plan that takes ages to finish.

After removing the double join on is_salable, the optimizer seems to plan the query without issues. However, we sometimes need to join the same table twice with different conditions, so I'm not sure why this should be a problem.

Any ideas why it picks different plans for the same query randomly?
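One way to confirm that the plan really changes between runs, rather than only the timing, is to record the `EXPLAIN` output each time and compare its structure. The following is a rough sketch: it assumes each `EXPLAIN` result has been fetched as a list of dictionaries keyed by MariaDB's tabular `EXPLAIN` column names, and `plan_fingerprint` and `plans_differ` are hypothetical helper names.

```python
from typing import Dict, Iterable, Optional, Tuple

# Columns of the tabular EXPLAIN output that describe the shape of the plan.
# The `rows` estimate is left out on purpose: it can drift between runs even
# when the join order and chosen indexes stay the same.
SHAPE_COLUMNS = ("id", "select_type", "table", "type", "key", "ref", "Extra")

ExplainRow = Dict[str, Optional[str]]


def plan_fingerprint(explain_rows: Iterable[ExplainRow]) -> Tuple[Tuple[str, ...], ...]:
    """Reduce an EXPLAIN result to a comparable tuple; row order encodes join order."""
    return tuple(
        tuple(str(row.get(column)) for column in SHAPE_COLUMNS)
        for row in explain_rows
    )


def plans_differ(first: Iterable[ExplainRow], second: Iterable[ExplainRow]) -> bool:
    """True when join order, access type, chosen key, ref or Extra changed."""
    return plan_fingerprint(first) != plan_fingerprint(second)
```

Logging the fingerprint next to the query duration would show whether the slow executions always coincide with one particular join order or index choice.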