Details

- Bug
- Status: Confirmed
- Critical
- Resolution: Unresolved
- 10.6.12
- None

OS - Debian Bullseye

MariaDB - 10.6.12

Virtual Machine

Total Memory - 96 GB

CPUs -

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 45 bits physical, 48 bits virtual

CPU(s): 12

On-line CPU(s) list: 0-11

Thread(s) per core: 1

Core(s) per socket: 12

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 85

Model name: Intel(R) Xeon(R) Platinum 8358 CPU @ 2.60GHz

Stepping: 7

CPU MHz: 2593.905

BogoMIPS: 5187.81

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 576 KiB

L1i cache: 384 KiB

L2 cache: 15 MiB

L3 cache: 48 MiB

NUMA node0 CPU(s): 0-11


Description

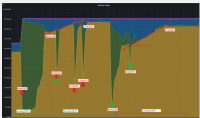

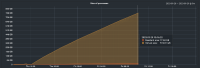

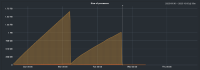

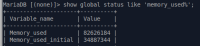

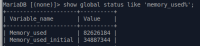

MariaDB consumes all memory available on the server and is eventually killed by the kernel OOM killer:

Mar 17 18:35:35 <server_name> kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652

Mar 17 18:35:35 <server_name> kernel: [2871555.121737] Free swap = 0kB

Mar 17 18:35:35 <server_name> kernel: [2871555.121738] Total swap = 999420kB

Mar 17 18:35:35 <server_name> kernel: [2871555.121738] 18874238 pages RAM

Mar 17 18:35:35 <server_name> kernel: [2871555.121738] 0 pages HighMem/MovableOnly

Mar 17 18:35:35 <server_name> kernel: [2871555.121739] 319662 pages reserved

Mar 17 18:35:35 <server_name> kernel: [2871555.121739] 0 pages hwpoisoned

Mar 17 18:35:35 <server_name> kernel: [2871555.121740] Tasks state (memory values in pages):

Mar 17 18:35:35 <server_name> kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Mar 17 18:35:35 <server_name> kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal

Mar 17 18:35:35 <server_name> kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd

Mar 17 18:35:35 <server_name> kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService

Mar 17 18:35:35 <server_name> kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd

Mar 17 18:35:35 <server_name> kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon

Mar 17 18:35:35 <server_name> kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node

Mar 17 18:35:35 <server_name> kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd

Mar 17 18:35:35 <server_name> kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd

Mar 17 18:35:35 <server_name> kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind

Mar 17 18:35:35 <server_name> kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd

Mar 17 18:35:35 <server_name> kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr

Mar 17 18:35:35 <server_name> kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd

Mar 17 18:35:35 <server_name> kernel: [2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd

Mar 17 18:35:35 <server_name> kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron

Mar 17 18:35:35 <server_name> kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe

Mar 17 18:35:35 <server_name> kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd

Mar 17 18:35:35 <server_name> kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty

Mar 17 18:35:35 <server_name> kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd

Mar 17 18:35:35 <server_name> kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be

Mar 17 18:35:35 <server_name> kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss

Mar 17 18:35:35 <server_name> kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam

Mar 17 18:35:35 <server_name> kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac

Mar 17 18:35:35 <server_name> kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent

Mar 17 18:35:35 <server_name> kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter

Mar 17 18:35:35 <server_name> kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter

Mar 17 18:35:35 <server_name> kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent

Mar 17 18:35:35 <server_name> kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd

Mar 17 18:35:35 <server_name> kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam)

Mar 17 18:35:35 <server_name> kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su

Mar 17 18:35:35 <server_name> kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh

Mar 17 18:35:35 <server_name> kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd

Mar 17 18:35:35 <server_name> kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam)

Mar 17 18:35:35 <server_name> kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su

Mar 17 18:35:35 <server_name> kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd

Mar 17 18:35:35 <server_name> kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam)

Mar 17 18:35:35 <server_name> kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 81920 131 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su

Mar 17 18:35:35 <server_name> kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail

Mar 17 18:35:35 <server_name> kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd

Mar 17 18:35:35 <server_name> kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd

Mar 17 18:35:35 <server_name> kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121834] [2529349] 85018827 2529349 9670 215 73728 3 0 sudo

Mar 17 18:35:35 <server_name> kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su

Mar 17 18:35:35 <server_name> kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash

Mar 17 18:35:35 <server_name> kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top

Mar 17 18:35:35 <server_name> kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe

Mar 17 18:35:35 <server_name> kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe

Mar 17 18:35:35 <server_name> kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi

Mar 17 18:35:35 <server_name> kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi

Mar 17 18:35:35 <server_name> kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq

Mar 17 18:35:35 <server_name> kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep

Mar 17 18:35:35 <server_name> kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk

Mar 17 18:35:35 <server_name> kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron

Mar 17 18:35:35 <server_name> kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe

Mar 17 18:35:35 <server_name> kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe

Mar 17 18:35:35 <server_name> kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113

Mar 17 19:25:37 <server_name> rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. [v8.2102.0 try https://www.rsyslog.com/e/2007 ]

Mar 17 19:25:37 <server_name> rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ]
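
The task table in the kernel log reports memory in pages, which makes it hard to compare against the configured sizes. The following is a minimal sketch, not part of the original report, that parses a few rows copied from the log above and converts them to GiB. It assumes a 4 KiB page size (the usual value on x86_64 Linux, but not stated in the log); `PAGE_SIZE`, `TASK_ROW`, `parse_task` and `pages_to_gib` are illustrative names.

```python
import re

PAGE_SIZE = 4096  # bytes; ASSUMPTION: 4 KiB pages, not stated in the log

# One row of the "Tasks state" table:
# [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name
TASK_ROW = re.compile(
    r"\[\s*(?P<pid>\d+)\]\s+(?P<uid>\d+)\s+(?P<tgid>\d+)\s+"
    r"(?P<total_vm>\d+)\s+(?P<rss>\d+)\s+(?P<pgtables_bytes>\d+)\s+"
    r"(?P<swapents>\d+)\s+(?P<oom_score_adj>-?\d+)\s+(?P<name>\S+)\s*$"
)


def pages_to_gib(pages: int) -> float:
    """Convert a page count to GiB under the assumed page size."""
    return pages * PAGE_SIZE / 2**30


def parse_task(line: str):
    """Return the task row as a dict, or None if the line is not a task row."""
    match = TASK_ROW.search(line)
    if match is None:
        return None
    row = match.groupdict()
    # Every column except the process name is an integer.
    return {k: (v if k == "name" else int(v)) for k, v in row.items()}


# Three rows copied verbatim from the log above.
sample_lines = [
    "Mar 17 18:35:35 <server_name> kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent",
    "Mar 17 18:35:35 <server_name> kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent",
    "Mar 17 18:35:35 <server_name> kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd",
]

tasks = [t for t in map(parse_task, sample_lines) if t is not None]
top = max(tasks, key=lambda t: t["rss"])  # largest resident set

total_ram_pages = 18874238  # from the "pages RAM" line of the log

print(f"largest RSS: {top['name']} (pid {top['pid']})")
print(f"  rss      : {pages_to_gib(top['rss']):.2f} GiB")
print(f"  swapents : {pages_to_gib(top['swapents']):.2f} GiB")
print(f"total RAM  : {pages_to_gib(total_ram_pages):.2f} GiB")
```

Under the 4 KiB assumption, the `mariadbd` resident set comes to roughly 69.7 GiB against about 72 GiB of RAM reported by the kernel at the time of the kill.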

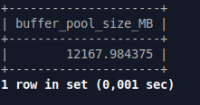

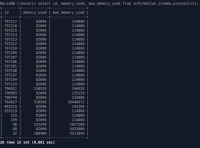

*Memory Allocation*

| Expression | Value |
|---|---|
| @@key_buffer_size / 1048576 | 32.0000 |
| @@query_cache_size / 1048576 | 50.0000 |
| @@innodb_buffer_pool_size / 1048576 | 78848.0000 |
| @@innodb_log_buffer_size / 1048576 | 16.0000 |
| @@max_connections | 500 |
| @@read_buffer_size / 1048576 | 0.1250 |
| @@read_rnd_buffer_size / 1048576 | 0.2500 |
| @@sort_buffer_size / 1048576 | 2.0000 |
| @@join_buffer_size / 1048576 | 0.2500 |
| @@binlog_cache_size / 1048576 | 0.0313 |
| @@thread_stack / 1048576 | 0.2852 |
| @@tmp_table_size / 1048576 | 32.0000 |
| MAX_MEMORY_GB | 94.1569 |
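
The report does not show the query that produced `MAX_MEMORY_GB`. A commonly used worst-case estimate adds the global buffers to `max_connections` times the per-connection buffers. The sketch below assumes that formula and plugs in the MiB values listed above; the grouping into global and per-connection settings and the name `max_memory_gib` are assumptions made for illustration.

```python
# Values in MiB, copied from the settings listed above
# (each was already divided by 1048576 in the report).
global_buffers_mib = {
    "key_buffer_size": 32.0,
    "query_cache_size": 50.0,
    "innodb_buffer_pool_size": 78848.0,
    "innodb_log_buffer_size": 16.0,
}

# ASSUMPTION: these are the settings multiplied by max_connections.
per_connection_mib = {
    "read_buffer_size": 0.1250,
    "read_rnd_buffer_size": 0.2500,
    "sort_buffer_size": 2.0,
    "join_buffer_size": 0.2500,
    "binlog_cache_size": 0.0313,
    "thread_stack": 0.2852,
    "tmp_table_size": 32.0,
}

max_connections = 500


def max_memory_gib(global_mib, per_conn_mib, connections):
    """Worst-case estimate: global buffers + connections * per-connection buffers."""
    total_mib = sum(global_mib.values()) + connections * sum(per_conn_mib.values())
    return total_mib / 1024


estimate = max_memory_gib(global_buffers_mib, per_connection_mib, max_connections)
print(f"global buffers     : {sum(global_buffers_mib.values()) / 1024:.2f} GiB")
print(f"per-connection x500: {max_connections * sum(per_connection_mib.values()) / 1024:.2f} GiB")
print(f"estimated maximum  : {estimate:.4f} GiB")
```

With these inputs the estimate agrees with the reported 94.1569 to within the rounding of the listed values, which suggests (but does not confirm) that the figure was derived this way. Note that this is a theoretical ceiling: it assumes every one of the 500 connections allocates all of its buffers at once.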

Attachments

Issue Links

- relates to
  - MDEV-14050 Memory not freed on memory table drop (Closed)
  - MDEV-14602 Reduce malloc()/free() usage in InnoDB (Confirmed)
  - MDEV-27785 Stored Routine overhead is high in benchmarks (Open)
  - MDEV-28820 MyISAM wrong server status flags (Closed)
  - MDEV-36543 Memory fragmentation detection (Open)
  - MDEV-36544 estimate possibility and time of memory fragmentation detection (Open)
  - MDEV-14959 Control over memory allocated for SP/PS (Closed)
  - MDEV-29988 Major performance regression with 10.6.11 (Closed)
  - MDEV-31127 Possible memory leak on running insert in PS mode against a table with a trigger that fires creation of a new partiton (Open)

Activity

| Field | Original Value | New Value |

|---|---|---|

| Description |

The MariaDB consumes all memory allocated on the server and eventually crashes due to OOM

Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 nynyvs113 kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 nynyvs113 kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 nynyvs113 kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 nynyvs113 kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 nynyvs113 kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 nynyvs113 kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 nynyvs113 kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 nynyvs113 kernel: 
[2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 nynyvs113 kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 nynyvs113 kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 nynyvs113 kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 nynyvs113 kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 nynyvs113 kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 
nynyvs113 kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 nynyvs113 kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 
81920 131 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail Mar 17 18:35:35 nynyvs113 kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121834] [2529349] 85018827 2529349 9670 
215 73728 3 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top Mar 17 18:35:35 nynyvs113 kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq Mar 17 18:35:35 nynyvs113 kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep Mar 17 18:35:35 nynyvs113 kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk Mar 17 18:35:35 nynyvs113 kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113 Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. [v8.2102.0 try https://www.rsyslog.com/e/2007 ] Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ] Memory allocation: *************************** 1. 
row *************************** @@key_buffer_size / 1048576: 32.0000 @@query_cache_size / 1048576: 50.0000 @@innodb_buffer_pool_size / 1048576: 78848.0000 @@innodb_log_buffer_size / 1048576: 16.0000 @@max_connections: 500 @@read_buffer_size / 1048576: 0.1250 @@read_rnd_buffer_size / 1048576: 0.2500 @@sort_buffer_size / 1048576: 2.0000 @@join_buffer_size / 1048576: 0.2500 @@binlog_cache_size / 1048576: 0.0313 @@thread_stack / 1048576: 0.2852 @@tmp_table_size / 1048576: 32.0000 MAX_MEMORY_GB: 94.1569 1 row in set (0.000 sec) |

The MariaDB consumes all memory allocated on the server and eventually crashes due to OOM

Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 nynyvs113 kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 nynyvs113 kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 nynyvs113 kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 nynyvs113 kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 nynyvs113 kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 nynyvs113 kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 nynyvs113 kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 nynyvs113 kernel: 
[2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 nynyvs113 kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 nynyvs113 kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 nynyvs113 kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 nynyvs113 kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 nynyvs113 kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 
nynyvs113 kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 nynyvs113 kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 
81920 131 0 sshd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121834] [2529349] 85018827 2529349 9670 215 73728 3 0 sudo
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe
Mar 17 18:35:35 nynyvs113 kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113
Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. [v8.2102.0 try https://www.rsyslog.com/e/2007 ]
Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ]

*Memory Allocation*

@@key_buffer_size / 1048576: 32.0000
@@query_cache_size / 1048576: 50.0000
@@innodb_buffer_pool_size / 1048576: 78848.0000
@@innodb_log_buffer_size / 1048576: 16.0000
@@max_connections: 500
@@read_buffer_size / 1048576: 0.1250
@@read_rnd_buffer_size / 1048576: 0.2500
@@sort_buffer_size / 1048576: 2.0000
@@join_buffer_size / 1048576: 0.2500
@@binlog_cache_size / 1048576: 0.0313
@@thread_stack / 1048576: 0.2852
@@tmp_table_size / 1048576: 32.0000
MAX_MEMORY_GB: 94.1569
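The report does not show how `MAX_MEMORY_GB` was derived. The sketch below assumes the commonly used worst-case estimate (global buffers plus `max_connections` times the per-connection buffers), which reproduces the posted figure to within rounding of the displayed values. It also converts the `mariadbd` `rss` value from the task table into GiB, assuming the usual 4 KiB page size on x86_64. The function names `max_memory_gib` and `pages_to_gib` are hypothetical helpers for illustration, not part of MariaDB or the kernel.

```python
# Values copied from the report, in MiB (each variable divided by 1048576).
global_buffers_mib = {
    "key_buffer_size": 32.0,
    "query_cache_size": 50.0,
    "innodb_buffer_pool_size": 78848.0,
    "innodb_log_buffer_size": 16.0,
}

# Buffers that can be allocated per connection, in MiB.
per_connection_mib = {
    "read_buffer_size": 0.125,
    "read_rnd_buffer_size": 0.25,
    "sort_buffer_size": 2.0,
    "join_buffer_size": 0.25,
    "binlog_cache_size": 0.0313,
    "thread_stack": 0.2852,
    "tmp_table_size": 32.0,
}

max_connections = 500


def max_memory_gib(global_mib, per_conn_mib, connections):
    """Worst-case estimate: global buffers + connections * per-connection buffers.

    Assumption: this is the formula behind MAX_MEMORY_GB in the report;
    the report itself only shows the inputs and the result.
    """
    total_mib = sum(global_mib.values()) + connections * sum(per_conn_mib.values())
    return total_mib / 1024


def pages_to_gib(pages, page_size=4096):
    """Convert a page count from the OOM task table to GiB.

    Assumption: 4 KiB pages, the default on x86_64.
    """
    return pages * page_size / 1024**3


if __name__ == "__main__":
    estimate = max_memory_gib(global_buffers_mib, per_connection_mib, max_connections)
    print(f"configured worst case: {estimate:.2f} GiB")
    # rss and swapents of mariadbd (pid 2529159) at the time of the OOM kill
    print(f"mariadbd rss:  {pages_to_gib(18261859):.2f} GiB")
    print(f"mariadbd swap: {pages_to_gib(221370):.2f} GiB")
```

Under these assumptions the configured worst case is about 94 GiB, which is close to the 96 GB the ticket lists as total memory, so the settings leave very little headroom for the operating system and other processes.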

| Description |

The MariaDB consumes all memory allocated on the server and eventually crashes due to OOM

Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 nynyvs113 kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 nynyvs113 kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 nynyvs113 kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 nynyvs113 kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 nynyvs113 kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 nynyvs113 kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 nynyvs113 kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 nynyvs113 kernel: 
[2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 nynyvs113 kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 nynyvs113 kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 nynyvs113 kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 nynyvs113 kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 nynyvs113 kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 
nynyvs113 kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 nynyvs113 kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 
81920 131 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail Mar 17 18:35:35 nynyvs113 kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121834] [2529349] 85018827 2529349 9670 
215 73728 3 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top Mar 17 18:35:35 nynyvs113 kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq Mar 17 18:35:35 nynyvs113 kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep Mar 17 18:35:35 nynyvs113 kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk Mar 17 18:35:35 nynyvs113 kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113 Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. 
[v8.2102.0 try https://www.rsyslog.com/e/2007 ] Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ] @@key_buffer_size / 1048576: 32.0000 @@query_cache_size / 1048576: 50.0000 @@innodb_buffer_pool_size / 1048576: 78848.0000 @@innodb_log_buffer_size / 1048576: 16.0000 @@max_connections: 500 @@read_buffer_size / 1048576: 0.1250 @@read_rnd_buffer_size / 1048576: 0.2500 @@sort_buffer_size / 1048576: 2.0000 @@join_buffer_size / 1048576: 0.2500 @@binlog_cache_size / 1048576: 0.0313 @@thread_stack / 1048576: 0.2852 @@tmp_table_size / 1048576: 32.0000 MAX_MEMORY_GB: 94.1569 |

The MariaDB consumes all memory allocated on the server and eventually crashes due to OOM

Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 nynyvs113 kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 nynyvs113 kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 nynyvs113 kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 nynyvs113 kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 nynyvs113 kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 nynyvs113 kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 nynyvs113 kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 nynyvs113 kernel: 
[2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 nynyvs113 kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 nynyvs113 kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 nynyvs113 kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 nynyvs113 kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 nynyvs113 kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 
nynyvs113 kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 nynyvs113 kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 
81920 131 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail Mar 17 18:35:35 nynyvs113 kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121834] [2529349] 85018827 2529349 9670 
215 73728 3 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top Mar 17 18:35:35 nynyvs113 kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq Mar 17 18:35:35 nynyvs113 kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep Mar 17 18:35:35 nynyvs113 kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk Mar 17 18:35:35 nynyvs113 kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113 Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. 
[v8.2102.0 try https://www.rsyslog.com/e/2007 ] Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ] *Memory Alloccation* @@key_buffer_size / 1048576: 32.0000 @@query_cache_size / 1048576: 50.0000 @@innodb_buffer_pool_size / 1048576: 78848.0000 @@innodb_log_buffer_size / 1048576: 16.0000 @@max_connections: 500 @@read_buffer_size / 1048576: 0.1250 @@read_rnd_buffer_size / 1048576: 0.2500 @@sort_buffer_size / 1048576: 2.0000 @@join_buffer_size / 1048576: 0.2500 @@binlog_cache_size / 1048576: 0.0313 @@thread_stack / 1048576: 0.2852 @@tmp_table_size / 1048576: 32.0000 MAX_MEMORY_GB: 94.1569 |

| Description |

The MariaDB consumes all memory allocated on the server and eventually crashes due to OOM

Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 nynyvs113 kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 nynyvs113 kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 nynyvs113 kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 nynyvs113 kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 nynyvs113 kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 nynyvs113 kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 nynyvs113 kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 nynyvs113 kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 nynyvs113 kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 nynyvs113 kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 nynyvs113 kernel: [2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 nynyvs113 kernel: 
[2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 nynyvs113 kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 nynyvs113 kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 nynyvs113 kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 nynyvs113 kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 nynyvs113 kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 nynyvs113 kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 nynyvs113 kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121787] [ 786226] 85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 
nynyvs113 kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 nynyvs113 kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121811] [2508598] 85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 nynyvs113 kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 
81920 131 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail Mar 17 18:35:35 nynyvs113 kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121830] [2529159] 113 2529159 19180640 18261859 149372928 221370 0 mariadbd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd Mar 17 18:35:35 nynyvs113 kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121834] [2529349] 85018827 2529349 9670 
215 73728 3 0 sudo Mar 17 18:35:35 nynyvs113 kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su Mar 17 18:35:35 nynyvs113 kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash Mar 17 18:35:35 nynyvs113 kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top Mar 17 18:35:35 nynyvs113 kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 nynyvs113 kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq Mar 17 18:35:35 nynyvs113 kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep Mar 17 18:35:35 nynyvs113 kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk Mar 17 18:35:35 nynyvs113 kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron Mar 17 18:35:35 nynyvs113 kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe Mar 17 18:35:35 nynyvs113 kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113 Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. 
[v8.2102.0 try https://www.rsyslog.com/e/2007 ]
Mar 17 19:25:37 nynyvs113 rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ]

*Memory Allocation*

@@key_buffer_size / 1048576: 32.0000
@@query_cache_size / 1048576: 50.0000
@@innodb_buffer_pool_size / 1048576: 78848.0000
@@innodb_log_buffer_size / 1048576: 16.0000
@@max_connections: 500
@@read_buffer_size / 1048576: 0.1250
@@read_rnd_buffer_size / 1048576: 0.2500
@@sort_buffer_size / 1048576: 2.0000
@@join_buffer_size / 1048576: 0.2500
@@binlog_cache_size / 1048576: 0.0313
@@thread_stack / 1048576: 0.2852
@@tmp_table_size / 1048576: 32.0000
MAX_MEMORY_GB: 94.1569 |

MariaDB consumes all the memory on the server and is eventually killed by the kernel OOM killer.

Mar 17 18:35:35 <server_name> kernel: [2871555.121737] Swap cache stats: add 28523916, delete 28524014, find 47249895/50419652 Mar 17 18:35:35 <server_name> kernel: [2871555.121737] Free swap = 0kB Mar 17 18:35:35 <server_name> kernel: [2871555.121738] Total swap = 999420kB Mar 17 18:35:35 <server_name> kernel: [2871555.121738] 18874238 pages RAM Mar 17 18:35:35 <server_name> kernel: [2871555.121738] 0 pages HighMem/MovableOnly Mar 17 18:35:35 <server_name> kernel: [2871555.121739] 319662 pages reserved Mar 17 18:35:35 <server_name> kernel: [2871555.121739] 0 pages hwpoisoned Mar 17 18:35:35 <server_name> kernel: [2871555.121740] Tasks state (memory values in pages): Mar 17 18:35:35 <server_name> kernel: [2871555.121740] [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name Mar 17 18:35:35 <server_name> kernel: [2871555.121747] [ 400] 0 400 41263 206 208896 604 -250 systemd-journal Mar 17 18:35:35 <server_name> kernel: [2871555.121749] [ 425] 0 425 6003 38 69632 383 -1000 systemd-udevd Mar 17 18:35:35 <server_name> kernel: [2871555.121751] [ 585] 0 585 12424 0 81920 403 0 VGAuthService Mar 17 18:35:35 <server_name> kernel: [2871555.121752] [ 586] 0 586 43443 242 94208 181 0 vmtoolsd Mar 17 18:35:35 <server_name> kernel: [2871555.121754] [ 603] 105 603 7654 181 73728 98 -900 dbus-daemon Mar 17 18:35:35 <server_name> kernel: [2871555.121755] [ 609] 112 609 549788 4955 372736 1872 0 prometheus-node Mar 17 18:35:35 <server_name> kernel: [2871555.121756] [ 611] 0 611 73164 530 102400 574 0 rsyslogd Mar 17 18:35:35 <server_name> kernel: [2871555.121758] [ 898] 0 898 807 37 32768 17 0 caagentd Mar 17 18:35:35 <server_name> kernel: [2871555.121759] [ 1720] 0 1720 6279 149 77824 153 0 systemd-logind Mar 17 18:35:35 <server_name> kernel: [2871555.121760] [ 3043] 110 3043 4705 70 77824 182 0 lldpd Mar 17 18:35:35 <server_name> kernel: [2871555.121762] [ 3050] 0 3050 27667 12 110592 2130 0 unattended-upgr Mar 17 18:35:35 <server_name> kernel: 
[2871555.121763] [ 3057] 108 3057 21943 51 69632 167 0 ntpd Mar 17 18:35:35 <server_name> kernel: [2871555.121764] [ 3058] 0 3058 3347 45 65536 201 -1000 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121765] [ 3061] 110 3061 4728 104 77824 214 0 lldpd Mar 17 18:35:35 <server_name> kernel: [2871555.121767] [ 3294] 0 3294 4472 25 61440 86 0 cron Mar 17 18:35:35 <server_name> kernel: [2871555.121768] [ 3298] 109 3298 2111 31 53248 163 -500 nrpe Mar 17 18:35:35 <server_name> kernel: [2871555.121769] [ 3304] 0 3304 1416 12 49152 75 0 atd Mar 17 18:35:35 <server_name> kernel: [2871555.121771] [ 3318] 0 3318 1987 0 45056 76 0 agetty Mar 17 18:35:35 <server_name> kernel: [2871555.121772] [ 828871] 0 828871 28669 9 176128 1035 0 sssd Mar 17 18:35:35 <server_name> kernel: [2871555.121773] [ 828872] 0 828872 38730 2757 200704 1102 0 sssd_be Mar 17 18:35:35 <server_name> kernel: [2871555.121774] [ 828873] 0 828873 33879 726 249856 787 0 sssd_nss Mar 17 18:35:35 <server_name> kernel: [2871555.121776] [ 828874] 0 828874 27486 994 200704 843 0 sssd_pam Mar 17 18:35:35 <server_name> kernel: [2871555.121777] [ 828875] 0 828875 25646 168 167936 824 0 sssd_pac Mar 17 18:35:35 <server_name> kernel: [2871555.121778] [ 828876] 0 828876 27065 669 188416 845 0 sssd_sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121779] [3867706] 0 3867706 781525 11410 1392640 8840 0 pmm-agent Mar 17 18:35:35 <server_name> kernel: [2871555.121781] [3867999] 0 3867999 182337 4007 180224 288 0 node_exporter Mar 17 18:35:35 <server_name> kernel: [2871555.121782] [4185979] 0 4185979 181211 3798 176128 148 0 mysqld_exporter Mar 17 18:35:35 <server_name> kernel: [2871555.121784] [4186027] 0 4186027 215617 39736 520192 2276 0 vmagent Mar 17 18:35:35 <server_name> kernel: [2871555.121785] [ 786172] 0 786172 12905 72 90112 284 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121786] [ 786225] 85018827 786225 3855 277 69632 87 0 systemd Mar 17 18:35:35 <server_name> kernel: [2871555.121787] [ 786226] 
85018827 786226 48988 316 106496 609 0 (sd-pam) Mar 17 18:35:35 <server_name> kernel: [2871555.121788] [ 786245] 85018827 786245 12905 99 86016 261 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121790] [ 786246] 85018827 786246 4840 1 69632 438 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121791] [ 786356] 85018827 786356 9670 2 77824 216 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121792] [ 786357] 0 786357 7678 1 69632 171 0 su Mar 17 18:35:35 <server_name> kernel: [2871555.121793] [ 786358] 0 786358 2335 93 53248 118 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121795] [1821490] 0 1821490 12906 154 90112 203 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121796] [1821502] 85018827 1821502 12906 171 86016 189 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121797] [1821503] 85018827 1821503 4840 238 65536 202 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121799] [1821609] 85018827 1821609 5423 115 69632 102 0 ssh Mar 17 18:35:35 <server_name> kernel: [2871555.121800] [2488524] 0 2488524 12915 209 86016 159 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121802] [2488532] 85018904 2488532 3856 367 77824 0 0 systemd Mar 17 18:35:35 <server_name> kernel: [2871555.121803] [2488533] 85018904 2488533 48988 576 106496 349 0 (sd-pam) Mar 17 18:35:35 <server_name> kernel: [2871555.121804] [2488551] 85018904 2488551 12955 288 86016 140 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121805] [2488552] 85018904 2488552 4840 418 65536 24 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121806] [2488812] 85018904 2488812 9681 217 65536 13 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121807] [2488813] 0 2488813 7678 40 69632 132 0 su Mar 17 18:35:35 <server_name> kernel: [2871555.121808] [2488814] 0 2488814 2310 164 57344 26 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121810] [2508581] 0 2508581 10619 168 81920 171 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121811] [2508598] 
85018916 2508598 3856 347 69632 4 0 systemd Mar 17 18:35:35 <server_name> kernel: [2871555.121812] [2508599] 85018916 2508599 49038 643 106496 285 0 (sd-pam) Mar 17 18:35:35 <server_name> kernel: [2871555.121813] [2508618] 85018916 2508618 10619 210 81920 131 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121815] [2508619] 85018916 2508619 4840 431 61440 7 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121817] [2508729] 85018916 2508729 9673 199 77824 22 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121818] [2508730] 0 2508730 2568 419 57344 22 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121819] [2508834] 0 2508834 9657 205 73728 0 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121820] [2508835] 0 2508835 2596 446 61440 0 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121821] [2522285] 0 2522285 12907 293 94208 66 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121822] [2522296] 85018827 2522296 12907 299 81920 63 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121823] [2522297] 85018827 2522297 4840 435 65536 3 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121825] [2522425] 85018827 2522425 9670 219 81920 0 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121826] [2522426] 0 2522426 7678 173 73728 0 0 su Mar 17 18:35:35 <server_name> kernel: [2871555.121827] [2522427] 0 2522427 2335 195 65536 0 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121828] [2525571] 0 2525571 1350 17 49152 0 0 tail Mar 17 18:35:35 <server_name> kernel: [2871555.121829] [2529115] 0 2529115 12906 358 94208 0 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121830] [2529159] 113 2529159 19180640 
18261859 149372928 221370 0 mariadbd Mar 17 18:35:35 <server_name> kernel: [2871555.121832] [2529169] 85018827 2529169 12906 361 86016 1 0 sshd Mar 17 18:35:35 <server_name> kernel: [2871555.121833] [2529170] 85018827 2529170 4840 370 61440 70 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121834] [2529349] 85018827 2529349 9670 215 73728 3 0 sudo Mar 17 18:35:35 <server_name> kernel: [2871555.121835] [2529350] 0 2529350 7678 172 73728 0 0 su Mar 17 18:35:35 <server_name> kernel: [2871555.121836] [2529351] 0 2529351 2310 191 53248 0 0 bash Mar 17 18:35:35 <server_name> kernel: [2871555.121838] [2549755] 0 2549755 5428 325 69632 0 0 top Mar 17 18:35:35 <server_name> kernel: [2871555.121840] [2555764] 109 2555764 2144 102 53248 107 -500 nrpe Mar 17 18:35:35 <server_name> kernel: [2871555.121841] [2555765] 109 2555765 2144 109 49152 100 -500 nrpe Mar 17 18:35:35 <server_name> kernel: [2871555.121843] [2555766] 109 2555766 955 60 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 <server_name> kernel: [2871555.121844] [2555770] 109 2555770 955 63 45056 0 -500 check_ntp_in_mi Mar 17 18:35:35 <server_name> kernel: [2871555.121845] [2555771] 109 2555771 1924 86 45056 0 -500 ntpq Mar 17 18:35:35 <server_name> kernel: [2871555.121846] [2555772] 109 2555772 811 32 45056 0 -500 grep Mar 17 18:35:35 <server_name> kernel: [2871555.121847] [2555773] 109 2555773 2057 80 61440 0 -500 awk Mar 17 18:35:35 <server_name> kernel: [2871555.121848] [2555775] 0 2555775 4669 104 61440 44 0 cron Mar 17 18:35:35 <server_name> kernel: [2871555.121849] [2555776] 109 2555776 2111 53 53248 141 -500 nrpe Mar 17 18:35:35 <server_name> kernel: [2871555.121850] [2555777] 109 2555777 2111 42 49152 152 -500 nrpe Mar 17 18:35:35 <server_name> kernel: [2871555.121851] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/system.slice/mariadb.service,task=mariadbd,pid=2529159,uid=113 Mar 17 19:25:37 <server_name> rsyslogd: action 'action-0-builtin:omfwd' 
suspended (module 'builtin:omfwd'), retry 0. There should be messages before this one giving the reason for suspension. [v8.2102.0 try https://www.rsyslog.com/e/2007 ]
Mar 17 19:25:37 <server_name> rsyslogd: action 'action-0-builtin:omfwd' resumed (module 'builtin:omfwd') [v8.2102.0 try https://www.rsyslog.com/e/2359 ]

*Memory Allocation*

@@key_buffer_size / 1048576: 32.0000
@@query_cache_size / 1048576: 50.0000
@@innodb_buffer_pool_size / 1048576: 78848.0000
@@innodb_log_buffer_size / 1048576: 16.0000
@@max_connections: 500
@@read_buffer_size / 1048576: 0.1250
@@read_rnd_buffer_size / 1048576: 0.2500
@@sort_buffer_size / 1048576: 2.0000
@@join_buffer_size / 1048576: 0.2500
@@binlog_cache_size / 1048576: 0.0313
@@thread_stack / 1048576: 0.2852
@@tmp_table_size / 1048576: 32.0000
MAX_MEMORY_GB: 94.1569 |
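For readers who want to check the arithmetic, the sketch below reproduces the MAX_MEMORY_GB figure from the memory allocation values in the description and converts two of the page counts in the OOM report to GiB. It is an illustration, not the query the reporter ran. The formula (global buffers plus max_connections times the per-connection buffers) is inferred from the reported total. The exact byte values assumed for binlog_cache_size (32 KiB) and thread_stack (292 KiB) are a guess consistent with the rounded 0.0313 and 0.2852. The 4 KiB page size is an assumption based on the x86_64 platform, and the function names are hypothetical.

```python
# Values copied from the memory allocation output in the description, in MiB.
GLOBAL_BUFFERS_MIB = {
    "key_buffer_size": 32.0,
    "query_cache_size": 50.0,
    "innodb_buffer_pool_size": 78848.0,
    "innodb_log_buffer_size": 16.0,
}

PER_CONNECTION_MIB = {
    "read_buffer_size": 0.125,
    "read_rnd_buffer_size": 0.25,
    "sort_buffer_size": 2.0,
    "join_buffer_size": 0.25,
    "binlog_cache_size": 32 / 1024,   # assumed 32 KiB; reported as 0.0313
    "thread_stack": 292 / 1024,       # assumed 292 KiB; reported as 0.2852
    "tmp_table_size": 32.0,
}

MAX_CONNECTIONS = 500
PAGE_SIZE_BYTES = 4096  # assumption: 4 KiB pages on x86_64


def max_memory_gib(global_mib, per_connection_mib, connections):
    """Worst-case estimate: global buffers + connections * per-connection buffers."""
    total_mib = sum(global_mib.values()) + connections * sum(per_connection_mib.values())
    return total_mib / 1024


def pages_to_gib(pages, page_size=PAGE_SIZE_BYTES):
    """Convert a page count from the kernel OOM report to GiB."""
    return pages * page_size / 2**30


if __name__ == "__main__":
    estimate = max_memory_gib(GLOBAL_BUFFERS_MIB, PER_CONNECTION_MIB, MAX_CONNECTIONS)
    print(f"Configured worst case: {estimate:.4f} GiB")
    # Page counts taken from the OOM report in the description.
    print(f"RAM seen by kernel:    {pages_to_gib(18874238):.2f} GiB")
    print(f"mariadbd RSS at kill:  {pages_to_gib(18261859):.2f} GiB")
```

Under these assumptions the first line reproduces the reported 94.1569. The other two lines put the configured worst case next to what the kernel recorded at the time of the kill.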

| Comment | [ [^my.cnf] [^mariadb.cnf] ] |

| Attachment | screenshot-1.png [ 69082 ] |

| Status | Open [ 1 ] | Needs Feedback [ 10501 ] |

| Assignee | Oleksandr Byelkin [ sanja ] | |

| Status | Needs Feedback [ 10501 ] | Open [ 1 ] |

| Link |

This issue relates to |

| Component/s | Stored routines [ 13905 ] |

| Fix Version/s | 10.4 [ 22408 ] | |

| Fix Version/s | 10.5 [ 23123 ] | |

| Fix Version/s | 10.6 [ 24028 ] | |

| Fix Version/s | 10.3 [ 22126 ] |

| Fix Version/s | 10.3 [ 22126 ] | |

| Fix Version/s | 10.4 [ 22408 ] | |

| Fix Version/s | 10.5 [ 23123 ] |

| Description |

MariaDB consumes all the memory on the server and is eventually killed by the kernel OOM killer.