I attached a patch, lsn_lock_is_pthread_mutex.diff, in which lsn_lock is just a pthread_mutex (with the non-portable adaptive attribute). It applies to commit bbe99cd4e2d7c83a06dd93ea88af97f2d5796810 (current 10.9).
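As a rough sketch of what such a change amounts to, assuming glibc: the adaptive type is requested through the GNU extension PTHREAD_MUTEX_ADAPTIVE_NP, which spins briefly in user space before blocking in the kernel. The names lsn_lock_init, lsn_advance and the lsn counter are hypothetical and not taken from the patch. The #ifdef guard falls back to a default mutex where the extension is unavailable.

```c
#define _GNU_SOURCE /* exposes the glibc-only adaptive mutex type */
#include <pthread.h>

/* Hypothetical stand-ins for log_sys.lsn_lock and the field it protects. */
static pthread_mutex_t lsn_lock;
static unsigned long long lsn;

/* Initialize lsn_lock; returns 0 on success or an errno value. */
static int lsn_lock_init(void)
{
  pthread_mutexattr_t attr;
  int err = pthread_mutexattr_init(&attr);
  if (err)
    return err;
#ifdef PTHREAD_ADAPTIVE_MUTEX_INITIALIZER_NP
  /* glibc: spin for a short while before sleeping on contention. */
  err = pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_ADAPTIVE_NP);
#endif
  if (!err)
    err = pthread_mutex_init(&lsn_lock, &attr);
  pthread_mutexattr_destroy(&attr);
  return err;
}

/* Reserve len bytes of log; returns the new end LSN. */
static unsigned long long lsn_advance(unsigned long long len)
{
  pthread_mutex_lock(&lsn_lock);
  unsigned long long end = lsn += len;
  pthread_mutex_unlock(&lsn_lock);
  return end;
}
```

Because pthread_mutex_t is used directly rather than through an instrumented wrapper, no PERFORMANCE_SCHEMA hooks are involved in lock or unlock.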


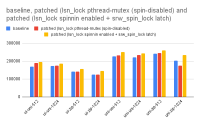

That performs better for me: 141851.87 tps without the patch vs 152514.50 tps with the patch (about 7.5% more), in a 30-second update_index run. This is on Intel; perhaps ARM can also benefit from it.

I used 1000 clients, 8 tables x 1500000 rows, a large redo log and buffer pool, and --innodb-flush-log-at-trx-commit=2 --thread-handling=pool-of-threads.

It also performs well on Windows.

Update: However, if I run this on Linux on 2 NUMA nodes, the results are not as good: 83495.47 tps without the patch vs 65638.71 tps with the patch (about 21% fewer). If you compare these with the single-NUMA-node numbers: yes, that is how NUMA performs on that box. Giving it 2x the CPUs makes the server half as fast, which is why I almost never run NUMA tests. That is an old 3.10 kernel, so things may be better on other systems.

Bug

Major

MDEV-27774 Reduce scalability bottlenecks in mtr_t::commit()

MDEV-32374 log_sys.lsn_lock is a performance hog

MDEV-33515 log_sys.lsn_lock causes excessive context switching

wlad, thank you for experimenting and benchmarking.

I checked your lsn_lock_is_pthread_mutex.diff. I am glad to see that it avoids any PERFORMANCE_SCHEMA overhead, and that sizeof(log_sys.lsn_lock) is only 40 bytes on my system. I thought the size was 44 bytes on IA-32 and 48 bytes on AMD64, but it looks like I was off by 8.


My main concern regarding the mutex size is that the data fields following the mutex should ideally be allocated in the same cache line (typically 64 bytes on IA-32 or AMD64). That is definitely the case here.
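A minimal sketch of how one might check that layout property, assuming 64-byte cache lines. The struct log_sketch and its lsn and buf_free fields are hypothetical placeholders, not MariaDB's actual log_t definition; the point is only that the mutex starts on a line boundary and the fields it protects end before the next one.

```c
#include <pthread.h>
#include <stdalign.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: 64-byte cache lines, as is typical on IA-32 and AMD64. */
#define CACHE_LINE 64

/* Hypothetical layout for illustration; this is not MariaDB's log_t. */
struct log_sketch
{
  /* Start the mutex on a cache-line boundary. */
  alignas(CACHE_LINE) pthread_mutex_t lsn_lock;
  /* Fields protected by lsn_lock; ideally in the same cache line. */
  uint64_t lsn;
  uint64_t buf_free;
};

/* Bytes spanned, from the start of the struct, by the mutex and
   the fields it protects. */
static size_t lsn_fields_bytes(void)
{
  return offsetof(struct log_sketch, buf_free) + sizeof(uint64_t);
}

/* Nonzero if the mutex and the protected fields fit in one cache line. */
static int lsn_fields_share_line(void)
{
  return lsn_fields_bytes() <= CACHE_LINE;
}
```

With glibc on AMD64, where sizeof(pthread_mutex_t) is 40, lsn_fields_bytes() is 56, so everything fits in one line. A platform with a larger mutex type would push the protected fields into the next cache line.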

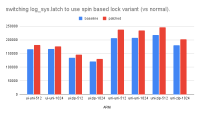

Please commit that change, as well as krunalbauskar’s pull request to enable log_sys.latch spinning on ARMv8.