Details

- Task
- Status: Closed
- Major
- Resolution: Fixed
- None

Description

When InnoDB starts up, it can be limited by innodb_io_capacity (or innodb_io_capacity_max). Currently, users can raise these settings temporarily at startup, but this is not ideal, because neither setting should be left elevated once startup has finished.
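To give a feel for why the limit matters, here is a rough back-of-the-envelope sketch. It assumes (this is an assumption for illustration, not something stated in this ticket) that the load issues at most innodb_io_capacity page reads per second and that every page of the buffer pool is reloaded. The function name and parameters are hypothetical; 16 KiB and 200 are the default innodb_page_size and innodb_io_capacity.

```python
def estimated_load_seconds(buffer_pool_bytes, io_capacity,
                           page_size=16 * 1024, dumped_fraction=1.0):
    """Rough lower bound on buffer pool load time under a reads-per-second cap.

    Assumption: at most `io_capacity` page reads are issued per second.
    `dumped_fraction` is the share of the pool that is actually reloaded.
    """
    pages = int(buffer_pool_bytes / page_size * dumped_fraction)
    return pages / io_capacity


GIB = 1024 ** 3

# A 64 GiB buffer pool holds 4,194,304 pages of 16 KiB each.
slow = estimated_load_seconds(64 * GIB, io_capacity=200)
fast = estimated_load_seconds(64 * GIB, io_capacity=20000)

print(f"io_capacity=200:   {slow / 3600:.1f} hours")
print(f"io_capacity=20000: {fast / 60:.1f} minutes")
```

Under these assumptions the default setting would stretch the load over several hours, while a hundredfold higher value brings it down to minutes, which is why users raise the setting at startup today.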

Based on the discussion at https://mariadb.zulipchat.com/#narrow/stream/118759-general/topic/InnoDB.20tuning/near/234486913, this task is to add a new parameter for use in buffer pool load (and read-ahead).


Issue Links

- is blocked by
  - MDEV-26547 Restoring InnoDB buffer pool dump is single-threaded for no reason (Closed)
- relates to
  - MDEV-29343 MariaDB 10.6.x slower mysqldump etc. (Closed)
  - MDEV-9930 Allow to choose an aggressive InnoDB buffer pool load mode (Closed)

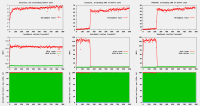

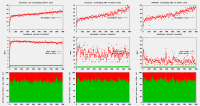

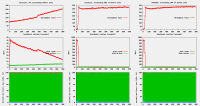

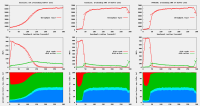

The change (removing the function buf_load_throttle_if_needed()) looks OK to me, but I think that we need to test the performance before applying this change.
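For readers unfamiliar with this kind of throttle, the following is a simplified model of a per-second read budget. It is only a sketch of the general idea, not the actual body of buf_load_throttle_if_needed(); the class and function names are hypothetical, and a simulated clock is used so that it runs instantly.

```python
class FakeClock:
    """Simulated clock so the sketch runs instantly and deterministically."""

    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.now += seconds


class LoadThrottle:
    """Hypothetical per-second read budget (not the InnoDB implementation)."""

    def __init__(self, io_capacity, clock):
        self.io_capacity = io_capacity
        self.clock = clock
        self.window_start = clock.time()
        self.reads_in_window = 0

    def after_read(self):
        self.reads_in_window += 1
        if self.reads_in_window < self.io_capacity:
            return  # budget for this window is not used up yet
        elapsed = self.clock.time() - self.window_start
        if elapsed < 1.0:
            self.clock.sleep(1.0 - elapsed)  # wait out the rest of the second
        self.window_start = self.clock.time()
        self.reads_in_window = 0


def simulate_load(pages, io_capacity, seconds_per_read=0.0):
    """Return the simulated time needed to read `pages` pages."""
    clock = FakeClock()
    throttle = LoadThrottle(io_capacity, clock)
    for _ in range(pages):
        clock.sleep(seconds_per_read)  # pretend the read itself took this long
        throttle.after_read()
    return clock.time()
```

In this model, `simulate_load(1000, 200)` returns 5.0 simulated seconds even though each read is instantaneous: the throttle alone sets the pace. When the reads are already slower than the budget (for example `seconds_per_read=0.01`), the throttle never sleeps, so removing it would mainly help on storage that can deliver more than the configured rate.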

I would not target 10.3, but rather 10.5 or 10.6. The buffer pool I/O layer works quite differently before 10.5 switched to a single buffer pool (MDEV-15058) and optimized the page cleaner thread (MDEV-23399, MDEV-23855).