Axel,

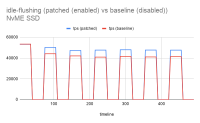

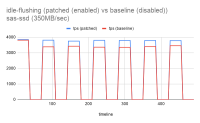

I have been investigating this issue that tends to suggest that idle-flushing slows down the select workload.

It seems like any parallel flushing in the background could have the same effect on the select workload.

Let's say we have 1000 pages with innodb_max_dirty_pages_pct_lwm = 25% that means threshold for idle flushing is 250. Now let's assume the read-write workload modifies 700 pages. On completion of read-write workload before the idle flushing kicks in, the existing algorithm will carry out adaptive flushing to flush for the range from 700-250. If we try to track select qps during this time it would be the same as that between 0-250.

Let me share an example:

Block-1: adaptive flushing

Block-2: Idle flushing

Block-3: No flushing

block-1:

[ 1s ] thds: 16 tps: 10801.51 qps: 172968.90 (r/w/o: 151349.91/0.00/21618.99) lat (ms,95%): 1.73 err/s: 0.00 reconn/s: 0.00

[ 2s ] thds: 16 tps: 10665.98 qps: 170672.69 (r/w/o: 149341.73/0.00/21330.96) lat (ms,95%): 1.79 err/s: 0.00 reconn/s: 0.00

[ 3s ] thds: 16 tps: 10361.11 qps: 165755.69 (r/w/o: 145033.48/0.00/20722.21) lat (ms,95%): 2.00 err/s: 0.00 reconn/s: 0.00

[ 4s ] thds: 16 tps: 10395.87 qps: 166338.97 (r/w/o: 145547.23/0.00/20791.75) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 5s ] thds: 16 tps: 10357.03 qps: 165701.55 (r/w/o: 144987.49/0.00/20714.07) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 6s ] thds: 16 tps: 10384.01 qps: 166172.21 (r/w/o: 145403.18/0.00/20769.03) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 7s ] thds: 16 tps: 10386.08 qps: 166167.24 (r/w/o: 145395.09/0.00/20772.16) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 8s ] thds: 16 tps: 10386.07 qps: 166160.18 (r/w/o: 145389.03/0.00/20771.15) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 9s ] thds: 16 tps: 10381.97 qps: 166159.48 (r/w/o: 145394.54/0.00/20764.93) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 10s ] thds: 16 tps: 10305.99 qps: 164843.91 (r/w/o: 144231.92/0.00/20611.99) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 11s ] thds: 16 tps: 10345.98 qps: 165590.70 (r/w/o: 144898.74/0.00/20691.96) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 12s ] thds: 16 tps: 10377.01 qps: 166040.23 (r/w/o: 145286.20/0.00/20754.03) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 13s ] thds: 16 tps: 10396.99 qps: 166285.85 (r/w/o: 145491.87/0.00/20793.98) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 14s ] thds: 16 tps: 10383.95 qps: 166176.24 (r/w/o: 145408.34/0.00/20767.91) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 15s ] thds: 16 tps: 10343.14 qps: 165495.22 (r/w/o: 144809.94/0.00/20685.28) lat (ms,95%): 1.96 err/s: 0.00 reconn/s: 0.00

==== average tps during adpative flushing: 165K-166K

block-2:

[ 16s ] thds: 16 tps: 10288.87 qps: 164613.94 (r/w/o: 144036.20/0.00/20577.74) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 17s ] thds: 16 tps: 10283.08 qps: 164521.28 (r/w/o: 143955.12/0.00/20566.16) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 18s ] thds: 16 tps: 10336.92 qps: 165395.71 (r/w/o: 144720.87/0.00/20674.84) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 19s ] thds: 16 tps: 10437.94 qps: 167011.10 (r/w/o: 146135.21/0.00/20875.89) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 20s ] thds: 16 tps: 10370.09 qps: 165904.50 (r/w/o: 145165.31/0.00/20739.19) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 21s ] thds: 16 tps: 10429.01 qps: 166865.11 (r/w/o: 146006.10/0.00/20859.01) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 22s ] thds: 16 tps: 10393.06 qps: 166281.96 (r/w/o: 145495.84/0.00/20786.12) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 23s ] thds: 16 tps: 10356.96 qps: 165759.33 (r/w/o: 145045.41/0.00/20713.92) lat (ms,95%): 1.96 err/s: 0.00 reconn/s: 0.00

[ 24s ] thds: 16 tps: 10366.98 qps: 165863.68 (r/w/o: 145129.72/0.00/20733.96) lat (ms,95%): 1.96 err/s: 0.00 reconn/s: 0.00

[ 25s ] thds: 16 tps: 10366.00 qps: 165843.99 (r/w/o: 145111.99/0.00/20732.00) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 26s ] thds: 16 tps: 10347.85 qps: 165534.55 (r/w/o: 144838.86/0.00/20695.69) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 27s ] thds: 16 tps: 10356.13 qps: 165729.08 (r/w/o: 145018.82/0.00/20710.26) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 28s ] thds: 16 tps: 10446.03 qps: 167094.52 (r/w/o: 146200.46/0.00/20894.07) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 29s ] thds: 16 tps: 10392.93 qps: 166323.91 (r/w/o: 145538.05/0.00/20785.86) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 30s ] thds: 16 tps: 10289.15 qps: 164644.36 (r/w/o: 144066.06/0.00/20578.29) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 31s ] thds: 16 tps: 10384.98 qps: 166156.68 (r/w/o: 145386.72/0.00/20769.96) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 32s ] thds: 16 tps: 10355.93 qps: 165668.84 (r/w/o: 144956.99/0.00/20711.86) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 33s ] thds: 16 tps: 10340.00 qps: 165431.99 (r/w/o: 144751.99/0.00/20680.00) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 34s ] thds: 16 tps: 10358.99 qps: 165757.88 (r/w/o: 145039.90/0.00/20717.99) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 35s ] thds: 16 tps: 10344.93 qps: 165515.92 (r/w/o: 144827.06/0.00/20688.87) lat (ms,95%): 1.93 err/s: 0.00 reconn/s: 0.00

[ 36s ] thds: 16 tps: 10387.07 qps: 166156.14 (r/w/o: 145382.00/0.00/20774.14) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

==== average tps during idle flushing: 165K-166K

block-3:

[ 37s ] thds: 16 tps: 10526.01 qps: 168472.21 (r/w/o: 147419.18/0.00/21053.03) lat (ms,95%): 1.89 err/s: 0.00 reconn/s: 0.00

[ 38s ] thds: 16 tps: 10748.06 qps: 171991.89 (r/w/o: 150495.78/0.00/21496.11) lat (ms,95%): 1.79 err/s: 0.00 reconn/s: 0.00

[ 39s ] thds: 16 tps: 10654.91 qps: 170454.53 (r/w/o: 149144.71/0.00/21309.82) lat (ms,95%): 1.76 err/s: 0.00 reconn/s: 0.00

[ 40s ] thds: 16 tps: 10669.93 qps: 170714.83 (r/w/o: 149374.98/0.00/21339.85) lat (ms,95%): 1.76 err/s: 0.00 reconn/s: 0.00

[ 41s ] thds: 16 tps: 10661.98 qps: 170590.61 (r/w/o: 149267.66/0.00/21322.95) lat (ms,95%): 1.76 err/s: 0.00 reconn/s: 0.00

[ 42s ] thds: 16 tps: 10667.20 qps: 170680.16 (r/w/o: 149345.77/0.00/21334.40) lat (ms,95%): 1.76 err/s: 0.00 reconn/s: 0.00

==== average tps during no flushing: 170K

------------------------------------------------------------------------------------------------------------------------

If we keep the idle flushing aside for a minute then the problem could be also re-defined as parallel adaptive flushing during select workload slows down select workload (by 3% in the said case).

This should be looked upon separately. I presume there is mutex contention that we are hitting given pages are being flushed and also being read from the same buffer pool.

Bug

Major

MDEV-25113 Reduce effect of parallel background flush on select workload

MDEV-27295 MariaDB 10.5 does not do idle checkpoint (regression). Marked as fixed on 10.5.13 but it is not

MDEV-23855 InnoDB log checkpointing causes regression for write-heavy OLTP

MDEV-24537 innodb_max_dirty_pages_pct_lwm=0 lost its special meaning

MDEV-25093 Adaptive flushing fails to kick in even if innodb_adaptive_flushing_lwm is hit. (possible regression)

MDEV-30000 make mariadb-backup to force an innodb checkpoint

Posting the possible patch: https://github.com/mysqlonarm/server/commit/b5cf788d0f27a1fbebb3ccc489783efeb3c270e1