This change to mysql-test/unstable-tests was lost in a merge commit. The fix is trivial: add the line back to mysql-test/unstable-tests.

However, the underlying issue is that current MariaDB Server practices allow Travis-CI to stay broken, which has the following effects:

- All new and updated pull requests at https://github.com/MariaDB/server/pulls start to fail. This signals to both contributors and reviewers that the code is broken and not worth reviewing until at least the CI passes.
- Any new contributor branching off the latest development git branch gets a failing CI as their starting point, which will most likely put them off.
- Quality deteriorates: once the CI starts failing, people start to ignore all of its results, and more and more failures creep in.

And so on. I hope this makes clear why a failing CI is bad: it is counter-productive and wastes a lot of human effort that could go into productive development.

Now, what can be done about this?

Is there a need for more education? Travis-CI was added in August 2016 as the first and only CI system accessible to outside contributors. Surely all developers have had a chance to learn about it? Or are there some obstacles? Should we maybe organize a webinar where we quickly go through what Travis-CI is, what the lines in .travis.yml mean, and how to browse Travis-CI.org to look at build results or debug them?

I think the underlying problem here is the same one that causes so many failures on buildbot.askmonty.org and buildbot.mariadb.org as well: way too many people make the wrong trade-off between "Just get it done and move on, don't wait for tests" and "Work on something else, only merge once tests complete".

What do you think? What should be done to improve the situation, to raise the quality of MariaDB contributions from both current developers and future contributors, and to speed up progress by having fewer breakages and steps backwards?

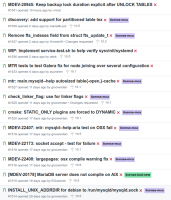

Screenshot to illustrate how it looks like when the CI is permanently broken and all Pull Requests are deemed failing on automatic tests:

Note the red cross next to titles.

Otto Kekäläinen

added a comment - Commit https://github.com/MariaDB/server/pull/1463/commits/5d85bc08c6412d067a69d2c1354a10f9a803b332 will make Travis-CI green again, but the root problem here remains, so I am not closing the issue.



Sergei Golubchik

added a comment - The issue is, as you know, that our CI at the moment is https://buildbot.askmonty.org/buildbot/

If developers don't use Travis CI, they won't look to see if it's broken.


Otto Kekäläinen

added a comment - Travis CI is integrated into GitHub and visible there on every commit, so one would practically have to actively ignore it not to see it.

Travis CI can also automatically email the committer when a build fails, so there is no extra burden of looking at a website all the time.

The only burden is the need to wait for it to complete, which takes about as long as waiting for buildbot results.

Shouldn't developers be happy if some system automatically detects a mistake they made so they can avoid it?


Marko Mäkelä

added a comment - I cared enough to close MDEV-21976 and merge it up to 10.5. In the merge to 10.5, I also removed main.udf from mysql-test/unstable-tests, because that file in 10.5 is still based on the 10.4 version. In stable releases, that file will be updated based on observed test failures or recent changes to tests.


Daniel Black

added a comment - The arm64, ppc64le and s390x environments all have multiple space/quota issues reported to Travis (https://travis-ci.community/c/environments/multi-cpu-arch/96) that have been largely ignored. (I think arm64 got a quota bump at some point, though I suspect that some part of the quota never gets reset at the start of the build, or that there is some residual allocation.)

This is not always related to the install step: just installing apt dependencies triggered this failure in https://travis-ci.org/github/MariaDB/server/jobs/687470429, and I'm fairly sure the repository isn't even cloned at that point.


Marko Mäkelä

added a comment (edited) - After my merge to 10.5, apart from the quota issues that danblack mentioned, it looks like we only got a Mac OS X failure that ought to be fixed when MDEV-22173 is finally merged.


Otto Kekäläinen

added a comment - danblack, if particular test jobs are unstable and produce false positives, then we can simply exclude or ignore them so that the build is green. So far my own experience is that when I branch off from a master branch and start developing something, I first need to debug and report a bunch of real test failures that have been ignored before I actually get to test the change I am making myself.


Otto Kekäläinen

added a comment - Currently these branches at https://travis-ci.org/github/MariaDB/server/branches are green:

- 10.6
- 10.5
- 10.4

The 10.3 branch was green, but the two most recent builds turned red.

In 10.2 the build has been broken for quite a while, so maybe we should just delete the .travis.yml file from that branch to avoid spending time on it? It is not helping any testing now.


Marko Mäkelä

added a comment (edited) - otto, for a recent 10.3 build https://travis-ci.org/github/MariaDB/server/builds/714262380 I see two failures, apparently due to bad connectivity when trying to download clang:

Could not connect to apt.llvm.org:80 (199.232.66.49), connection timed out

E: Unable to locate package clang-5.0

Maybe we should try to find a solution that allows such build-time dependencies to be cached? The commit only disabled a test (no code changes).

For a 10.2 build, I see something else:

The job exceeded the maximum time limit for jobs, and has been terminated.

Do we really have to spend time building TokuDB on Travis? It is not getting updates, and was finally removed in 10.5/10.6 by MDEV-19780.

Errors/warnings were found in logfiles during server shutdown that I have also seen on http://buildbot.askmonty.org from time to time:

10.2 dc716da4571465af3adadcd2c471f11fef3a2191

Warnings generated in error logs during shutdown after running tests: sys_vars.thread_pool_size_high

Warning: Memory not freed: 38408

There does not appear to be any bug report for this memory leak yet.

In my opinion, a proverb that I heard during my compulsory service in the Finnish Defence Forces applies: "Valvomaton käsky on kasku." (An unenforced order is a joke.) If nobody spends effort on monitoring Travis test failures, the tests are going to be rather useless. Build failures probably do bring some more value (if someone notices them before the breakage reaches a release).

Otto Kekäläinen

added a comment - If there are a lot of false positives, then I suggest we simply disable those tests. It will also make the suite run faster.

Once Ubuntu 20.04 is available on Travis-CI, we can get rid of those extra dependencies and streamline the config. Work in progress at https://github.com/MariaDB/server/pull/1507

Otto Kekäläinen

added a comment - Nice to see that nowadays Travis-CI seems to be all green and people are not ignoring the results of it!

Thanks!

Screenshots from https://travis-ci.org/github/MariaDB/server/branches
