Details

Description

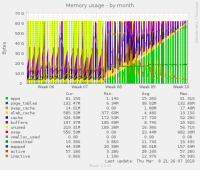

Hi. After we upgraded our servers to the latest 10.2 release, MariaDB started to use all available RAM within a very short time on some servers. On older releases the same servers never had this problem. Even servers with 256GB of RAM are affected now, which we never expected. For example, we moved one server from 64GB to 128GB of RAM, and RAM usage is now over 100GB, whereas with 64GB it was about 50%, with all the same sites and the same setup; only the MariaDB release is different.

The only similar report I found is MDEV-13403.

Any help or advice on what to do would be appreciated, because we currently need to restart MySQL on these servers every several days.

The my.cnf file is attached.

cPanel says the problem is related to MariaDB and that they cannot help.

Again, this is a normal cPanel setup on CentOS 7.4. All the memory problems started after the upgrade to 10.2, and we never had this kind of problem with any older version of MariaDB.

Any help would be great.

Attachments

Issue Links

- duplicates: MDEV-33279 Disable transparent huge pages after page buffers has been allocated (Closed)

- relates to: MDEV-18866 Chewing Through Swap (Open)