Details

Type: Bug

Status: Closed

Priority: Critical

Resolution: Fixed

Affects Version/s: 5.5.32

None

None

Environment: Debian Wheezy x86_64

Linux greeneggs.lentz.com.au 3.9.3-x86_64-linode33 #1 SMP Mon May 20 10:22:57 EDT 2013 x86_64 GNU/Linux

Description

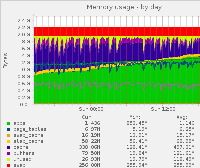

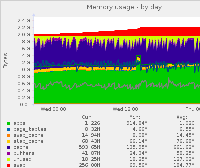

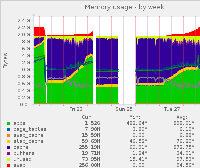

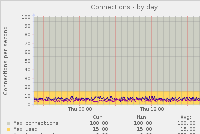

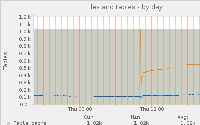

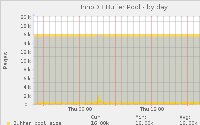

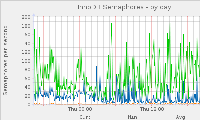

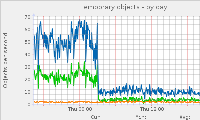

After running mariadb-5.5.32 in a multi-master setup for a few days, the active master (the one getting all the reads) is almost out of memory.

The replication slave (same version) doesn't suffer from the memory leak, with or without the replication filters defined.

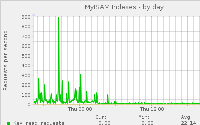

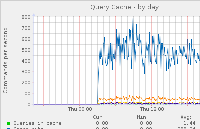

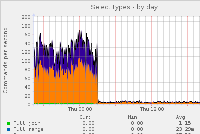

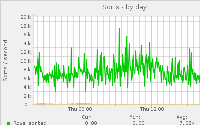

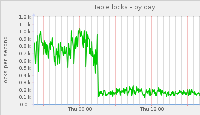

Disabling the query cache on the active master may have slowed the memory leak (it was slightly off-peak at the time), but it did not stop it. In the attached graph, the query cache was disabled from Wednesday 05:30 to Thursday 03:00.

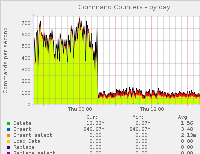

In greeneggs-mysql_commands-day.png, the first drop is when the query cache was turned back on. At the end, I moved the active master to the other server. The other graphs cover the same time interval.

Memory usage calculation:

From http://dev.mysql.com/doc/refman/5.5/en/memory-use.html

Per connection:

@@read_buffer_size + @@read_rnd_buffer_size + @@sort_buffer_size + @@join_buffer_size + @@binlog_cache_size + @@thread_stack + @@tmp_table_size = 19070976

Max_used_connections: 15

Static component:

@@key_buffer_size + @@query_cache_size + @@innodb_buffer_pool_size + @@innodb_additional_mem_pool_size + @@innodb_log_buffer_size = 322961408

select 15 * 19070976 + 322961408; = 609026048

So the expected maximum is about 609M.
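The arithmetic above can be reproduced with a short script. This is a sketch that plugs in the values from the SHOW VARIABLES output further down. `thread_stack` and `tmp_table_size` do not appear in that (truncated) listing, so the values used for them (294912 and 16777216) are assumptions; together they account for the remaining 17072128 bytes of the reported per-connection total of 19070976. Like the manual-page formula it follows, the result is a rough worst-case estimate, not a measurement.

```python
# Sketch: reproduce the report's worst-case memory estimate for mysqld.
# Values come from the SHOW VARIABLES / SHOW STATUS output in this report,
# except thread_stack and tmp_table_size, which are ASSUMED (not listed).

PER_CONNECTION = {
    "read_buffer_size": 1048576,
    "read_rnd_buffer_size": 524288,
    "sort_buffer_size": 262144,
    "join_buffer_size": 131072,
    "binlog_cache_size": 32768,
    "thread_stack": 294912,        # assumption: not in the truncated listing
    "tmp_table_size": 16777216,    # assumption: not in the truncated listing
}

GLOBAL_BUFFERS = {
    "key_buffer_size": 8388608,
    "query_cache_size": 33554432,
    "innodb_buffer_pool_size": 268435456,
    "innodb_additional_mem_pool_size": 8388608,
    "innodb_log_buffer_size": 4194304,
}

MAX_USED_CONNECTIONS = 15  # Max_used_connections from SHOW GLOBAL STATUS


def estimate_max_memory(per_conn, global_bufs, connections):
    """Return (bytes per connection, global bytes, estimated total bytes)."""
    per = sum(per_conn.values())
    glob = sum(global_bufs.values())
    return per, glob, connections * per + glob


if __name__ == "__main__":
    per, glob, total = estimate_max_memory(
        PER_CONNECTION, GLOBAL_BUFFERS, MAX_USED_CONNECTIONS
    )
    print(f"per connection: {per}, static: {glob}, total: {total}")
```

Keeping the per-connection and global buffers in separate dictionaries makes it easy to see which term dominates: here the assumed `tmp_table_size` is most of the per-connection figure, and the InnoDB buffer pool is most of the static one.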

From top:

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

4532 mysql 20 0 1999m 1.4g 6072 S 4.0 71.9 1017:21 mysqld
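To put the `top` figure next to the 609M estimate, here is a small sketch that converts `top` size fields to bytes. It assumes procps-style output, where a bare number is in KiB and a `k`/`m`/`g`/`t` suffix is a power of 1024. Because `top` rounds RES to one decimal place, the comparison is only approximate.

```python
# Sketch: convert top(1) size fields to bytes and compare mysqld's RES with
# the 609026048-byte estimate. Assumes procps top conventions: bare numbers
# are KiB, and k/m/g/t suffixes are powers of 1024.

UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3, "t": 1024 ** 4}
ESTIMATED_MAX = 609026048  # 15 * 19070976 + 322961408


def parse_top_size(field: str) -> int:
    """Convert a top size field such as '1.4g', '1999m' or '6072' to bytes."""
    field = field.strip().lower()
    if field and field[-1] in UNITS:
        return int(float(field[:-1]) * UNITS[field[-1]])
    return int(field) * 1024  # no suffix: value is in KiB


if __name__ == "__main__":
    res = parse_top_size("1.4g")  # RES column for mysqld
    excess = res - ESTIMATED_MAX
    print(f"RES ~ {res} bytes, {excess} bytes above the estimate "
          f"({res / ESTIMATED_MAX:.1f}x)")
```

With RES at 1.4g, resident memory comes out at roughly 2.5 times the estimate, i.e. somewhere around 850 MiB more than the configured buffers would suggest.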

I've still got the server running if more status is required.

show engine innodb status

=====================================

130830 0:46:23 INNODB MONITOR OUTPUT

=====================================

Per second averages calculated from the last 17 seconds

-----------------

BACKGROUND THREAD

-----------------

srv_master_thread loops: 372124 1_second, 372076 sleeps, 37113 10_second, 1716 background, 1715 flush

srv_master_thread log flush and writes: 350597

----------

SEMAPHORES

----------

OS WAIT ARRAY INFO: reservation count 999301, signal count 1149136

Mutex spin waits 3647275, rounds 12020660, OS waits 198769

RW-shared spins 896419, rounds 19893071, OS waits 574516

RW-excl spins 68006, rounds 6887165, OS waits 204958

Spin rounds per wait: 3.30 mutex, 22.19 RW-shared, 101.27 RW-excl

--------

FILE I/O

--------

I/O thread 0 state: waiting for completed aio requests (insert buffer thread)

I/O thread 1 state: waiting for completed aio requests (log thread)

I/O thread 2 state: waiting for completed aio requests (read thread)

I/O thread 3 state: waiting for completed aio requests (read thread)

I/O thread 4 state: waiting for completed aio requests (write thread)

I/O thread 5 state: waiting for completed aio requests (write thread)

Pending normal aio reads: 0 [0, 0] , aio writes: 0 [0, 0] ,

ibuf aio reads: 0, log i/o's: 0, sync i/o's: 0

Pending flushes (fsync) log: 0; buffer pool: 0

7906118 OS file reads, 39186717 OS file writes, 23493355 OS fsyncs

0.65 reads/s, 16384 avg bytes/read, 66.29 writes/s, 29.06 fsyncs/s

-------------------------------------

INSERT BUFFER AND ADAPTIVE HASH INDEX

-------------------------------------

Ibuf: size 1, free list len 287, seg size 289, 111478 merges

merged operations:

insert 106175, delete mark 100854, delete 13481

discarded operations:

insert 0, delete mark 0, delete 0

Hash table size 553229, node heap has 584 buffer(s)

244.87 hash searches/s, 22.53 non-hash searches/s

---

LOG

---

Log sequence number 1981376581482

Log flushed up to 1981376581482

Last checkpoint at 1981376562791

Max checkpoint age 84223550

Checkpoint age target 81591565

Modified age 18691

Checkpoint age 18691

0 pending log writes, 0 pending chkp writes

22419510 log i/o's done, 26.00 log i/o's/second

----------------------

BUFFER POOL AND MEMORY

----------------------

Total memory allocated 275644416; in additional pool allocated 0

Total memory allocated by read views 136

Internal hash tables (constant factor + variable factor)

Adaptive hash index 13998304 (4425832 + 9572472)

Page hash 277432 (buffer pool 0 only)

Dictionary cache 10287074 (1107952 + 9179122)

File system 648160 (82672 + 565488)

Lock system 665688 (664936 + 752)

Recovery system 0 (0 + 0)

Dictionary memory allocated 9179122

Buffer pool size 16383

Buffer pool size, bytes 268419072

Free buffers 1

Database pages 15798

Old database pages 5811

Modified db pages 97

Pending reads 0

Pending writes: LRU 0, flush list 0, single page 0

Pages made young 10760065, not young 0

0.53 youngs/s, 0.00 non-youngs/s

Pages read 7903370, created 882751, written 16452888

0.65 reads/s, 0.00 creates/s, 39.47 writes/s

Buffer pool hit rate 1000 / 1000, young-making rate 0 / 1000 not 0 / 1000

Pages read ahead 0.00/s, evicted without access 0.00/s, Random read ahead 0.00/s

LRU len: 15798, unzip_LRU len: 0

I/O sum[1550]:cur[322], unzip sum[0]:cur[0]

--------------

ROW OPERATIONS

--------------

0 queries inside InnoDB, 0 queries in queue

1 read views open inside InnoDB

0 transactions active inside InnoDB

0 out of 1000 descriptors used

--OLDEST VIEW--

Normal read view

Read view low limit trx n:o 7D2D2F07

Read view up limit trx id 7D2D2F07

Read view low limit trx id 7D2D2F07

Read view individually stored trx ids:

-----------------

Main thread process no. 4532, id 140201824495360, state: sleeping

Number of rows inserted 1308400, updated 9736429, deleted 1227755, read 34888786828

1.00 inserts/s, 11.71 updates/s, 0.00 deletes/s, 249.22 reads/s

------------------------

LATEST DETECTED DEADLOCK

------------------------

130830 0:27:15

*** (1) TRANSACTION:

TRANSACTION 7D2C63D3, ACTIVE 0 sec starting index read

| Variable_name | Value |

| Aborted_clients | 57 |

| Aborted_connects | 0 |

| Access_denied_errors | 0 |

| Aria_pagecache_blocks_not_flushed | 0 |

| Aria_pagecache_blocks_unused | 15737 |

| Aria_pagecache_blocks_used | 3127 |

| Aria_pagecache_read_requests | 262055553 |

| Aria_pagecache_reads | 107163 |

| Aria_pagecache_write_requests | 69866678 |

| Aria_pagecache_writes | 0 |

| Aria_transaction_log_syncs | 0 |

| Binlog_commits | 12529081 |

| Binlog_group_commits | 12486299 |

| Binlog_snapshot_file | mariadb-bin.001408 |

| Binlog_snapshot_position | 57228334 |

| Binlog_bytes_written | 43273655871 |

| Binlog_cache_disk_use | 768128 |

| Binlog_cache_use | 12510967 |

| Binlog_stmt_cache_disk_use | 24 |

| Binlog_stmt_cache_use | 18077 |

| Busy_time | 0.000000 |

| Bytes_received | 60129853267 |

| Bytes_sent | 691695773018 |

| Com_admin_commands | 3809 |

| Com_assign_to_keycache | 0 |

| Com_alter_db | 0 |

| Com_alter_db_upgrade | 0 |

| Com_alter_event | 0 |

| Com_alter_function | 0 |

| Com_alter_procedure | 0 |

| Com_alter_server | 0 |

| Com_alter_table | 114 |

| Com_alter_tablespace | 0 |

| Com_analyze | 0 |

| Com_begin | 62278 |

| Com_binlog | 0 |

| Com_call_procedure | 0 |

| Com_change_db | 69728 |

| Com_change_master | 0 |

| Com_check | 1426 |

| Com_checksum | 0 |

| Com_commit | 45717 |

| Com_create_db | 1 |

| Com_create_event | 0 |

| Com_create_function | 0 |

| Com_create_index | 0 |

| Com_create_procedure | 1 |

| Com_create_server | 0 |

| Com_create_table | 42 |

| Com_create_trigger | 0 |

| Com_create_udf | 0 |

| Com_create_user | 0 |

| Com_create_view | 0 |

| Com_dealloc_sql | 24 |

| Com_delete | 388136 |

| Com_delete_multi | 5 |

| Com_do | 0 |

| Com_drop_db | 1 |

| Com_drop_event | 0 |

| Com_drop_function | 0 |

| Com_drop_index | 0 |

| Com_drop_procedure | 2 |

| Com_drop_server | 0 |

| Com_drop_table | 1 |

| Com_drop_trigger | 0 |

| Com_drop_user | 0 |

| Com_drop_view | 0 |

| Com_empty_query | 0 |

| Com_execute_sql | 24 |

| Com_flush | 6 |

| Com_grant | 0 |

| Com_ha_close | 0 |

| Com_ha_open | 0 |

| Com_ha_read | 0 |

| Com_help | 2 |

| Com_insert | 847948 |

| Com_insert_select | 551 |

| Com_install_plugin | 0 |

| Com_kill | 50 |

| Com_load | 0 |

| Com_lock_tables | 0 |

| Com_optimize | 0 |

| Com_preload_keys | 0 |

| Com_prepare_sql | 24 |

| Com_purge | 0 |

| Com_purge_before_date | 0 |

| Com_release_savepoint | 0 |

| Com_rename_table | 0 |

| Com_rename_user | 0 |

| Com_repair | 0 |

| Com_replace | 0 |

| Com_replace_select | 0 |

| Com_reset | 0 |

| Com_resignal | 0 |

| Com_revoke | 0 |

| Com_revoke_all | 0 |

| Com_rollback | 3 |

| Com_rollback_to_savepoint | 0 |

| Com_savepoint | 0 |

| Com_select | 47174988 |

| Com_set_option | 1189075 |

| Com_signal | 0 |

| Com_show_authors | 0 |

| Com_show_binlog_events | 3 |

| Com_show_binlogs | 1166 |

| Com_show_charsets | 0 |

| Com_show_client_statistics | 0 |

| Com_show_collations | 0 |

| Com_show_contributors | 0 |

| Com_show_create_db | 115 |

| Com_show_create_event | 0 |

| Com_show_create_func | 0 |

| Com_show_create_proc | 0 |

| Com_show_create_table | 4387 |

| Com_show_create_trigger | 0 |

| Com_show_databases | 7 |

| Com_show_engine_logs | 0 |

| Com_show_engine_mutex | 0 |

| Com_show_engine_status | 1167 |

| Com_show_events | 0 |

| Com_show_errors | 0 |

| Com_show_fields | 5721 |

| Com_show_function_status | 0 |

| Com_show_grants | 0 |

| Com_show_keys | 0 |

| Com_show_index_statistics | 0 |

| Com_show_master_status | 1 |

| Com_show_open_tables | 0 |

| Com_show_plugins | 0 |

| Com_show_privileges | 0 |

| Com_show_procedure_status | 0 |

| Com_show_processlist | 1039 |

| Com_show_profile | 0 |

| Com_show_profiles | 0 |

| Com_show_relaylog_events | 0 |

| Com_show_slave_hosts | 0 |

| Com_show_slave_status | 140780 |

| Com_show_status | 12670 |

| Com_show_storage_engines | 0 |

| Com_show_table_statistics | 0 |

| Com_show_table_status | 4287 |

| Com_show_tables | 6794 |

| Com_show_triggers | 4280 |

| Com_show_user_statistics | 0 |

| Com_show_variables | 2175 |

| Com_show_warnings | 0 |

| Com_slave_start | 10 |

| Com_slave_stop | 4 |

| Com_stmt_close | 24 |

| Com_stmt_execute | 24 |

| Com_stmt_fetch | 0 |

| Com_stmt_prepare | 24 |

| Com_stmt_reprepare | 0 |

| Com_stmt_reset | 0 |

| Com_stmt_send_long_data | 0 |

| Com_truncate | 0 |

| Com_uninstall_plugin | 0 |

| Com_unlock_tables | 2 |

| Com_update | 7082015 |

| Com_update_multi | 1 |

| Com_xa_commit | 0 |

| Com_xa_end | 0 |

| Com_xa_prepare | 0 |

| Com_xa_recover | 0 |

| Com_xa_rollback | 0 |

| Com_xa_start | 0 |

| Compression | OFF |

| Connections | 1164212 |

| Cpu_time | 0.000000 |

| Created_tmp_disk_tables | 2202858 |

| Created_tmp_files | 420678 |

| Created_tmp_tables | 5264753 |

| Delayed_errors | 0 |

| Delayed_insert_threads | 0 |

| Delayed_writes | 0 |

| Empty_queries | 14900340 |

| Executed_events | 0 |

| Executed_triggers | 0 |

| Feature_dynamic_columns | 0 |

| Feature_fulltext | 2 |

| Feature_gis | 28 |

| Feature_locale | 0 |

| Feature_subquery | 135848 |

| Feature_timezone | 6850 |

| Feature_trigger | 586 |

| Feature_xml | 0 |

| Flush_commands | 4 |

| Handler_commit | 63318523 |

| Handler_delete | 748245 |

| Handler_discover | 0 |

| Handler_icp_attempts | 71118314 |

| Handler_icp_match | 71108704 |

| Handler_mrr_init | 0 |

| Handler_mrr_key_refills | 0 |

| Handler_mrr_rowid_refills | 0 |

| Handler_prepare | 14968970 |

| Handler_read_first | 1155850 |

| Handler_read_key | 466199920 |

| Handler_read_last | 55346 |

| Handler_read_next | 27258307498 |

| Handler_read_prev | 8491322 |

| Handler_read_rnd | 66364460 |

| Handler_read_rnd_deleted | 1194 |

| Handler_read_rnd_next | 8174479457 |

| Handler_rollback | 21990 |

| Handler_savepoint | 0 |

| Handler_savepoint_rollback | 0 |

| Handler_tmp_update | 15018397 |

| Handler_tmp_write | 515819305 |

| Handler_update | 6481729 |

| Handler_write | 849123 |

| Innodb_adaptive_hash_cells | 553229 |

| Innodb_adaptive_hash_heap_buffers | 581 |

| Innodb_adaptive_hash_hash_searches | 1107671046 |

| Innodb_adaptive_hash_non_hash_searches | 249488632 |

| Innodb_background_log_sync | 350697 |

| Innodb_buffer_pool_pages_data | 15800 |

| Innodb_buffer_pool_bytes_data | 258867200 |

| Innodb_buffer_pool_pages_dirty | 295 |

| Innodb_buffer_pool_bytes_dirty | 4833280 |

| Innodb_buffer_pool_pages_flushed | 16456234 |

| Innodb_buffer_pool_pages_LRU_flushed | 48422 |

| Innodb_buffer_pool_pages_free | 1 |

| Innodb_buffer_pool_pages_made_not_young | 0 |

| Innodb_buffer_pool_pages_made_young | 10760117 |

| Innodb_buffer_pool_pages_misc | 582 |

| Innodb_buffer_pool_pages_old | 5812 |

| Innodb_buffer_pool_pages_total | 16383 |

| Innodb_buffer_pool_read_ahead_rnd | 0 |

| Innodb_buffer_pool_read_ahead | 2072088 |

| Innodb_buffer_pool_read_ahead_evicted | 91126 |

| Innodb_buffer_pool_read_requests | 10973288017 |

| Innodb_buffer_pool_reads | 5715842 |

| Innodb_buffer_pool_wait_free | 9 |

| Innodb_buffer_pool_write_requests | 132620870 |

| Innodb_checkpoint_age | 88015 |

| Innodb_checkpoint_max_age | 84223550 |

| Innodb_checkpoint_target_age | 81591565 |

| Innodb_data_fsyncs | 23497491 |

| Innodb_data_pending_fsyncs | 0 |

| Innodb_data_pending_reads | 0 |

| Innodb_data_pending_writes | 0 |

| Innodb_data_read | 129493962752 |

| Innodb_data_reads | 7906173 |

| Innodb_data_writes | 39194030 |

| Innodb_data_written | 586483447808 |

| Innodb_dblwr_pages_written | 16456234 |

| Innodb_dblwr_writes | 182242 |

| Innodb_deadlocks | 432 |

| Innodb_dict_tables | 1315 |

| Innodb_have_atomic_builtins | ON |

| Innodb_history_list_length | 3523 |

| Innodb_ibuf_discarded_delete_marks | 0 |

| Innodb_ibuf_discarded_deletes | 0 |

| Innodb_ibuf_discarded_inserts | 0 |

| Innodb_ibuf_free_list | 287 |

| Innodb_ibuf_merged_delete_marks | 100855 |

| Innodb_ibuf_merged_deletes | 13481 |

| Innodb_ibuf_merged_inserts | 106192 |

| Innodb_ibuf_merges | 111495 |

| Innodb_ibuf_segment_size | 289 |

| Innodb_ibuf_size | 1 |

| Innodb_log_waits | 0 |

| Innodb_log_write_requests | 73751900 |

| Innodb_log_writes | 22385577 |

| Innodb_lsn_current | 1981377626218 |

| Innodb_lsn_flushed | 1981377626218 |

| Innodb_lsn_last_checkpoint | 1981377538203 |

| Innodb_master_thread_1_second_loops | 372239 |

| Innodb_master_thread_10_second_loops | 37124 |

| Innodb_master_thread_background_loops | 1716 |

| Innodb_master_thread_main_flush_loops | 1715 |

| Innodb_master_thread_sleeps | 372191 |

| Innodb_max_trx_id | 2100117045 |

| Innodb_mem_adaptive_hash | 13965536 |

| Innodb_mem_dictionary | 10287074 |

| Innodb_mem_total | 275644416 |

| Innodb_mutex_os_waits | 198788 |

| Innodb_mutex_spin_rounds | 12021403 |

| Innodb_mutex_spin_waits | 3647342 |

| Innodb_oldest_view_low_limit_trx_id | 2100116933 |

| Innodb_os_log_fsyncs | 22425791 |

| Innodb_os_log_pending_fsyncs | 0 |

| Innodb_os_log_pending_writes | 0 |

| Innodb_os_log_written | 47227117568 |

| Innodb_page_size | 16384 |

| Innodb_pages_created | 882752 |

| Innodb_pages_read | 7903425 |

| Innodb_pages_written | 16456234 |

| Innodb_purge_trx_id | 2100116933 |

| Innodb_purge_undo_no | 0 |

| Innodb_row_lock_current_waits | 0 |

| Innodb_current_row_locks | 0 |

| Innodb_row_lock_time | 3209772 |

| Innodb_row_lock_time_avg | 74 |

| Innodb_row_lock_time_max | 31935 |

| Innodb_row_lock_waits | 43208 |

| Innodb_rows_deleted | 1227755 |

| Innodb_rows_inserted | 1308502 |

| Innodb_rows_read | 34888819968 |

| Innodb_rows_updated | 9738262 |

| Innodb_read_views_memory | 136 |

| Innodb_descriptors_memory | 8000 |

| Innodb_s_lock_os_waits | 574527 |

| Innodb_s_lock_spin_rounds | 19893402 |

| Innodb_s_lock_spin_waits | 896432 |

| Innodb_truncated_status_writes | 0 |

| Innodb_x_lock_os_waits | 204987 |

| Innodb_x_lock_spin_rounds | 6888035 |

| Innodb_x_lock_spin_waits | 68006 |

| Key_blocks_not_flushed | 0 |

| Key_blocks_unused | 5353 |

| Key_blocks_used | 4170 |

| Key_blocks_warm | 141 |

| Key_read_requests | 6295929 |

| Key_reads | 9626 |

| Key_write_requests | 21159 |

| Key_writes | 10962 |

| Last_query_cost | 0.000000 |

| Max_used_connections | 15 |

| Not_flushed_delayed_rows | 0 |

| Open_files | 142 |

| Open_streams | 0 |

| Open_table_definitions | 397 |

| Open_tables | 595 |

| Opened_files | 9756380 |

| Opened_table_definitions | 6425 |

| Opened_tables | 8666 |

| Opened_views | 0 |

| Performance_schema_cond_classes_lost | 0 |

| Performance_schema_cond_instances_lost | 0 |

| Performance_schema_file_classes_lost | 0 |

| Performance_schema_file_handles_lost | 0 |

| Performance_schema_file_instances_lost | 0 |

| Performance_schema_locker_lost | 0 |

| Performance_schema_mutex_classes_lost | 0 |

| Performance_schema_mutex_instances_lost | 0 |

| Performance_schema_rwlock_classes_lost | 0 |

| Performance_schema_rwlock_instances_lost | 0 |

| Performance_schema_table_handles_lost | 0 |

| Performance_schema_table_instances_lost | 0 |

| Performance_schema_thread_classes_lost | 0 |

| Performance_schema_thread_instances_lost | 0 |

| Prepared_stmt_count | 0 |

| Qcache_free_blocks | 6616 |

| Qcache_free_memory | 18486664 |

| Qcache_hits | 75724207 |

| Qcache_inserts | 8304484 |

| Qcache_lowmem_prunes | 1719071 |

| Qcache_not_cached | 2443705 |

| Qcache_queries_in_cache | 8992 |

| Qcache_total_blocks | 24978 |

| Queries | 142821923 |

| Questions | 133827961 |

| Rows_read | 35275818740 |

| Rows_sent | 1787826753 |

| Rows_tmp_read | 592687087 |

| Rpl_status | AUTH_MASTER |

| Select_full_join | 268533 |

| Select_full_range_join | 6393 |

| Select_range | 6218451 |

| Select_range_check | 0 |

| Select_scan | 3587397 |

| Slave_heartbeat_period | 1800.000 |

| Slave_open_temp_tables | 0 |

| Slave_received_heartbeats | 0 |

| Slave_retried_transactions | 0 |

| Slave_running | ON |

| Slow_launch_threads | 0 |

| Slow_queries | 3796864 |

| Sort_merge_passes | 269513 |

| Sort_range | 170798 |

| Sort_rows | 1870364969 |

| Sort_scan | 4917255 |

| Sphinx_error | |

| Sphinx_time | |

| Sphinx_total | |

| Sphinx_total_found | |

| Sphinx_word_count | |

| Sphinx_words | |

| Ssl_accept_renegotiates | 0 |

| Ssl_accepts | 0 |

| Ssl_callback_cache_hits | 0 |

| Ssl_cipher | |

| Ssl_cipher_list | |

| Ssl_client_connects | 0 |

| Ssl_connect_renegotiates | 0 |

| Ssl_ctx_verify_depth | 0 |

| Ssl_ctx_verify_mode | 0 |

| Ssl_default_timeout | 0 |

| Ssl_finished_accepts | 0 |

| Ssl_finished_connects | 0 |

| Ssl_session_cache_hits | 0 |

| Ssl_session_cache_misses | 0 |

| Ssl_session_cache_mode | NONE |

| Ssl_session_cache_overflows | 0 |

| Ssl_session_cache_size | 0 |

| Ssl_session_cache_timeouts | 0 |

| Ssl_sessions_reused | 0 |

| Ssl_used_session_cache_entries | 0 |

| Ssl_verify_depth | 0 |

| Ssl_verify_mode | 0 |

| Ssl_version | |

| Subquery_cache_hit | 4512 |

| Subquery_cache_miss | 3930 |

| Syncs | 4171361 |

| Table_locks_immediate | 85865730 |

| Table_locks_waited | 15 |

| Tc_log_max_pages_used | 0 |

| Tc_log_page_size | 0 |

| Tc_log_page_waits | 46 |

| Threadpool_idle_threads | 0 |

| Threadpool_threads | 0 |

| Threads_cached | 13 |

| Threads_connected | 2 |

| Threads_created | 15 |

| Threads_running | 2 |

| Uptime | 349494 |

| Uptime_since_flush_status | 349494 |

| Variable_name | Value | |

| aria_block_size | 8192 | |

| aria_checkpoint_interval | 30 | |

| aria_checkpoint_log_activity | 1048576 | |

| aria_force_start_after_recovery_failures | 0 | |

| aria_group_commit | none | |

| aria_group_commit_interval | 0 | |

| aria_log_file_size | 1073741824 | |

| aria_log_purge_type | immediate | |

| aria_max_sort_file_size | 9223372036853727232 | |

| aria_page_checksum | ON | |

| aria_pagecache_age_threshold | 300 | |

| aria_pagecache_buffer_size | 134217728 | |

| aria_pagecache_division_limit | 100 | |

| aria_recover | NORMAL | |

| aria_repair_threads | 1 | |

| aria_sort_buffer_size | 134217728 | |

| aria_stats_method | nulls_unequal | |

| aria_sync_log_dir | NEWFILE | |

| aria_used_for_temp_tables | ON | |

| auto_increment_increment | 2 | |

| auto_increment_offset | 2 | |

| autocommit | ON | |

| automatic_sp_privileges | ON | |

| back_log | 50 | |

| basedir | /usr | |

| big_tables | OFF | |

| binlog_annotate_row_events | OFF | |

| binlog_cache_size | 32768 | |

| binlog_checksum | NONE | |

| binlog_direct_non_transactional_updates | OFF | |

| binlog_format | MIXED | |

| binlog_optimize_thread_scheduling | ON | |

| binlog_stmt_cache_size | 32768 | |

| bulk_insert_buffer_size | 1048576 | |

| character_set_client | latin1 | |

| character_set_connection | latin1 | |

| character_set_database | latin1 | |

| character_set_filesystem | binary | |

| character_set_results | latin1 | |

| character_set_server | latin1 | |

| character_set_system | utf8 | |

| character_sets_dir | /usr/share/mysql/charsets/ | |

| collation_connection | latin1_swedish_ci | |

| collation_database | latin1_swedish_ci | |

| collation_server | latin1_swedish_ci | |

| completion_type | NO_CHAIN | |

| concurrent_insert | ALWAYS | |

| connect_timeout | 5 | |

| datadir | /var/lib/mysql/ | |

| date_format | %Y-%m-%d | |

| datetime_format | %Y-%m-%d %H:%i:%s | |

| deadlock_search_depth_long | 15 | |

| deadlock_search_depth_short | 4 | |

| deadlock_timeout_long | 50000000 | |

| deadlock_timeout_short | 10000 | |

| debug_no_thread_alarm | OFF | |

| default_storage_engine | InnoDB | |

| default_week_format | 0 | |

| delay_key_write | ON | |

| delayed_insert_limit | 100 | |

| delayed_insert_timeout | 300 | |

| delayed_queue_size | 1000 | |

| div_precision_increment | 4 | |

| engine_condition_pushdown | OFF | |

| event_scheduler | OFF | |

| expensive_subquery_limit | 100 | |

| expire_logs_days | 3 | |

| extra_max_connections | 1 | |

| extra_port | 0 | |

| flush | OFF | |

| flush_time | 0 | |

| foreign_key_checks | ON | |

| ft_boolean_syntax | + -><()~*:""& | |

| ft_max_word_len | 84 | |

| ft_min_word_len | 4 | |

| ft_query_expansion_limit | 20 | |

| ft_stopword_file | (built-in) | |

| general_log | OFF | |

| general_log_file | greeneggs.log | |

| group_concat_max_len | 1024 | |

| have_compress | YES | |

| have_crypt | YES | |

| have_csv | YES | |

| have_dynamic_loading | YES | |

| have_geometry | YES | |

| have_innodb | YES | |

| have_ndbcluster | NO | |

| have_openssl | DISABLED | |

| have_partitioning | YES | |

| have_profiling | YES | |

| have_query_cache | YES | |

| have_rtree_keys | YES | |

| have_ssl | DISABLED | |

| have_symlink | YES | |

| hostname | greeneggs.lentz.com.au | |

| ignore_builtin_innodb | OFF | |

| ignore_db_dirs | ||

| init_connect | ||

| init_file | ||

| init_slave | ||

| innodb_adaptive_flushing | ON | |

| innodb_adaptive_flushing_method | estimate | |

| innodb_adaptive_hash_index | ON | |

| innodb_adaptive_hash_index_partitions | 1 | |

| innodb_additional_mem_pool_size | 8388608 | |

| innodb_autoextend_increment | 8 | |

| innodb_autoinc_lock_mode | 1 | |

| innodb_blocking_buffer_pool_restore | OFF | |

| innodb_buffer_pool_instances | 1 | |

| innodb_buffer_pool_populate | OFF | |

| innodb_buffer_pool_restore_at_startup | 600 | |

| innodb_buffer_pool_shm_checksum | ON | |

| innodb_buffer_pool_shm_key | 0 | |

| innodb_buffer_pool_size | 268435456 | |

| innodb_change_buffering | all | |

| innodb_checkpoint_age_target | 0 | |

| innodb_checksums | ON | |

| innodb_commit_concurrency | 0 | |

| innodb_concurrency_tickets | 500 | |

| innodb_corrupt_table_action | assert | |

| innodb_data_file_path | ibdata1:10M:autoextend | |

| innodb_data_home_dir | ||

| innodb_dict_size_limit | 0 | |

| innodb_doublewrite | ON | |

| innodb_doublewrite_file | ||

| innodb_fake_changes | OFF | |

| innodb_fast_checksum | OFF | |

| innodb_fast_shutdown | 1 | |

| innodb_file_format | Antelope | |

| innodb_file_format_check | ON | |

| innodb_file_format_max | Antelope | |

| innodb_file_per_table | ON | |

| innodb_flush_log_at_trx_commit | 1 | |

| innodb_flush_method | O_DIRECT | |

| innodb_flush_neighbor_pages | area | |

| innodb_force_load_corrupted | OFF | |

| innodb_force_recovery | 0 | |

| innodb_ibuf_accel_rate | 100 | |

| innodb_ibuf_active_contract | 1 | |

| innodb_ibuf_max_size | 134201344 | |

| innodb_import_table_from_xtrabackup | 0 | |

| innodb_io_capacity | 1000 | |

| innodb_kill_idle_transaction | 0 | |

| innodb_large_prefix | OFF | |

| innodb_lazy_drop_table | 0 | |

| innodb_lock_wait_timeout | 50 | |

| innodb_locking_fake_changes | ON | |

| innodb_locks_unsafe_for_binlog | OFF | |

| innodb_log_block_size | 512 | |

| innodb_log_buffer_size | 4194304 | |

| innodb_log_file_size | 52428800 | |

| innodb_log_files_in_group | 2 | |

| innodb_log_group_home_dir | ./ | |

| innodb_max_bitmap_file_size | 104857600 | |

| innodb_max_changed_pages | 1000000 | |

| innodb_max_dirty_pages_pct | 75 | |

| innodb_max_purge_lag | 0 | |

| innodb_merge_sort_block_size | 1048576 | |

| innodb_mirrored_log_groups | 1 | |

| innodb_old_blocks_pct | 37 | |

| innodb_old_blocks_time | 0 | |

| innodb_open_files | 400 | |

| innodb_page_size | 16384 | |

| innodb_print_all_deadlocks | OFF | |

| innodb_purge_batch_size | 20 | |

| innodb_purge_threads | 1 | |

| innodb_random_read_ahead | OFF | |

| innodb_read_ahead | linear | |

| innodb_read_ahead_threshold | 56 | |

| innodb_read_io_threads | 2 | |

| innodb_recovery_stats | OFF | |

| innodb_recovery_update_relay_log | OFF | |

| innodb_replication_delay | 0 | |

| innodb_rollback_on_timeout | OFF | |

| innodb_rollback_segments | 128 | |

| innodb_show_locks_held | 10 | |

| innodb_show_verbose_locks | 0 | |

| innodb_spin_wait_delay | 6 | |

| innodb_stats_auto_update | 1 | |

| innodb_stats_method | nulls_equal | |

| innodb_stats_on_metadata | ON | |

| innodb_stats_sample_pages | 8 | |

| innodb_stats_update_need_lock | 1 | |

| innodb_strict_mode | OFF | |

| innodb_support_xa | ON | |

| innodb_sync_spin_loops | 30 | |

| innodb_table_locks | ON | |

| innodb_thread_concurrency | 0 | |

| innodb_thread_concurrency_timer_based | OFF | |

| innodb_thread_sleep_delay | 10000 | |

| innodb_track_changed_pages | OFF | |

| innodb_use_atomic_writes | OFF | |

| innodb_use_fallocate | OFF | |

| innodb_use_global_flush_log_at_trx_commit | ON | |

| innodb_use_native_aio | ON | |

| innodb_use_sys_malloc | ON | |

| innodb_use_sys_stats_table | OFF | |

| innodb_version | 5.5.32-MariaDB-30.2 | |

| innodb_write_io_threads | 2 | |

| interactive_timeout | 28800 | |

| join_buffer_size | 131072 | |

| join_buffer_space_limit | 2097152 | |

| join_cache_level | 2 | |

| keep_files_on_create | OFF | |

| key_buffer_size | 8388608 | |

| key_cache_age_threshold | 300 | |

| key_cache_block_size | 1024 | |

| key_cache_division_limit | 100 | |

| key_cache_segments | 0 | |

| large_files_support | ON | |

| large_page_size | 0 | |

| large_pages | OFF | |

| lc_messages | en_US | |

| lc_messages_dir | /usr/share/mysql | |

| lc_time_names | en_US | |

| license | GPL | |

| local_infile | ON | |

| lock_wait_timeout | 31536000 | |

| locked_in_memory | OFF | |

| log | OFF | |

| log_bin | ON | |

| log_bin_trust_function_creators | OFF | |

| log_error | ||

| log_output | FILE | |

| log_queries_not_using_indexes | ON | |

| log_slave_updates | ON | |

| log_slow_filter | admin,filesort,filesort_on_disk,full_join,full_scan,query_cache,query_cache_miss,tmp_table,tmp_table_on_disk | |

| log_slow_queries | ON | |

| log_slow_rate_limit | 1 | |

| log_slow_verbosity | query_plan | |

| log_warnings | 2 | |

| long_query_time | 3.000000 | |

| low_priority_updates | OFF | |

| lower_case_file_system | OFF | |

| lower_case_table_names | 0 | |

| master_verify_checksum | OFF | |

| max_allowed_packet | 16777216 | |

| max_binlog_cache_size | 18446744073709547520 | |

| max_binlog_size | 104857600 | |

| max_binlog_stmt_cache_size | 18446744073709547520 | |

| max_connect_errors | 10 | |

| max_connections | 100 | |

| max_delayed_threads | 20 | |

| max_error_count | 64 | |

| max_heap_table_size | 16777216 | |

| max_insert_delayed_threads | 20 | |

| max_join_size | 18446744073709551615 | |

| max_length_for_sort_data | 1024 | |

| max_long_data_size | 16777216 | |

| max_prepared_stmt_count | 16382 | |

| max_relay_log_size | 0 | |

| max_seeks_for_key | 4294967295 | |

| max_sort_length | 1024 | |

| max_sp_recursion_depth | 0 | |

| max_tmp_tables | 32 | |

| max_user_connections | 0 | |

| max_write_lock_count | 4294967295 | |

| metadata_locks_cache_size | 1024 | |

| min_examined_row_limit | 0 | |

| mrr_buffer_size | 262144 | |

| multi_range_count | 256 | |

| myisam_block_size | 1024 | |

| myisam_data_pointer_size | 6 | |

| myisam_max_sort_file_size | 9223372036853727232 | |

| myisam_mmap_size | 18446744073709551615 | |

| myisam_recover_options | BACKUP,QUICK | |

| myisam_repair_threads | 1 | |

| myisam_sort_buffer_size | 536870912 | |

| myisam_stats_method | nulls_unequal | |

| myisam_use_mmap | OFF | |

| net_buffer_length | 16384 | |

| net_read_timeout | 30 | |

| net_retry_count | 10 | |

| net_write_timeout | 60 | |

| old | OFF | |

| old_alter_table | OFF | |

| old_passwords | OFF | |

| open_files_limit | 2159 | |

| optimizer_prune_level | 1 | |

| optimizer_search_depth | 62 | |

| optimizer_switch | index_merge=on,index_merge_union=on,index_merge_sort_union=on,index_merge_intersection=on,index_merge_sort_intersection=off,engine_condition_pushdown=off,index_condition_pushdown=on,derived_merge=on,derived_with_keys=on,firstmatch=on,loosescan=on,materialization=on,in_to_exists=on,semijoin=on,partial_match_rowid_merge=on,partial_match_table_scan=on,subquery_cache=on,mrr=off,mrr_cost_based=off,mrr_sort_keys=off,outer_join_with_cache=on,semijoin_with_cache=on,join_cache_incremental=on,join_cache_hashed=on,join_cache_bka=on,optimize_join_buffer_size=off,table_elimination=on,extended_keys=off | |

| performance_schema | OFF | |

| performance_schema_events_waits_history_long_size | 10000 | |

| performance_schema_events_waits_history_size | 10 | |

| performance_schema_max_cond_classes | 80 | |

| performance_schema_max_cond_instances | 1000 | |

| performance_schema_max_file_classes | 50 | |

| performance_schema_max_file_handles | 32768 | |

| performance_schema_max_file_instances | 10000 | |

| performance_schema_max_mutex_classes | 200 | |

| performance_schema_max_mutex_instances | 1000000 | |

| performance_schema_max_rwlock_classes | 30 | |

| performance_schema_max_rwlock_instances | 1000000 | |

| performance_schema_max_table_handles | 100000 | |

| performance_schema_max_table_instances | 50000 | |

| performance_schema_max_thread_classes | 50 | |

| performance_schema_max_thread_instances | 1000 | |

| pid_file | /var/run/mysqld/mysqld.pid | |

| plugin_dir | /usr/lib/mysql/plugin/ | |

| plugin_maturity | unknown | |

| port | 3306 | |

| preload_buffer_size | 32768 | |

| profiling | OFF | |

| profiling_history_size | 15 | |

| progress_report_time | 56 | |

| protocol_version | 10 | |

| query_alloc_block_size | 8192 | |

| query_cache_limit | 131072 | |

| query_cache_min_res_unit | 4096 | |

| query_cache_size | 33554432 | |

| query_cache_strip_comments | OFF | |

| query_cache_type | ON | |

| query_cache_wlock_invalidate | OFF | |

| query_prealloc_size | 8192 | |

| range_alloc_block_size | 4096 | |

| read_buffer_size | 1048576 | |

| read_only | ON | |

| read_rnd_buffer_size | 524288 | |

| relay_log | ||

| relay_log_index | ||

| relay_log_info_file | relay-log.info | |

| relay_log_purge | ON | |

| relay_log_recovery | OFF | |

| relay_log_space_limit | 0 | |

| replicate_annotate_row_events | OFF | |

| replicate_do_db | ||

| replicate_do_table | ||

| replicate_events_marked_for_skip | replicate | |

| replicate_ignore_db | ||

| replicate_ignore_table | peoplesforum.cache | |

| replicate_wild_do_table | ||

| replicate_wild_ignore_table | peoplesforum.cache% | |

| report_host | greeneggs | |

| report_password | ||

| report_port | 3306 | |

| report_user | ||

| rowid_merge_buff_size | 8388608 | |

| rpl_recovery_rank | 0 | |

| secure_auth | OFF | |

| secure_file_priv | ||

| server_id | 12302 | |

| skip_external_locking | ON | |

| skip_name_resolve | ON | |

| skip_networking | OFF | |

| skip_show_database | OFF | |

| slave_compressed_protocol | OFF | |

| slave_exec_mode | STRICT | |

| slave_load_tmpdir | /tmp | |

| slave_max_allowed_packet | 1073741824 | |

| slave_net_timeout | 3600 | |

| slave_skip_errors | 1062 | |

| slave_sql_verify_checksum | ON | |

| slave_transaction_retries | 10 | |

| slave_type_conversions | ||

| slow_launch_time | 2 | |

| slow_query_log | ON | |

| slow_query_log_file | /var/log/mysql/mariadb-slow.log | |

| socket | /var/run/mysqld/mysqld.sock | |

| sort_buffer_size | 262144 | |

| sql_auto_is_null | OFF | |

| sql_big_selects | ON | |

| sql_big_tables | OFF | |

| sql_buffer_result | OFF | |

| sql_log_bin | ON | |

| sql_log_off | OFF | |

| sql_low_priority_updates | OFF | |

| sql_max_join_size | 18446744073709551615 | |

| sql_mode | NO_ENGINE_SUBSTITUTION | |

| sql_notes | ON | |

| sql_quote_show_create | ON | |

| sql_safe_updates | OFF | |

| sql_select_limit | 18446744073709551615 | |

| sql_slave_skip_counter | 0 | |

| sql_warnings | OFF | |

| ssl_ca | ||

| ssl_capath | ||

| ssl_cert | ||

| ssl_cipher | ||

| ssl_key | ||

| storage_engine | InnoDB | |

| stored_program_cache | 256 | |

| sync_binlog | 3 | |

| sync_frm | ON | |

| sync_master_info | 0 | |

| sync_relay_log | 0 | |

| sync_relay_log_info | 0 | |

| system_time_zone | UTC | |

| table_definition_cache | 400 | |

| table_open_cache | 1024 | |

| thread_cache_size | 128 | |

| thread_concurrency | 10 | |

| thread_handling | one-thread-per-connection | |

| thread_pool_idle_timeout | 60 | |

| thread_pool_max_threads | 500 | |

| thread_pool_oversubscribe | 3 | |

| thread_pool_size | 8 | |

| thread_pool_stall_limit | 500 | |

| thread_stack | 294912 | |

| time_format | %H:%i:%s | |

| time_zone | SYSTEM | |

| timed_mutexes | OFF | |

| tmp_table_size | 16777216 | |

| tmpdir | /tmp | |

| transaction_alloc_block_size | 8192 | |

| transaction_prealloc_size | 4096 | |

| tx_isolation | REPEATABLE-READ | |

| unique_checks | ON | |

| updatable_views_with_limit | YES | |

| userstat | OFF | |

| version | 5.5.32-MariaDB-1~wheezy-log | |

| version_comment | mariadb.org binary distribution | |

| version_compile_machine | x86_64 | |

| version_compile_os | debian-linux-gnu | |

| wait_timeout | 600 |
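The rows above can be parsed mechanically as a rough cross-check of the per-connection figure in the description. This is a minimal sketch that assumes the `| name | value | |` row layout of this paste.

- It sums only the per-thread variables from the description's formula that appear in this excerpt: `read_buffer_size`, `read_rnd_buffer_size`, `sort_buffer_size`, `thread_stack` and `tmp_table_size`.
- `join_buffer_size` and `binlog_cache_size` appear earlier in the dump and are not included.
- The helper names `parse_variables` and `per_thread_subtotal` are hypothetical.

```python
"""Sum the per-connection buffers visible in a pasted SHOW VARIABLES dump.

Assumes the mysql-client row layout used in this report, e.g.
"| sort_buffer_size | 262144 | |". Helper names are hypothetical.
"""

# Per-thread variables from the description's formula that appear in this
# excerpt. join_buffer_size and binlog_cache_size are listed earlier in the
# dump and are deliberately left out here.
PER_THREAD_VARS = (
    "read_buffer_size",
    "read_rnd_buffer_size",
    "sort_buffer_size",
    "thread_stack",
    "tmp_table_size",
)


def parse_variables(dump: str) -> dict[str, str]:
    """Map variable name -> raw value for each '| name | value |' row."""
    variables = {}
    for line in dump.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip blank separator lines and non-table text
        # Drop the outer pipes, then split the remaining cells.
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) >= 2 and cells[0]:
            variables[cells[0]] = cells[1]  # empty values stay as ""
    return variables


def per_thread_subtotal(variables: dict[str, str]) -> int:
    """Sum, in bytes, the per-thread buffer sizes present in `variables`."""
    return sum(int(variables[name]) for name in PER_THREAD_VARS if name in variables)


if __name__ == "__main__":
    sample = """
| read_buffer_size | 1048576 | |

| read_rnd_buffer_size | 524288 | |

| sort_buffer_size | 262144 | |

| thread_stack | 294912 | |

| tmp_table_size | 16777216 | |
"""
    print(per_thread_subtotal(parse_variables(sample)))  # 18907136
```

These five rows sum to 18,907,136 bytes. The description's per-connection figure of 19,070,976 bytes also includes `join_buffer_size` and `binlog_cache_size`, which account for the remaining 163,840 bytes.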

I'm planning to do a debug build from MDEV-572 and possibly run it under valgrind to narrow this down (if that doesn't bring the server to a total halt). Better suggestions are welcome.


Activity

| Field | Original Value | New Value |
|---|---|---|
| Assignee | | Elena Stepanova [ elenst ] |
| Environment | Debian Squeeze x86_64 Linux greeneggs.lentz.com.au 3.9.3-x86_64-linode33 #1 SMP Mon May 20 10:22:57 EDT 2013 x86_64 GNU/Linux | Debian Wheezy x86_64 Linux greeneggs.lentz.com.au 3.9.3-x86_64-linode33 #1 SMP Mon May 20 10:22:57 EDT 2013 x86_64 GNU/Linux |
| Attachment | | valgrind.mysqld.27336 [ 23400 ] |
| Attachment | | catinthehat_memory-day_no_indexmerge.png [ 23402 ] |
| Attachment | | psergey-mdev4974-xpl1.diff [ 23701 ] |
| Assignee | Elena Stepanova [ elenst ] | Sergei Petrunia [ psergey ] |
| Fix Version/s | | 5.5.35 [ 14000 ] |
| Priority | Major [ 3 ] | Critical [ 2 ] |
| Attachment | | drupal.sql [ 24500 ] |
| Attachment | | leaks-track.sql [ 24501 ] |
| Attachment | | leaks-track-allqueries.sql [ 24502 ] |
| Attachment | | allqueries.sql [ 24503 ] |
| Status | Open [ 1 ] | In Progress [ 3 ] |
| Attachment | | psergey-fix-mdev4954.diff [ 25600 ] |
| Assignee | Sergei Petrunia [ psergey ] | Oleksandr Byelkin [ sanja ] |
| Assignee | Oleksandr Byelkin [ sanja ] | Sergei Petrunia [ psergey ] |
| Resolution | | Fixed [ 1 ] |
| Status | In Progress [ 3 ] | Closed [ 6 ] |
| Workflow | defaullt [ 28729 ] | MariaDB v2 [ 43957 ] |
| Workflow | MariaDB v2 [ 43957 ] | MariaDB v3 [ 63200 ] |
| Workflow | MariaDB v3 [ 63200 ] | MariaDB v4 [ 147003 ] |

Hi Daniel,

Given that it's 5.5 (hence no multi-source replication there), what exactly do you mean by multi-master? Could you please specify the replication topology you are using?

And another question, for better understanding: how come you are using a Wheezy package on Squeeze?

Thanks.