Details

-

New Feature

-

Status: Stalled (View Workflow)

-

Major

-

Resolution: Unresolved

Description

to simplify the vector-based pipeline, we could remove the need for a user to generate vector embeddings for storing in the database.

instead a database server should be able to do it automatically and transparently behind the scenes

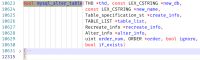

this task is about implementing a hook and the API that allows to add custom code that is invoked on INSERT (or UPDATE) and converts the data into an embedding. This implies that the original data is stored in the database too.

A possible SQL syntax could be based on the existing WITH PARSER clause.

One limitation of this approach — it leaves no place for chunking, as it strictly assumes one document = one embedding. Chunking can be done outside of the INSERT. With a stored procedure or a special LOAD DATA plugin (MDEV-28395)

A limitation of specifically WITH PARSER syntax — it doesn't cache generated embeddings. To actually save embeddings in the database we could go with a simple UDF function plugin. Another limitation — it doesn't allow combining steps into a single data-processing pipeline, that can be easily done with functions, like

INSERT INTO t1 (doc) VALUES (generate_embedding(pdf2text(wget('https://.....pdf')))); |

Functions seem to be more versatile, they can even do chunking (returning a new chunk on every call — which can be wrapped into SQL WHILE loop or a table function, when we'll have them). Function based approach can be implemented in steps:

- add a function plugin to generate embeddings, may be, few more for various LLMs and for helper transformations (like pdf2text, OCR, or chunking)

- how to pass secret parameters, like OpenAI key into such a function?

- introduce a concept of "expensive" function, if a stored generated column uses an "expensive" function, the server should avoid re-generating it whenever possible. ALTER/OPTIMIZE/etc should not regenerate it.

- extend this to virtual indexed columns. If an index can find a value by row id (normally indexes cannot do it, but mhnsw index, for example, can), then virtual indexed columns can reuse value and avoid regenerating them just as if they were stored — this would allow to reduce storage requirements by 66%

Attachments

Issue Links

- relates to

-

MDEV-32887 Vector Search

-

- Closed

-