Details

-

Bug

-

Status: Open (View Workflow)

-

Critical

-

Resolution: Unresolved

-

10.11.10

-

None

-

Ubuntu 22.04

Description

We have a cluster of 3 nodes, one of which gets the lions share of requests and runs some aggregation and reporting queries every morning. We have noticed that recent version (not sure when it really started to be an issue) seem to leak memory which is then terminated by OOM killer. We did tame OOM a bit but it genuinely runs out of memory.

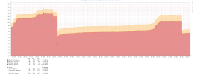

Looking at the graph below, we can see that the memory consumed each day is not given back and accumulates until it stops.

[server]

|

|

|

[mysqld]

|

|

|

basedir = /usr

|

bind-address = 0.0.0.0 |

bulk_insert_buffer_size = 16M

|

character-set-server = utf8mb4

|

collation-server = utf8mb4_general_ci

|

connect_timeout = 5 |

datadir = /var/lib/mysql

|

default_storage_engine = InnoDB

|

expire_logs_days = 3 |

group_concat_max_len = 1000000 |

|

|

innodb_buffer_pool_instances = 5 |

innodb_buffer_pool_size = 5G

|

innodb_change_buffer_max_size = 50 |

innodb_file_format = Barracuda

|

innodb_file_per_table = 1 |

innodb_flush_method = O_DIRECT

|

innodb_io_capacity = 400 |

innodb_large_prefix = on

|

innodb_log_buffer_size = 1G

|

innodb_log_file_size = 640M

|

innodb_log_files_in_group = 2 |

innodb_open_files = 400 |

innodb_read_io_threads = 16 |

innodb_thread_concurrency = 8 |

innodb_write_io_threads = 16 |

|

|

join_buffer_size = 2M

|

#key_buffer_size = 16M

|

lc-messages-dir = /usr/share/mysql

|

log_bin = /var/log/mysql/mariadb-bin

|

log_bin_index = /var/log/mysql/mariadb-bin.index

|

log_error = /var/log/mysql/error.log

|

# https://docs.rackspace.com/support/how-to/mariadb-error-log-configuration/ |

log_warnings = 1 |

|

|

max_allowed_packet = 256M

|

max_binlog_size = 512M

|

max_connections = 400 |

max_heap_table_size = 64M

|

myisam_recover_options = BACKUP

|

performance_schema = on

|

pid-file = /var/run/mysqld/mysqld.pid

|

port = 3306 |

query_cache_limit = 128K

|

query_cache_size = 64M

|

skip-external-locking

|

skip-name-resolve = 1 |

socket = /var/run/mysqld/mysqld.sock

|

sort_buffer_size = 4M

|

table_definition_cache = 16384 |

table_open_cache = 16384 |

thread_cache_size = 128 |

thread_cache_size = 8 |

thread_stack = 192K

|

tmp_table_size = 256M

|

tmpdir = /tmp

|

user = mysql

|

userstat = 1 |

wait_timeout = 600 |

|

|

[embedded]

|

|

|

[mariadb]

|

plugin_load_add = ha_federatedx

|

[mariadb-10.1] |

|

You can see that at 6am every day it takes a bite of memory and never releases it.

During that time, there are many rows updated. We use it for an inventory system where it crunches the previous days opening stock, then all the relevant transactions to produce a closing stock - ultimately though this is just a bunch of basic joins that inserts into a table. 1.9M rows per day and no fancy statements all just select / inserts. I would have thought that at no point should selects and inserts no matter how they were written would cause a memory leak over time.

Attachments

Issue Links

- relates to

-

MDEV-35469 Heap tables are calling mallocs to often

-

- Closed

-

-

MDEV-35750 Change MEM_ROOT allocation sizes to reduse calls to malloc() and avoid memory fragmentation

-

- Open

-