Details

-

Bug

-

Status: Closed (View Workflow)

-

Critical

-

Resolution: Not a Bug

-

10.3.22

Description

For query like this:

select * from t where (PK1, PK2, PK3) in ((v1,v2,v3), (v4,v5,v6), ...)

|

with long enough list of tuples for multi-column PK values there are 3 possible plans presented by quotes from ANALYZE FORMAT=JSON outputs):

1. Range scan, can be forced in some cases with FORCE INDEX(PRIMARY):

{

|

"query_block": {

|

"select_id": 1,

|

"r_loops": 1,

|

"r_total_time_ms": 19.184,

|

"table": {

|

"table_name": "t",

|

"access_type": "range",

|

"possible_keys": ["PRIMARY"],

|

"key": "PRIMARY",

|

"key_length": "74",

|

"used_key_parts": [

|

"PK1",

|

"PK2",

|

"PK3"

|

],

|

"r_loops": 1,

|

"rows": 1000,

|

"r_rows": 1000,

|

"r_total_time_ms": 15.165,

|

"filtered": 100,

|

"r_filtered": 100,

|

"attached_condition": "(t.PK1,t.PK2,t.PK3) in (<cache>((349,'*********','01')),<cache>((349,'*********','01')),)"

|

}

|

}

|

}

|

2. For the same IN list in other environment we end up with full table scan that is very slow:

{

|

"query_block": {

|

"select_id": 1,

|

"r_loops": 1,

|

"r_total_time_ms": 51060,

|

"table": {

|

"table_name": "t",

|

"access_type": "ALL",

|

"possible_keys": [

|

"PRIMARY",

|

...

|

],

|

"r_loops": 1,

|

"rows": 7957643,

|

"r_rows": 7.96e6,

|

"r_total_time_ms": 48324,

|

"filtered": 100,

|

"r_filtered": 0.0126,

|

"attached_condition": "(t.PK1,t.PK2,t.PK3) in (<cache>((***,'*******','01')),<cache>((***,'*******','01')),.......)"

|

}

|

}

|

}

|

3. Finally in other environment with very similar data for the same IN list we end up with semijoin optimization applied:

{

|

"query_block": {

|

"select_id": 1,

|

"r_loops": 1,

|

"r_total_time_ms": 11.565,

|

"duplicates_removal": {

|

"table": {

|

"table_name": "<derived3>",

|

"access_type": "ALL",

|

"r_loops": 1,

|

"rows": 1000,

|

"r_rows": 1000,

|

"r_total_time_ms": 0.1065,

|

"filtered": 100,

|

"r_filtered": 100,

|

"materialized": {

|

"query_block": {

|

"union_result": {

|

"table_name": "<unit3>",

|

"access_type": "ALL",

|

"r_loops": 0,

|

"r_rows": null,

|

"query_specifications": [

|

{

|

"query_block": {

|

"select_id": 3,

|

"table": {

|

"message": "No tables used"

|

}

|

}

|

}

|

]

|

}

|

}

|

}

|

},

|

"table": {

|

"table_name": "t",

|

"access_type": "eq_ref",

|

"possible_keys": [

|

"PRIMARY",

|

...

|

],

|

"key": "PRIMARY",

|

"key_length": "74",

|

"used_key_parts": [

|

"PK1",

|

"PK2",

|

"PK3"

|

],

|

"ref": ["tvc_0._col_1", "func", "func"],

|

"r_loops": 1000,

|

"rows": 1,

|

"r_rows": 0.998,

|

"r_total_time_ms": 7.7333,

|

"filtered": 100,

|

"r_filtered": 100,

|

"attached_condition": "t.PK2 = convert(tvc_0._col_2 using utf8) and t.PK3 = convert(tvc_0._col_3 using utf8)"

|

}

|

}

|

}

|

}

|

This plan is the fastest even comparing to range join, but there is no way to force it.

So, I think there is a bug when semijoin optimization is not used even when "range" is extended to full table scan as an alternative. I'd also like to have a way to force the best plan.

Attachments

Issue Links

- relates to

-

MDEV-20900 IN predicate to IN subquery conversion causes performance regression

-

- Closed

-

-

MDEV-21265 IN predicate conversion to IN subquery should be allowed for a broader set of datatype comparison

-

- Closed

-

Activity

| Field | Original Value | New Value |

|---|---|---|

| Priority | Major [ 3 ] | Critical [ 2 ] |

| Fix Version/s | 10.3 [ 22126 ] | |

| Assignee | Sergei Petrunia [ psergey ] |

| Labels | in-to-subquery |

| Link |

This issue relates to |

| Link |

This issue relates to |

| Attachment | a2.test [ 54105 ] |

| Status | Open [ 1 ] | In Progress [ 3 ] |

| Attachment | screenshot-1.png [ 54223 ] |

| Fix Version/s | N/A [ 14700 ] | |

| Fix Version/s | 10.3 [ 22126 ] | |

| Resolution | Not a Bug [ 6 ] | |

| Status | In Progress [ 3 ] | Closed [ 6 ] |

| Workflow | MariaDB v3 [ 113343 ] | MariaDB v4 [ 158356 ] |

| Zendesk Related Tickets | 184082 |

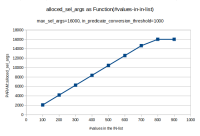

As far as I understand, the rewrite to semi-join depends purely on # of elements in the IN-list?