Details

- Bug

- Status: Closed

- Critical

- Resolution: Fixed

- 10.4.18

- None

- CentOS 7.8

Description

Hi,

I have a setup with 3 Galera nodes on MariaDB 10.4.18.

I have a blocking issue with MariaDB stability during network fluctuations (this usually happens when my client performs network interventions or has hardware issues on the network).

The issue is the following:

1. After a few ups and downs of the network on a single node, the Galera cluster goes into a complete freeze.

2. The node that lost its connection is OK, as it does not receive traffic anymore.

3. The other 2 nodes are accessible via a mysql connection with the root account, but when I check the process list in MariaDB I always see the following query hung in the "updating" state: DELETE FROM mysql.wsrep_cluster.

- I see other queries after this one, but they all have a lower time than "DELETE FROM mysql.wsrep_cluster", which makes me think that this query somehow locks the whole database and the other queries are waiting for it. Unfortunately, it never ends.
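The reasoning above (the statement with the highest time is probably the one everything else waits on) can be sketched as a small check over process list rows. This is only a heuristic illustration, not proof of a lock: the rows below are hypothetical sample data shaped like `SHOW FULL PROCESSLIST` output, and the function name `find_suspected_blocker` and the table `t` are assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


def find_suspected_blocker(rows: List[Row]) -> Tuple[Optional[Row], List[Row]]:
    """Return the longest-running statement and the younger statements behind it."""
    # Ignore idle connections, which have no statement text in the Info column.
    active = [r for r in rows if r.get("Info")]
    if not active:
        return None, []
    # The statement with the largest Time value has been running the longest.
    oldest = max(active, key=lambda r: r["Time"])
    waiting = [r for r in active if r is not oldest]
    return oldest, waiting


# Hypothetical rows; real values would come from SHOW FULL PROCESSLIST.
sample = [
    {"Id": 2, "Time": 840, "State": "updating", "Info": "DELETE FROM mysql.wsrep_cluster"},
    {"Id": 57, "Time": 812, "State": "Commit", "Info": "UPDATE t SET c = c + 1 WHERE id = 1"},
    {"Id": 58, "Time": 0, "State": "", "Info": None},
]

oldest, waiting = find_suspected_blocker(sample)
print("suspected blocker:", oldest["Info"])
print("statements queued behind it:", len(waiting))
```

A result like this only narrows down where to look; confirming that the younger statements are really blocked would need lock or thread-level diagnostics from the server.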

Logs analysis:

1. We do not see any slow log entry for the query that hangs

2. We do not see any error in MariaDB / Galera error log

In order to replicate this we perform the following test:

1. Start Galera on 3 nodes

2. We have a web service that connects to Galera, and we start a tool that sends queries continuously to the database

3. We perform successive ifup / ifdown at intervals of about 60 seconds on a single node

4. After 10-15 tries, the cluster hangs in the situation described above
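The interface toggling in step 3 could look roughly like the sketch below. It is not the attached script, whose contents are not shown here. The interface name `eth0`, the function name `flap_interface`, and the choice to wait the full interval after every single toggle are assumptions. The command runner is injectable so the loop can be dry-run without touching a real interface.

```python
import subprocess
import time
from typing import Callable, List


def flap_interface(
    iface: str,
    cycles: int,
    interval_s: float,
    run: Callable = subprocess.run,
    sleep: Callable = time.sleep,
) -> List[List[str]]:
    """Bring an interface down and up repeatedly, pausing after each toggle."""
    issued: List[List[str]] = []
    for _ in range(cycles):
        for action in ("ifdown", "ifup"):
            cmd = [action, iface]
            # check=True makes a failed ifdown/ifup raise instead of passing silently.
            run(cmd, check=True)
            issued.append(cmd)
            sleep(interval_s)
    return issued


# Dry run: record the commands instead of executing them (no root needed).
planned = flap_interface(
    "eth0", cycles=2, interval_s=0,
    run=lambda cmd, check: None,
    sleep=lambda seconds: None,
)
for cmd in planned:
    print(" ".join(cmd))
```

For a real test on one node, the defaults would be used, for example `flap_interface("eth0", cycles=15, interval_s=60)` run as root while the query tool is sending traffic.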

I'm attaching the script that we use for ifup / ifdown testing, and I'm also including the Galera configuration here:

#Galera Provider Configuration

wsrep_on=ON

wsrep_provider=/usr/lib64/galera-4/libgalera_smm.so

#Galera Cluster Configuration

wsrep_cluster_name="<EDITED>"

wsrep_cluster_address="gcomm://<EDITED>"

#Galera Synchronization Configuration

wsrep_sst_method=rsync

#Galera Node Configuration

wsrep_node_address="<EDITED>"

wsrep_node_name="<EDITED>"

wsrep_slave_threads = 8

wsrep_provider_options="gcache.size = 2G; gcache.page_size = 1G; gcs.fc_limit = 256; gcs.fc_factor = 0.99; cert.log_conflicts = ON;"

#read consistency

wsrep_sync_wait=3

wsrep_retry_autocommit = 1

wsrep_debug = 1

wsrep_log_conflicts = 1
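When comparing settings between the three nodes, it can help to split the `wsrep_provider_options` string into individual key/value pairs. The sketch below does this with plain string splitting. It is an illustration only and does not claim to match how the Galera provider parses the value; `parse_provider_options` is a hypothetical helper name.

```python
from typing import Dict


def parse_provider_options(value: str) -> Dict[str, str]:
    """Split a 'key = value; key = value;' string into a dictionary of strings."""
    options: Dict[str, str] = {}
    for item in value.split(";"):
        item = item.strip()
        if not item:
            continue  # skip the empty piece after the trailing semicolon
        key, _, val = item.partition("=")
        options[key.strip()] = val.strip()
    return options


# The option string from the configuration above.
opts = parse_provider_options(
    "gcache.size = 2G; gcache.page_size = 1G; gcs.fc_limit = 256; "
    "gcs.fc_factor = 0.99; cert.log_conflicts = ON;"
)
for name, setting in sorted(opts.items()):
    print(f"{name} = {setting}")
```

Running the same helper on the value from each node and comparing the resulting dictionaries would show any option that differs between nodes.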

Attachments

Issue Links

- duplicates: MDEV-26760 Galera hangs during concurrent updates (Closed)

- relates to: MDEV-23379 Deprecate and ignore options for InnoDB concurrency throttling (Closed)

Activity

| Field | Original Value | New Value |

|---|---|---|

| Priority | Blocker [ 1 ] | Major [ 3 ] |

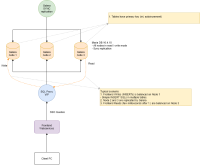

| Attachment | MariaDB valication diagram.png [ 58030 ] |

| Attachment | Galera Log - ETH-UP-Down-OK.txt [ 58031 ] |

| Attachment | MariaDB-server.cnf [ 58032 ] |

| Attachment | Galera Log - ETH-UP-Down-FAIL.txt [ 58033 ] |

| Attachment | MariaDB-Log.png [ 58034 ] |

| Attachment | galera-stability.php [ 58157 ] | |

| Attachment | galera-stability.sql [ 58158 ] | |

| Attachment | threads-tool.php [ 58159 ] |

| Attachment | MariaDB valication diagram.png [ 58030 ] |

| Attachment | MariaDB validation diagram.png [ 58160 ] |

| Assignee | Jan Lindström [ jplindst ] |

| Fix Version/s | 10.4 [ 22408 ] |

| Status | Open [ 1 ] | Confirmed [ 10101 ] |

| Priority | Major [ 3 ] | Critical [ 2 ] |

| Assignee | Jan Lindström [ jplindst ] | Mario Karuza [ mkaruza ] |

| Status | Confirmed [ 10101 ] | In Progress [ 3 ] |

| Assignee | Mario Karuza [ mkaruza ] | Jan Lindström [ jplindst ] |

| Status | In Progress [ 3 ] | In Review [ 10002 ] |

| issue.field.resolutiondate | 2021-09-30 10:58:57.0 | 2021-09-30 10:58:57.003 |

| Fix Version/s | 10.4.22 [ 26031 ] | |

| Fix Version/s | 10.5.13 [ 26026 ] | |

| Fix Version/s | 10.6.5 [ 26034 ] | |

| Fix Version/s | 10.4 [ 22408 ] | |

| Resolution | Fixed [ 1 ] | |

| Status | In Review [ 10002 ] | Closed [ 6 ] |

| Link | This issue relates to |

| Fix Version/s | 10.5.5 [ 24423 ] | |

| Fix Version/s | 10.5.13 [ 26026 ] | |

| Fix Version/s | 10.6.5 [ 26034 ] |

| Link | This issue duplicates |

| Fix Version/s | 10.5.13 [ 26026 ] | |

| Fix Version/s | 10.5.5 [ 24423 ] |

| Workflow | MariaDB v3 [ 122552 ] | MariaDB v4 [ 159379 ] |

I could not reproduce the issue using the given IP up/down script. I used sysbench to simulate the load on all nodes. It would be great if you could provide more details about the queries that are executing on the cluster nodes.