Details

Type: Task
Status: Open
Priority: Major
Resolution: Unresolved
None

Description

Problem description

Benchmarks show that Range-Locking mode experiences a lot of contention on the locktree's root node mutex.

This is because every lock acquisition proceeds as follows:

- Acquire the locktree's root node mutex.
- Compare the root node's range with the range we intend to lock.
- Typically, the ranges do not overlap, so we need to go to the left or right child.
- Acquire the mutex of the left (or right) child node.
- Possibly do a tree rotation.
- Release the root node mutex.

Then the process repeats for the child node, until we have either found the node whose range overlaps with the range we intend to lock, or found the place where the new node should be inserted.
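The descent described above is a hand-over-hand (lock-coupling) traversal. Below is a minimal C++ sketch of it under simplifying assumptions: each node holds a single integer range [lo, hi], no rotations are done, and the names `tree_node`, `locktree`, `acquire_range` and `root_lock_count` are hypothetical, not taken from the actual locktree code. The counter makes the problem explicit: every acquisition takes the root mutex, no matter which subtree it ends up in.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical simplified node: each node holds one locked range [lo, hi].
struct tree_node {
  int lo, hi;
  std::mutex mutex;
  std::unique_ptr<tree_node> left, right;
  tree_node(int l, int h) : lo(l), hi(h) {}
};

struct locktree {
  std::unique_ptr<tree_node> root;
  long root_lock_count = 0;  // number of times the root mutex was taken
};

// Hand-over-hand descent. Returns true if [lo, hi] was inserted, false if it
// overlaps a range that is already locked. No rotations are performed.
bool acquire_range(locktree &lt, int lo, int hi) {
  assert(lt.root != nullptr);  // sketch assumes a non-empty tree
  tree_node *cur = lt.root.get();
  cur->mutex.lock();     // every acquisition starts at the root mutex
  lt.root_lock_count++;  // safe: we hold the root mutex
  for (;;) {
    bool go_left = hi < cur->lo;
    bool go_right = lo > cur->hi;
    if (!go_left && !go_right) {  // ranges overlap: conflict
      cur->mutex.unlock();
      return false;
    }
    std::unique_ptr<tree_node> &child = go_left ? cur->left : cur->right;
    if (!child) {  // found the insertion point; parent is still locked
      child = std::make_unique<tree_node>(lo, hi);
      cur->mutex.unlock();
      return true;
    }
    child->mutex.lock();  // lock the child before releasing the parent
    tree_node *parent = cur;
    cur = child.get();
    parent->mutex.unlock();
  }
}
```

For example, with a root range of [50, 59], acquiring [10, 19] and [70, 79] succeeds, [15, 25] is rejected because it overlaps [10, 19], and `root_lock_count` ends up at 3: one root mutex acquisition per attempt.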

Proposed solution

The idea is that most lock acquisitions do not actually need to modify the root node. They could use an RCU (read-copy-update) mechanism to access it.

The tree will have a member variable:

    class locktree {
      bool root_rcu_enabled;
      ...
    };

The fast-path reader will do this:

    tree_node *get_child_with_rcu(lookup_key) {
      tree_node *res= nullptr;
      rcu_read_lock();
      if (locktree->root_rcu_enabled) {
        cmp = compare(lookup_key, locktree->root->key);
        if (cmp == LESS_THAN) {
          locktree->root->left_child->mutex_lock();
          res= locktree->root->left_child;
        }
        // if (cmp == GREATER_THAN) { same thing with right_child }
      }
      rcu_read_unlock();
      return res;
    }

If this function returns NULL, we need to touch the root node, so we retry using locking:

    tree_node *get_root_node() {
      // Stop the new readers from using the RCU
      root_rcu_enabled= false;

      // Wait until all RCU readers are gone
      rcu.synchronize_rcu();

      locktree->root->mutex_lock();

      // Here, we can make modifications to the root node

      // When we are done, release the mutex and enable the RCU
      locktree->root->mutex_unlock();
      root_rcu_enabled= true;
    }
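The reader and writer sides can be combined into a small runnable C++ sketch. It is not real RCU: `fake_rcu` is a hypothetical stand-in that counts readers in one shared atomic. It gives the guarantee the design relies on (`synchronize()` returns only after every reader inside a read-side section has left), but the shared counter is itself a contended cache line, which a real RCU implementation avoids. Keys are plain integers instead of ranges, and `modify_root` is a hypothetical name for the slow path. One deliberate difference from the pseudocode above: the slow path takes the root mutex before clearing `root_rcu_enabled` and sets it again before unlocking. With the original order, two concurrent slow-path callers could interleave so that the first re-enables the fast path while the second is still modifying the root.

```cpp
#include <atomic>
#include <cassert>
#include <mutex>
#include <thread>

// Hypothetical stand-in for RCU, NOT real RCU: one shared reader counter.
// synchronize() returns only once no reader is inside a read-side section.
struct fake_rcu {
  std::atomic<int> readers{0};
  void read_lock() { readers.fetch_add(1); }
  void read_unlock() { readers.fetch_sub(1); }
  void synchronize() {
    while (readers.load() != 0) std::this_thread::yield();
  }
};

struct tree_node {
  int key = 0;  // simplified: a point key instead of a range
  std::mutex mutex;
  tree_node *left_child = nullptr;
  tree_node *right_child = nullptr;
};

struct locktree {
  tree_node *root = nullptr;
  std::atomic<bool> root_rcu_enabled{true};
  fake_rcu rcu;
};

// Fast path. Returns the child to descend into, with its mutex held, or
// nullptr if the caller must fall back to the locked path (fast path
// disabled, key equal to the root key, or no child on that side).
tree_node *get_child_with_rcu(locktree &lt, int lookup_key) {
  tree_node *res = nullptr;
  lt.rcu.read_lock();
  if (lt.root_rcu_enabled.load()) {
    tree_node *root = lt.root;
    tree_node *child = nullptr;
    if (lookup_key < root->key)
      child = root->left_child;
    else if (lookup_key > root->key)
      child = root->right_child;
    if (child != nullptr) {
      child->mutex.lock();
      res = child;
    }
  }
  lt.rcu.read_unlock();
  return res;
}

// Slow path (hypothetical name). The root mutex is taken first, so only one
// slow-path caller at a time toggles root_rcu_enabled. Fast-path readers never
// take the root mutex, so waiting for them while holding it is safe as long as
// the caller holds no other node mutex. All atomics use the default seq_cst
// ordering: a reader either sees the flag cleared or is seen by synchronize().
template <class Modify>
void modify_root(locktree &lt, Modify modify) {
  std::lock_guard<std::mutex> guard(lt.root->mutex);
  lt.root_rcu_enabled.store(false);  // stop new readers from using the fast path
  lt.rcu.synchronize();              // wait until readers already inside are gone
  modify(*lt.root);                  // no fast-path reader can see the root now
  lt.root_rcu_enabled.store(true);   // re-enable before releasing the mutex
}
```

A non-null result from `get_child_with_rcu` leaves that child's mutex locked for the caller, who continues the hand-over-hand descent from there; `nullptr` means the caller falls back to the locked path.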

Other details

It is not clear if/how this approach could be extended to non-root nodes.

Attachments

Issue Links

- relates to
  - MDEV-15603 Gap Lock support in MyRocks (Stalled)
  - MDEV-22171 Range Locking: explore other data structures (Open)

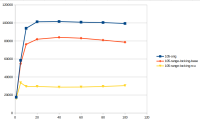

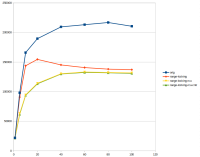

c5.2xlarge:

    ubuntu@ip-172-31-17-220:~/range-locking-benchmark$ ./summarize-result.sh 105-orig
    SERVER_DIR=mysql-5.6-orig
    SYSBENCH_TEST=oltp_write_only.lua
    Threads, QPS
    1 17531.27
    5 58527.50
    10 94065.11
    20 101323.16
    40 101602.47
    60 100858.54
    80 100453.83
    100 99408.72
    ubuntu@ip-172-31-17-220:~/range-locking-benchmark$ ./summarize-result.sh 105-range-locking-base
    SERVER_DIR=mysql-5.6-range-locking
    SYSBENCH_TEST=oltp_write_only.lua
    Threads, QPS
    1 17777.90
    5 54689.06
    10 76271.56
    20 81926.33
    40 84046.30
    60 83086.25
    80 80931.29
    100 78644.12
    ubuntu@ip-172-31-17-220:~/range-locking-benchmark$ ./summarize-result.sh 104-rcu-rel
    SERVER_DIR=mysql-5.6-range-locking-rcu
    SYSBENCH_TEST=oltp_write_only.lua
    Threads, QPS
    1 16099.38
    5 33605.91
    10 29440.77
    20 29464.23
    40 28729.21
    60 28841.09
    80 29461.01
    100 30389.78
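The three runs are easier to compare as ratios. The small C++ sketch below only copies the QPS numbers from the output above (nothing new is measured) and computes, for each build, the thread count with the highest QPS and the relative throughput of two builds at a given thread count. The names `kThreads`, `kQps`, `peak_index` and `ratio` are illustrative.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// QPS values copied from the summarize-result.sh output above
// (c5.2xlarge, oltp_write_only.lua). Row 0: mysql-5.6-orig,
// row 1: mysql-5.6-range-locking, row 2: mysql-5.6-range-locking-rcu.
constexpr std::array<int, 8> kThreads = {1, 5, 10, 20, 40, 60, 80, 100};
constexpr std::array<std::array<double, 8>, 3> kQps = {{
    {17531.27, 58527.50, 94065.11, 101323.16, 101602.47, 100858.54, 100453.83, 99408.72},
    {17777.90, 54689.06, 76271.56, 81926.33, 84046.30, 83086.25, 80931.29, 78644.12},
    {16099.38, 33605.91, 29440.77, 29464.23, 28729.21, 28841.09, 29461.01, 30389.78},
}};

// Index (into kThreads) of the thread count with the highest QPS for one build.
std::size_t peak_index(const std::array<double, 8> &qps) {
  std::size_t best = 0;
  for (std::size_t i = 1; i < qps.size(); ++i)
    if (qps[i] > qps[best]) best = i;
  return best;
}

// QPS of build a divided by QPS of build b, at kThreads[i].
double ratio(std::size_t a, std::size_t b, std::size_t i) {
  assert(a < kQps.size() && b < kQps.size() && i < kThreads.size());
  return kQps[a][i] / kQps[b][i];
}
```

With these numbers, mysql-5.6-orig and mysql-5.6-range-locking both peak at 40 threads, where range locking delivers about 0.827 of the original QPS (84046.30 / 101602.47). The RCU build peaks at 5 threads, and at 40 threads it delivers about 0.342 of the range-locking baseline (28729.21 / 84046.30).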