Details

Type: Bug
Status: Closed
Priority: Major
Resolution: Won't Fix
Affects Version/s: 10.3.17, 10.3.20
None

Environment

15.11.2019:
CentOS Linux release 7.6.1810 (Core)
Kernel: 3.10.0-957.27.2.el7.x86_64 #1 SMP Mon Jul 29 17:46:05 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux
MariaDB Server 10.3.17

18.11.2019:
CentOS Linux release 7.7.1908 (Core)
Kernel: Linux dbslave-02.sb.inopla.gmbh 3.10.0-1062.4.3.el7.x86_64 #1 SMP Wed Nov 13 23:58:53 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux
MariaDB Server 10.3.20

Description

On 15.11.2019, the MariaDB "mysqld" service crashed with signal 11 while executing the following SELECT query (see the attachment error_15112019.log).

As can also be seen in the log, the service completed the recovery process successfully and then shut itself down automatically ("Shutdown").

Could you please help us determine the exact cause of the error that made the service crash with "signal 11"?

Attachments

Issue Links

- relates to: MDEV-21134 Crash with partitioned table, PARTITION syntax, and index_merge (Closed)

Activity

An error log from today ("mariadb_slave_error_log_18112019.txt") was attached.

Please provide the output of

SHOW CREATE TABLE cdr_acd;
SHOW INDEX IN cdr_acd;

where cdr_acd is the table from error_15112019.log.

You can obfuscate the column names, default values, etc., but please keep them consistent with the query: after obfuscation we need to see an unambiguous relation between the fields used in the query and the table columns.

Please also paste or attach your config file(s).

Output of "SHOW CREATE TABLE cdr_acd":

CREATE TABLE `cdr_acd` (
  `id` int(11) unsigned NOT NULL AUTO_INCREMENT,
  `kid` int(11) unsigned NOT NULL,
  `cdr_in_id` int(11) unsigned NOT NULL,
  `agenten_id` int(11) unsigned NOT NULL,
  `skill_group_id` int(11) unsigned DEFAULT NULL,
  `direction` enum('inbound','outbound') NOT NULL,
  `from_number` varchar(25) NOT NULL,
  `to_number` varchar(25) NOT NULL,
  `service` varchar(55) NOT NULL,
  `duration` mediumint(7) unsigned NOT NULL,
  `duration_bill` mediumint(7) unsigned NOT NULL,
  `duration_ringing` mediumint(7) unsigned NOT NULL,
  `success` tinyint(1) unsigned NOT NULL,
  `anonymize` tinyint(1) unsigned NOT NULL DEFAULT 0,
  `date_time` datetime NOT NULL,
  `month` tinyint(2) unsigned NOT NULL DEFAULT 0,
  `created` datetime NOT NULL DEFAULT current_timestamp(),
  PRIMARY KEY (`id`,`month`) USING BTREE,
  KEY `kid` (`kid`),
  KEY `skill_group_id` (`skill_group_id`),
  KEY `cdr_in_id` (`cdr_in_id`),
  KEY `agenten_id` (`agenten_id`),
  KEY `from_number` (`from_number`),
  KEY `to_number` (`to_number`),
  KEY `direction` (`direction`),
  KEY `date_time` (`date_time`),
  KEY `created` (`created`),
  KEY `service` (`service`),
  KEY `anonymize` (`anonymize`)
) ENGINE=InnoDB AUTO_INCREMENT=XXX DEFAULT CHARSET=latin1
PARTITION BY LIST (`month`)
(PARTITION `p0` VALUES IN (0) ENGINE = InnoDB,
 PARTITION `p1` VALUES IN (1) ENGINE = InnoDB,
 PARTITION `p2` VALUES IN (2) ENGINE = InnoDB,
 PARTITION `p3` VALUES IN (3) ENGINE = InnoDB,
 PARTITION `p4` VALUES IN (4) ENGINE = InnoDB,
 PARTITION `p5` VALUES IN (5) ENGINE = InnoDB,
 PARTITION `p6` VALUES IN (6) ENGINE = InnoDB,
 PARTITION `p7` VALUES IN (7) ENGINE = InnoDB,
 PARTITION `p8` VALUES IN (8) ENGINE = InnoDB,
 PARTITION `p9` VALUES IN (9) ENGINE = InnoDB,
 PARTITION `p10` VALUES IN (10) ENGINE = InnoDB,
 PARTITION `p11` VALUES IN (11) ENGINE = InnoDB,
 PARTITION `p12` VALUES IN (12) ENGINE = InnoDB)
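With LIST partitioning, each partition only holds the rows whose `month` value appears in its VALUES IN list, so on this table an explicit PARTITION(p11) clause restricts the read to rows with month = 11. The C++ sketch below models that lookup for the layout above; the struct and function names are hypothetical and are not part of the MariaDB source.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Simplified model of PARTITION BY LIST (`month`): every partition owns an
// explicit VALUES IN (...) list. Illustration only, not server code.
struct ListPartition {
  std::string name;
  std::vector<int> values;
};

// Builds the layout from the DDL above: p0..p12, each owning one month value.
std::vector<ListPartition> cdr_acd_partitions() {
  std::vector<ListPartition> parts;
  for (int m = 0; m <= 12; ++m)
    parts.push_back({"p" + std::to_string(m), {m}});
  return parts;
}

// Returns the partition that stores rows with the given `month` value, or
// std::nullopt when no VALUES IN list contains it (the server rejects such
// rows instead of storing them anywhere).
std::optional<std::string> partition_for(const std::vector<ListPartition>& parts,
                                         int month) {
  for (const auto& p : parts)
    for (int v : p.values)
      if (v == month) return p.name;
  return std::nullopt;
}
```

For example, `partition_for(cdr_acd_partitions(), 11)` yields "p11", while a value of 13 has no partition at all.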

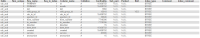

Output of "SHOW INDEX IN cdr_acd": see the attached image file "show_index.png".

And finally, the two configuration files from the master ("server_master.cnf") and slave ("server_slave.cnf") servers.

Dear Mrs. Stepanova, can you tell us anything about the cause of the error? Did your investigation reveal anything? I have attached new error logs ("error_master_21112019.log" / "error_slave_21112019.log") to the ticket. Does MariaDB 10.3.x have problems with table partitioning and with this query on a partitioned table? We need to be able to use MariaDB securely and reliably. Thanks for your support.

Looking at the stack traces provided:

stack_bottom = 0x7f7306156d00 thread_stack 0x30000
/usr/sbin/mysqld(my_print_stacktrace+0x2e)[0x559f5f74193e]
/usr/sbin/mysqld(handle_fatal_signal+0x30f)[0x559f5f1e12af]
/lib64/libpthread.so.0(+0xf5d0)[0x7fbe856c05d0]
/usr/sbin/mysqld(+0xcb09d7)[0x559f5f7089d7]
/usr/sbin/mysqld(+0xcba5da)[0x559f5f7125da]
/usr/sbin/mysqld(_ZN7handler7ha_openEP5TABLEPKcijP11st_mem_rootP4ListI6StringE+0x47)[0x559f5f1e5d47]
/usr/sbin/mysqld(+0xcb40e9)[0x559f5f70c0e9]
/usr/sbin/mysqld(_ZN18QUICK_RANGE_SELECT20init_ror_merged_scanEbP11st_mem_root+0x85)[0x559f5f2ef955]
/usr/sbin/mysqld(_ZN26QUICK_ROR_INTERSECT_SELECT20init_ror_merged_scanEbP11st_mem_root+0x7d)[0x559f5f2efb6d]
/usr/sbin/mysqld(_ZN26QUICK_ROR_INTERSECT_SELECT5resetEv+0x5f)[0x559f5f2f095f]
/usr/sbin/mysqld(_Z21join_init_read_recordP13st_join_table+0x48)[0x559f5f04bd18]
/usr/sbin/mysqld(_Z10sub_selectP4JOINP13st_join_tableb+0x169)[0x559f5f03b5c9]
/usr/sbin/mysqld(_ZN4JOIN10exec_innerEv+0xa7b)[0x559f5f05d2eb]
/usr/sbin/mysqld(_ZN4JOIN4execEv+0x33)[0x559f5f05d503]
/usr/sbin/mysqld(_Z12mysql_selectP3THDP10TABLE_LISTjR4ListI4ItemEPS4_jP8st_orderS9_S7_S9_yP13select_resultP18st_select_lex_unitP13st_select_lex+0x11a)[0x559f5f05d65a]
/usr/sbin/mysqld(_Z13handle_selectP3THDP3LEXP13select_resultm+0x1cc)[0x559f5f05e16c]
/usr/sbin/mysqld(+0x4bcebc)[0x559f5ef14ebc]
/usr/sbin/mysqld(_Z21mysql_execute_commandP3THD+0x33f2)[0x559f5f006432]
/usr/sbin/mysqld(_Z11mysql_parseP3THDPcjP12Parser_statebb+0x22b)[0x559f5f00bc2b]
/usr/sbin/mysqld(_Z16dispatch_command19enum_server_commandP3THDPcjbb+0x1c9f)[0x559f5f00e80f]
/usr/sbin/mysqld(_Z10do_commandP3THD+0x13e)[0x559f5f00f74e]
/usr/sbin/mysqld(_Z24do_handle_one_connectionP7CONNECT+0x221)[0x559f5f0e2f61]
/usr/sbin/mysqld(handle_one_connection+0x3d)[0x559f5f0e302d]
/usr/sbin/mysqld(+0xc9c85d)[0x559f5f6f485d]
/lib64/libpthread.so.0(+0x7dd5)[0x7fbe856b8dd5]


The crash happens while the index_merge_intersect strategy is being initialized: it is setting up the storage engine to do a ROR (rowid-ordered retrieval) scan.
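The idea behind a ROR index_merge intersection is, roughly, that every participating range scan returns rows in rowid order (for InnoDB, primary-key order), so the rows matching all index conditions can be found with a merge-style walk over the sorted streams, without sorting. The following C++ sketch shows only that idea over in-memory rowid lists; `RowId` and `ror_intersect` are hypothetical names, and the code does not mirror how QUICK_ROR_INTERSECT_SELECT is actually implemented.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using RowId = unsigned long long;  // stand-in for a handler rowid ("ref")

// Intersects rowid streams that are each sorted ascending: repeatedly advance
// every stream to the largest current head; when all heads agree, that rowid
// satisfies every index condition and is emitted.
std::vector<RowId> ror_intersect(const std::vector<std::vector<RowId>>& scans) {
  std::vector<RowId> out;
  if (scans.empty()) return out;
  std::vector<std::size_t> pos(scans.size(), 0);
  while (true) {
    // Largest current head; stop as soon as any stream is exhausted.
    RowId target = 0;
    for (std::size_t s = 0; s < scans.size(); ++s) {
      if (pos[s] == scans[s].size()) return out;
      if (scans[s][pos[s]] > target) target = scans[s][pos[s]];
    }
    // Skip every stream forward to its first rowid >= target.
    bool all_equal = true;
    for (std::size_t s = 0; s < scans.size(); ++s) {
      while (pos[s] < scans[s].size() && scans[s][pos[s]] < target) ++pos[s];
      if (pos[s] == scans[s].size()) return out;
      if (scans[s][pos[s]] != target) all_equal = false;
    }
    if (all_equal) {
      out.push_back(target);
      for (auto& p : pos) ++p;  // consume the matched rowid in every stream
    }
  }
}
```

Because every stream only moves forward, each rowid is visited once, which is what makes this strategy attractive when each index condition alone matches many rows.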

The top functions have no debug info (and their addresses do not seem to make much sense?):

/usr/sbin/mysqld(+0xcb09d7)[0x559f5f7089d7]
/usr/sbin/mysqld(+0xcba5da)[0x559f5f7125da]
/usr/sbin/mysqld(_ZN7handler7ha_openEP5TABLEPKcijP11st_mem_rootP4ListI6StringE+0x47)[0x559f5f1e5d47]

|

But in another example, they do:

/lib64/libpthread.so.0(+0xf5f0)[0x7efcbb6345f0]
/usr/sbin/mysqld(+0xcbb9bf)[0x559aeff019bf]
/usr/sbin/mysqld(+0xcc75da)[0x559aeff0d5da]
/usr/sbin/mysqld(_ZN7handler7ha_openEP5TABLEPKcijP11st_mem_rootP4ListI6StringE+0x47)[0x559aef9de187]

|

These are:

0000000000cc7060 t _ZN12ha_partition4openEPKcij.part.258
    ^ cc75da is in this function
0000000000cc76a0 t _ZN12ha_partition4openEPKcij
0000000000cbb480 t _ZN12ha_partition4infoEj
    ^ cbb9bf is in this function
0000000000cbba30 t _ZN12ha_partition5resetEv

|

This makes sense: ha_partition::open() does call ha_partition::info(), and the crash happens somewhere inside it.
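The symbol mapping above amounts to "find the symbol with the greatest start address that is still at or below the crash offset". Assuming the `+0x...` offsets in the trace are relative to the load address of a position-independent mysqld (so they can be compared directly with `nm` addresses of the same binary), a C++ sketch could look like this; `SymbolTable` and `containing_symbol` are hypothetical names, and symbol sizes are ignored.

```cpp
#include <cassert>
#include <cstdint>
#include <iterator>
#include <map>
#include <optional>
#include <string>

// Start address -> symbol name, as listed by `nm` for the mysqld binary.
using SymbolTable = std::map<std::uint64_t, std::string>;

// Returns the symbol with the greatest start address <= offset, i.e. the
// function that most likely contains that offset. Because symbol sizes are
// ignored, an offset past the end of the last function is still attributed to it.
std::optional<std::string> containing_symbol(const SymbolTable& syms,
                                             std::uint64_t offset) {
  auto it = syms.upper_bound(offset);           // first symbol starting after offset
  if (it == syms.begin()) return std::nullopt;  // offset precedes every symbol
  return std::prev(it)->second;
}
```

Fed with the four `nm` lines above, 0xcc75da resolves to `_ZN12ha_partition4openEPKcij.part.258` and 0xcbb9bf to `_ZN12ha_partition4infoEj`.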

- Do you have the MariaDB-server-debuginfo package installed? It should make it possible to get more precise stack traces.

- If you re-run a query that caused a crash (search for "Query " in the error log to find it), does it always cause the crash, or are there cases where it runs successfully? (In other words, is there a query that reliably causes the crash? If so, this would help narrow down the problem.)

@Sergei Petrunia

The "MariaDB-server-debuginfo" package is currently not installed. We will install it tomorrow and provide you with more information from the error log.

With SQL queries of the following kind, we were able to provoke the crash several times in a test environment: several times in succession, sometimes with breaks in between, and also several times immediately after the "mysqld" service had restarted and completed recovery. During the test run, we generated a very large number of SQL queries.

SELECT COUNT(id) AS requests FROM api_log PARTITION(p11) WHERE kid='XXXX' AND auth_type='1' AND auth_id='XXXX' AND created >= DATE_SUB(NOW(), INTERVAL 60 MINUTE) LIMIT 1


In runs with many SQL queries without the "PARTITION (pXX)" clause, we could not provoke any crashes. The data was queried from the table at random.

SELECT COUNT(id) AS requests FROM api_log WHERE kid='XXXX' AND auth_type='1' AND auth_id='XXXX' AND created >= DATE_SUB(NOW(), INTERVAL 60 MINUTE) LIMIT 1


FYI, once you install the MariaDB-server-debuginfo package, the error log might still not contain any additional information. There is a bug in how MariaDB's signal handler tries to match the addresses of the crash stack with the addresses in the debuginfo package. See MDEV-20738 for more information about that bug.

In order to get a better stack trace, you might have to perform the following steps:

Install the debuginfo package, for example:

sudo yum install MariaDB-server-debuginfo

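Then enable core dumps, for example as shown below. The kernel settings are written through "sudo tee" because in "sudo echo ... > file" the redirection is performed by the unprivileged shell and fails unless that shell is already running as root. The systemd drop-in directory is created first in case it does not exist yet:

sudo mkdir /tmp/corefiles
sudo chmod 777 /tmp/corefiles
echo /tmp/corefiles/core | sudo tee /proc/sys/kernel/core_pattern
echo 1 | sudo tee /proc/sys/kernel/core_uses_pid
echo 2 | sudo tee /proc/sys/fs/suid_dumpable
sudo mkdir -p /etc/systemd/system/mariadb.service.d
sudo tee /etc/systemd/system/mariadb.service.d/limitcore.conf <<EOF
[Service]
LimitCORE=infinity
EOF
sudo systemctl daemon-reload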

https://mariadb.com/kb/en/library/enabling-core-dumps/

And then restart MariaDB:

sudo systemctl restart mariadb

At that point, if you are able to reproduce the crash, the core dump should be written to /tmp/corefiles.
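With the settings above, the core file name is derived from core_pattern; according to the Linux core(5) man page, if core_uses_pid is nonzero and the pattern does not contain %p, ".PID" is appended, which is why the files end up named like /tmp/corefiles/core.&lt;pid&gt;. The hypothetical helper below predicts that name; it is a sketch that only expands %p and %%, and deliberately ignores other specifiers and piped patterns starting with '|'.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Predicts the core file path for a crashing process from the values written
// to /proc/sys/kernel/core_pattern and core_uses_pid. Only %p (PID) and %%
// are expanded; any other %-specifier is copied through unchanged.
std::string expected_core_path(const std::string& pattern, bool core_uses_pid,
                               int pid) {
  std::string out;
  bool has_pid = false;
  for (std::size_t i = 0; i < pattern.size(); ++i) {
    if (pattern[i] == '%' && i + 1 < pattern.size()) {
      char spec = pattern[++i];
      if (spec == 'p') { out += std::to_string(pid); has_pid = true; continue; }
      if (spec == '%') { out += '%'; continue; }
      out += '%';
      out += spec;  // unhandled specifier: keep it verbatim
      continue;
    }
    out += pattern[i];
  }
  // core(5): with core_uses_pid nonzero and no %p in the pattern, ".PID" is appended.
  if (core_uses_pid && !has_pid) out += "." + std::to_string(pid);
  return out;
}
```

For the configuration above and a mysqld process with PID 20531, this gives /tmp/corefiles/core.20531.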

Back traces can be extracted from the core dump with:

sudo gdb --batch --eval-command="thread apply all bt full" /usr/sbin/mysqld ${path_to_core_file} > mysqld_full_bt_all_threads.txt

We provoked the crash with the following SQL query and obtained a core file:

SELECT COUNT(id) AS requests FROM api_log PARTITION(p11) WHERE kid='XXXX' AND auth_type='1' AND auth_id='XXXX' AND created >= DATE_SUB(NOW(), INTERVAL 60 MINUTE) LIMIT 1

The output was attached as "mysqld_full_bt_all_threads.txt", generated with:

gdb --batch --eval-command="thread apply all bt full" /usr/sbin/mysqld /tmp/corefiles/core.20531 > /root/mysqld_full_bt_all_threads.txt

The output from MariaDB 10.4.10 was attached as "mysqld_full_bt_all_threads_10.4.txt", generated with:

gdb --batch --eval-command="thread apply all bt full" /usr/sbin/mysqld /tmp/corefiles/core.26072 > /root/mysqld_full_bt_all_threads_10.4.txt

inopladbadmins, thanks for the extra info.

So, indeed, it crashes in:

#0 ha_partition::info (...) at /usr/src/debug/MariaDB-10.3.20/src_0/sql/ha_partition.cc:8251
#1 in ha_partition::open (...) at /usr/src/debug/MariaDB-10.3.20/src_0/sql/ha_partition.cc:3653
#2 in handler::ha_open (...) at /usr/src/debug/MariaDB-10.3.20/src_0/sql/handler.cc:2760
#3 in ha_partition::clone (...) at /usr/src/debug/MariaDB-10.3.20/src_0/sql/ha_partition.cc:3754

8246     stats.delete_length+= file->stats.delete_length;
8247     if (file->stats.check_time > stats.check_time)
8248       stats.check_time= file->stats.check_time;
8249     stats.checksum+= file->stats.checksum;
8250   }
> 8251   if (stats.records && stats.records < 2 &&
8252       !(m_file[0]->ha_table_flags() & HA_STATS_RECORDS_IS_EXACT))
8253     stats.records= 2;
8254   if (stats.records > 0)
8255     stats.mean_rec_length= (ulong) (stats.data_file_length / stats.records);

Line 8251 is the one marked with ">".

The *this pointer is valid, so the only way this could crash would be that m_file[0] is invalid?

Other notes:

- We crash in ha_partition::info() when it is called for the clone.

- The query and the relevant parts of the DDL are below.

- Partition pruning doesn't give us anything here (but note the PARTITION syntax).

- It is a 2- or 3-way ROR index_merge.

SELECT COUNT(id) AS requests
FROM api_log PARTITION(p11)
WHERE
  kid='XXXX' AND
  auth_type='1' AND
  auth_id='XXXX' AND
  created >= DATE_SUB(NOW(), INTERVAL 60 MINUTE)
LIMIT 1

CREATE TABLE `cdr_acd` (
  `id` int(11) unsigned NOT NULL AUTO_INCREMENT,
  `kid` int(11) unsigned NOT NULL,
  -- no auth_type or auth_id fields provided. But they should be there, and
  -- either one or both of them are indexed and participate in index_merge.
  `created` datetime NOT NULL DEFAULT current_timestamp(),
  PRIMARY KEY (`id`,`month`) USING BTREE,
  KEY `kid` (`kid`),
  ...
PARTITION BY LIST (`month`)
(PARTITION `p0` VALUES IN (0) ENGINE = InnoDB,
 PARTITION `p1` VALUES IN (1) ENGINE = InnoDB,
 ...
);

|

OK, I have found a way this could go wrong:

for (i= bitmap_get_first_set(&m_part_info->read_partitions);
     i < m_tot_parts;
     i= bitmap_get_next_set(&m_part_info->read_partitions, i))
{
  file= m_file[i];
  file->info(HA_STATUS_VARIABLE | no_lock_flag | extra_var_flag);
  stats.records+= file->stats.records;
  stats.deleted+= file->stats.deleted;
  stats.data_file_length+= file->stats.data_file_length;
  stats.index_file_length+= file->stats.index_file_length;
  stats.delete_length+= file->stats.delete_length;
  if (file->stats.check_time > stats.check_time)
    stats.check_time= file->stats.check_time;
  stats.checksum+= file->stats.checksum;
}
if (stats.records && stats.records < 2 &&
    !(m_file[0]->ha_table_flags() & HA_STATS_RECORDS_IS_EXACT))
  stats.records= 2;

|

Note that the loop above only accesses m_file[i] if bit i is set in m_part_info->read_partitions. If it is not set, then regular ha_partition objects will have m_file[i] pointing to a ha_innobase object for which open() has not been called (but one can still call ha_table_flags() on it).

A ha_partition object that is the result of a clone operation, however, will have m_file[i] == NULL for such partitions. This is how we crash when we call ha_table_flags() on m_file[0].
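A simplified model of the failure described above, assuming 13 partitions of which only p11 is selected with PARTITION(p11). The types and `info_is_safe` are hypothetical; they mimic only the loop over read_partitions and the final m_file[0] check (which short-circuit evaluation reaches only when the summed record count is exactly 1), not the real handler interface.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a per-partition handler such as ha_innobase.
struct PartHandler {
  unsigned long long records;
};

constexpr std::size_t kParts = 13;  // p0..p12

// Models the tail of ha_partition::info(): only partitions in read_partitions
// are visited in the loop, but m_file[0] is dereferenced whenever the summed
// record count is 1. Returns false where the real code would dereference a
// null m_file[0], which is the reported crash.
bool info_is_safe(const std::vector<PartHandler*>& m_file,
                  const std::bitset<kParts>& read_partitions) {
  unsigned long long records = 0;
  for (std::size_t i = 0; i < kParts; ++i)
    if (read_partitions.test(i)) records += m_file[i]->records;
  if (records && records < 2)     // the condition at line 8251
    return m_file[0] != nullptr;  // real code calls m_file[0]->ha_table_flags()
  return true;
}
```

In this model a clone whose m_file array holds only the handler for p11 is unsafe exactly when that partition reports one row, while a regular object (all pointers set, even for unopened partitions) is always safe.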

One thing that is not clear is how we managed to pick an index_merge plan for a query for which stats.records==1.

This code looks odd:

if (stats.records && stats.records < 2 &&

This checks for stats.records==1. It is old code from the Sun era (2008): https://github.com/mariadb/server/commit/dc9c5440e357b1afde67ea43ad3e626835e55896
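Because stats.records is an unsigned row count, `stats.records && stats.records < 2` holds for exactly one value, 1. A trivial C++ check of that equivalence, using an assumed unsigned 64-bit stand-in for ha_rows:

```cpp
#include <cassert>
#include <cstdint>

using ha_rows_like = std::uint64_t;  // assumed stand-in for the unsigned ha_rows type

// The condition as written in ha_partition::info().
bool as_written(ha_rows_like records) { return records && records < 2; }

// The equivalent, more direct form.
bool equals_one(ha_rows_like records) { return records == 1; }
```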

OK, this particular crash can be avoided by this change:

-    !(m_file[0]->ha_table_flags() & HA_STATS_RECORDS_IS_EXACT))
+    !(m_file_sample ? (m_file_sample->ha_table_flags() & HA_STATS_RECORDS_IS_EXACT) : 0))

But you will also want to have MDEV-21134 fixed; otherwise there is a high risk of hitting it.
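A small model of the patched condition: when m_file_sample is null, the row count is treated as inexact instead of dereferencing a null pointer. `SampleHandler`, `adjust_records` and the bit value of `kStatsRecordsIsExact` are hypothetical stand-ins chosen for illustration; the real HA_STATS_RECORDS_IS_EXACT flag is defined in the server headers.

```cpp
#include <cassert>

// Hypothetical stand-in for a partition handler; only its table flags matter.
struct SampleHandler {
  unsigned long long table_flags;
};

// Arbitrary bit used for illustration only; not the server's actual value.
constexpr unsigned long long kStatsRecordsIsExact = 1ULL << 0;

// Mirrors the patched check: a count of exactly 1 is bumped to 2 unless the
// sample handler reports exact counts; a null sample is treated as "not exact".
unsigned long long adjust_records(unsigned long long records,
                                  const SampleHandler* m_file_sample) {
  if (records && records < 2 &&
      !(m_file_sample ? (m_file_sample->table_flags & kStatsRecordsIsExact) : 0))
    records = 2;
  return records;
}
```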

I made the priority equal for the related bugs (also, this one does not have a test case).

Dear Mr. Byelkin,

do you already have a release date for the bug fix?

Will a test case be created internally once the bug fix has been implemented?

Yes, we create or rework (simplify, rewrite, and so on) test cases for bugs when we fix them. But in any case we prefer to have something to start with (I mean, a way to repeat the bug); here we have to guess. Sergey tried and failed to create a repeatable test case, so I think it is not trivial, and I cannot predict how it will go. Also, the bug is not first in the queue (there is a way to move it to the front, but it is not raising the priority; rather, it is becoming a paying customer).

So, taking all of the above into account, I cannot predict the version, sorry.

Kind regards,

Oleksandr Byelkin

Can we help you in any way? This has high priority for us.

The https://mariadb.com/kb/en/library/mariadb-community-bug-reporting/ page is a guide to writing a good bug report. In this particular case, a repeatable test case would be really helpful: from the stack trace we can see and guess what is going wrong, but we still cannot easily guess what leads to that state (we will eventually, of course).

Also, your bug report is quite recent, so it is fairly far back in the queue (you can check this by sorting my bugs ("assignee = sanja" in the advanced search) by priority and date in JIRA; unlike Oracle, we do not keep bug reports secret), so you have time.

The following test case fails with the same stack trace as the one reported here.

I'm not quite sure it represents the exact same use case, as it involves a HEAP table, while the reported ones were InnoDB.

Still, the resemblance is remarkable.

If it is the same problem, then it apparently stopped happening on 10.5. Hopefully affected instances have long been upgraded and don't encounter it anymore.

--source include/have_partition.inc

CREATE TABLE t (
  id INT,
  a datetime,
  b time,
  KEY(a) USING BTREE,
  KEY(b) USING BTREE
) ENGINE=HEAP PARTITION BY key (id) partitions 2;

INSERT INTO t VALUES
(1, '0000-00-00', '00:00:00'),
(2, '0000-00-00', '00:00:00'),
(3, '2017-01-01', '00:00:00'),
(4, '1986-01-01', '00:00:00'),
(5, '2012-01-01', '00:00:00');

SELECT * FROM t WHERE a IS NULL OR b IS NULL;

# Cleanup
DROP TABLE t;

10.4 f5dceafd

#3 <signal handler called>
#4 0x000055d0a3a6e8d4 in ha_partition::open (this=0x621000093928, name=0x603000014328 "./test/t#P#p0", mode=33, test_if_locked=2) at /data/src/10.4/sql/ha_partition.cc:3569
#5 0x000055d0a328ff48 in handler::ha_open (this=0x621000093928, table_arg=0x62000003c088, name=0x603000014328 "./test/t#P#p0", mode=33, test_if_locked=2, mem_root=0x0, partitions_to_open=0x0) at /data/src/10.4/sql/handler.cc:2808
#6 0x000055d0a3bf5aaa in ha_heap::clone (this=0x61a00002dca8, name=0x7f54366a9960 "./test/t#P#p0", mem_root=0x61100002c948) at /data/src/10.4/storage/heap/ha_heap.cc:153
#7 0x000055d0a3a6fb4b in ha_partition::open (this=0x621000092798, name=0x61b00003a938 "./test/t", mode=33, test_if_locked=1026) at /data/src/10.4/sql/ha_partition.cc:3664
#8 0x000055d0a328ff48 in handler::ha_open (this=0x621000092798, table_arg=0x62000003c088, name=0x61b00003a938 "./test/t", mode=33, test_if_locked=1026, mem_root=0x0, partitions_to_open=0x0) at /data/src/10.4/sql/handler.cc:2808
#9 0x000055d0a3a70fc5 in ha_partition::clone (this=0x61d0002058a8, name=0x61b00003a938 "./test/t", mem_root=0x61100002c948) at /data/src/10.4/sql/ha_partition.cc:3844
#10 0x000055d0a3635b4c in QUICK_RANGE_SELECT::init_ror_merged_scan (this=0x613000050d80, reuse_handler=false, local_alloc=0x61100002c948) at /data/src/10.4/sql/opt_range.cc:1523
#11 0x000055d0a36383b6 in QUICK_ROR_UNION_SELECT::reset (this=0x61100002c8c0) at /data/src/10.4/sql/opt_range.cc:1803
#12 0x000055d0a2c2ad50 in join_init_read_record (tab=0x62b0000662a8) at /data/src/10.4/sql/sql_select.cc:21820
#13 0x000055d0a2c24323 in sub_select (join=0x62b000063f00, join_tab=0x62b0000662a8, end_of_records=false) at /data/src/10.4/sql/sql_select.cc:20886
#14 0x000055d0a2c2232e in do_select (join=0x62b000063f00, procedure=0x0) at /data/src/10.4/sql/sql_select.cc:20412
#15 0x000055d0a2bb11bd in JOIN::exec_inner (this=0x62b000063f00) at /data/src/10.4/sql/sql_select.cc:4605
#16 0x000055d0a2bae7c4 in JOIN::exec (this=0x62b000063f00) at /data/src/10.4/sql/sql_select.cc:4387
#17 0x000055d0a2bb2856 in mysql_select (thd=0x62b00005b208, tables=0x62b000062938, wild_num=1, fields=..., conds=0x62b000063448, og_num=0, order=0x0, group=0x0, having=0x0, proc_param=0x0, select_options=2147748608, result=0x62b000063ed0, unit=0x62b00005f140, select_lex=0x62b0000622f0) at /data/src/10.4/sql/sql_select.cc:4826
#18 0x000055d0a2b83461 in handle_select (thd=0x62b00005b208, lex=0x62b00005f080, result=0x62b000063ed0, setup_tables_done_option=0) at /data/src/10.4/sql/sql_select.cc:442
#19 0x000055d0a2af328b in execute_sqlcom_select (thd=0x62b00005b208, all_tables=0x62b000062938) at /data/src/10.4/sql/sql_parse.cc:6473
#20 0x000055d0a2ae07a0 in mysql_execute_command (thd=0x62b00005b208) at /data/src/10.4/sql/sql_parse.cc:3976
#21 0x000055d0a2afc463 in mysql_parse (thd=0x62b00005b208, rawbuf=0x62b000062228 "SELECT * FROM t WHERE a IS NULL OR b IS NULL", length=44, parser_state=0x7f54366ac860, is_com_multi=false, is_next_command=false) at /data/src/10.4/sql/sql_parse.cc:8008
#22 0x000055d0a2ad27a6 in dispatch_command (command=COM_QUERY, thd=0x62b00005b208, packet=0x629000230209 "", packet_length=44, is_com_multi=false, is_next_command=false) at /data/src/10.4/sql/sql_parse.cc:1857
#23 0x000055d0a2acf315 in do_command (thd=0x62b00005b208) at /data/src/10.4/sql/sql_parse.cc:1378
#24 0x000055d0a2ece0ba in do_handle_one_connection (connect=0x6080000009a8) at /data/src/10.4/sql/sql_connect.cc:1420
#25 0x000055d0a2ecd9d1 in handle_one_connection (arg=0x6080000009a8) at /data/src/10.4/sql/sql_connect.cc:1324
#26 0x000055d0a3b3aaee in pfs_spawn_thread (arg=0x615000003508) at /data/src/10.4/storage/perfschema/pfs.cc:1869
#27 0x00007f543e4a7fd4 in start_thread (arg=<optimized out>) at ./nptl/pthread_create.c:442
#28 0x00007f543e5285bc in clone3 () at ../sysdeps/unix/sysv/linux/x86_64/clone3.S:81

Why is this case currently not being worked on? What conditions must be met to get it moving? We have had another crash of "mysqld" with signal 11 on another slave server, this time with version 10.3.20. After the first error report, the servers had been upgraded from 10.3.17 to 10.3.20 in the hope that this would help.